By Esther Chan, Lucinda Beaman and Stevie Zhang

Introduction

Earlier this year, as the rollout of Covid-19 vaccines created new questions and fertile ground for information disorder, Facebook announced an expansion of its policies on vaccine misinformation. While the effectiveness of these measures will take time to assess, First Draft research has put the spotlight on an area where misinformation is hiding in plain sight: comment sections.

Comment sections are often overlooked in policy responses. While they have to abide by Facebook’s overall policies on vaccine misinformation, they are not subject to the warning labels applied by Facebook’s third-party fact-checking partners. Meanwhile, moderation by news outlets and public health organizations often isn’t effective at keeping misinformation out of comment sections.

To better understand how vaccine misinformation is shared and how filter bubbles and echo chambers emerge in Facebook comment sections, First Draft analyzed the comments on a small selection of posts containing the word “vaccine” from two popular commercial Australian news organizations, which together are followed by more than three million users. The Facebook Pages of 9News, with more than two million followers, and Sky News Australia, with more than a million followers, were chosen as they are high-traffic, high-engagement Pages run by news organizations.

While Facebook’s policies on the removal of vaccine misinformation do apply to comment sections, our study finds that misleading claims about vaccines in these forums remain prevalent. On average, one in five comments on the Facebook posts we analyzed contains misinformation about Covid-19 vaccines or the pandemic itself.

This research focuses on the limited case study outlined above. It does not attempt to describe the overall scale of vaccine misinformation on the platform or to single out any publisher for its content moderation practices on social media.

Key findings

Comments on the Facebook Pages of the news organizations First Draft studied included skepticism and fears related to the safety and efficacy of the vaccines — some of which were founded on distrust in authorities, as well as harmful mis- and disinformation and conspiracy theories about vaccines and the pandemic in general. For example:

“We do not want the poison vaccines in our body!! We have immune system! How many people died from this jab and how many will …. This is depopulation by vaccination and yes they all in it together!”

These and other false claims abound, regardless of whether they’re attached to posts containing accurate information. A space where people can freely comment could well encourage practical, constructive discussions, but First Draft’s research, specifically on Pages run by news organizations, has found that comment sections can act as an echo chamber for those skeptical about vaccines, and prove to be fertile ground for the spread of misinformation.

Our analysis began on April 16 using the five most recent posts from each news organization that featured the word “vaccine,” and all ten posts were published April 15 and 16. Our team then manually identified comments that contained vaccine misinformation based on parameters that are in line with Facebook’s Community Standards. By comparing the number of comments that contained vaccine misinformation and the total number of comments on the five posts from each Page, we found that around 26 percent of comments on 9News’s posts and 19 percent of those on Sky News Australia’s posts contained misinformation about the Covid-19 pandemic or vaccines.

Across these ten Facebook posts containing the word “vaccine” from 9News and Sky News Australia between April 15 and 16, our analysis found that 591 out of a total of 2,913 comments contained Covid-19 misinformation. In other words, roughly one out of five comments on these ten Facebook posts was misleading or contained false claims about the Covid-19 vaccines or the pandemic.
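As a quick check of that arithmetic, the short sketch below recomputes the share from the two counts reported above. The variable names are illustrative only and are not part of First Draft’s analysis.

```python
# Counts reported in the text above (ten posts, April 15 and 16).
misinformation_comments = 591
total_comments = 2913

# Share of all comments that contained Covid-19 misinformation.
share = misinformation_comments / total_comments

print(f"{share:.1%}")                     # prints 20.3%
print(f"about 1 in {round(1 / share)}")   # prints about 1 in 5
```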

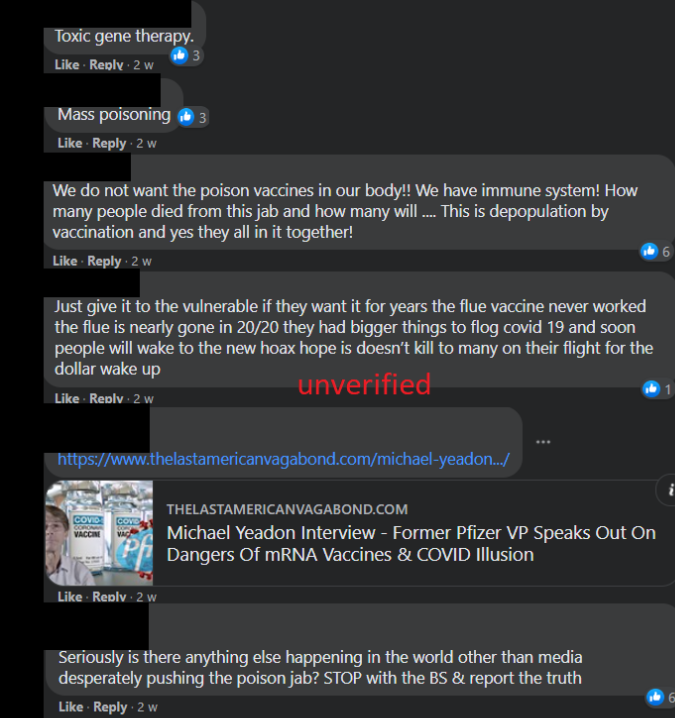

The post with the highest percentage of misinformation in its comment section was published by Sky News Australia on April 15 with a news segment about vaccine rollout for Australia’s regional communities. Twenty-two of the 37 comments contained false or misleading claims or conspiracy theories about the vaccine. A dominant theme among the comments containing misinformation was the false claim that the vaccines are “poisoning” people or are a “toxic gene therapy”.

Screenshot captured May 5 shows comments on Sky News Australia’s April 15 Facebook post featuring a news segment about vaccine rollout for regional communities

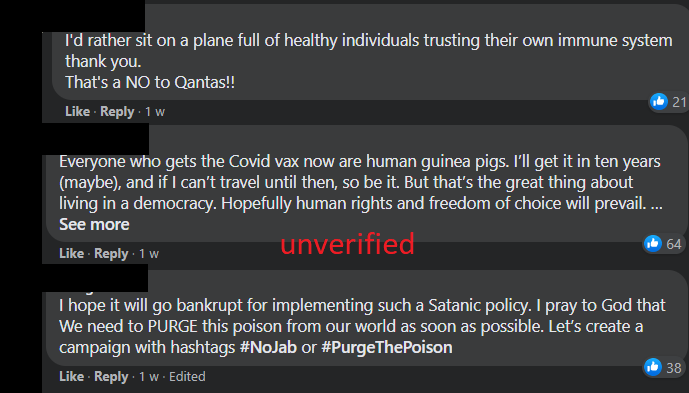

The post with the lowest percentage of comments containing misinformation was a Sky News Australia post about Qantas Airways’ plan to allow only vaccinated people to fly with it. Of the 2,001 comments, around 15 percent contained misinformation about vaccines. However, there is more to the picture.

While many of the remaining comments simply expressed disagreement with Qantas’ plan, our research uncovered other problems: there was a high number of derogatory comments about Qantas CEO Alan Joyce’s sexuality and Irish nationality. Expressions of contempt, including homophobia, and attacks against people based on their national origin both violate Facebook’s Community Standards on hate speech.

Screenshot captured May 2 shows comments on Sky News Australia’s April 15 Facebook post on Qantas’ proposed vaccination-related policy

Vaccine misinformation in comment sections is not unique to the Facebook Pages of Australian news publishers. As First Draft’s researchers have found, Facebook posts containing the word “vaccine” from news outlets in the rest of the APAC region — such as NDTV and Republic TV in India, as well as EMTV and NBC in Papua New Guinea — also contain misinformation in the comment sections to varying degrees.

Implications

This snapshot analysis highlights two challenges to efforts against misinformation in Facebook’s comment sections. The first is that comments, rather than the posts to which they are attached, can be the main source of misinformation — and a source that often goes undetected. Users scrolling to read comments might miss warning labels attached to the post, or the context and details the post itself has to offer. Studies have also shown that Facebook users tend to interact with posts that contain links without reading the linked article, much less finishing it.

The second challenge is that online comments, particularly those contradicting the news article in the post, can affect readers’ opinions more than the article itself. In order to combat misinformation about Covid-19 and vaccines, audiences are often told to seek out reputable sources, such as official medical advice or mainstream media outlets. While these outlets’ reporting may be accurate, unmoderated comment sections may nonetheless expose readers, even those actively seeking verified information, to misinformation.

Tackling these issues will require efforts from both Facebook and publishers. A Facebook spokesperson told First Draft on April 28, “Community Standards apply to everyone, all around the world, and to all types of content. People can report potentially violating content, including Pages, Groups, Profiles, individual content and comments.”

The spokesperson added that “since the pandemic began, we’ve also used our current AI systems and deployed new ones to take COVID-19-related material our fact-checking partners have flagged as misinformation and then detect copies when someone tries to share them.” Our study, however, found that comments violating Facebook’s policy on vaccine misinformation remained on the posts.

Further research is needed to assess whether this combination of human and artificial intelligence is effective at detecting and responding to misinformation on Facebook, which experts say lacks the technology to enforce its content moderation policies.

What this means for news publishers

Another layer of moderation lies with the page owners — in this case, news publishers. According to Facebook, publishers have control over who can comment on their posts and what comments are visible to other visitors by hiding or deleting a comment. An announcement from Facebook on March 31 offered more control over comments on public posts, allowing publishers to “further control how you want to invite conversation onto your public posts and limit potentially unwanted interactions.”

To limit their audiences’ exposure to misinformation, news organizations should arguably moderate their comment sections, but the sheer number of comments means this places a massive burden on newsrooms. First Draft approached Sky News Australia and 9News regarding their comment moderation policies. Sky News declined to comment and 9News had not responded at the time of publication.

Dr. Derek Wilding, co-director of the Centre for Media Transition at the University of Technology Sydney, said there are currently no laws in Australia specifically covering online comments, although defamation law might apply, as could restrictions on conduct such as inciting violence.

“Regulation applying to user comments is patchy because there’s no central source of regulation for online content generally,” Wilding said.

Conclusions

Our findings point to a gap between Facebook’s misinformation policies and outcomes that the company should work to improve, and to a need for greater content moderation by publishers.

In a statement to First Draft, Josh Machin, head of public policy for Facebook in Australia, said that “misinformation is a highly adversarial and ever-evolving space, which is why we continue to consult with outside experts, grow our fact-checking program, and improve our internal technical capabilities.”

As First Draft previously recommended, platforms would do well to institute regular independent audits to ensure their policies are working. The idea of free speech and the responsibilities of social media and news companies are difficult areas to navigate. Yet in the midst of a pandemic, it is critical for platforms and publishers to assess how discussions taking place in comment sections might shape attitudes and behaviors, and to make timely, rigorous decisions to stop the spread of harmful information.

Methodology

Using the online tool Export Comments, all comments from the five latest posts published by 9News and Sky News Australia containing the word “vaccine” up until April 16 were extracted for analysis, excluding spam and comments from private or suspended/disabled accounts. Our team then manually identified comments that contained Covid-19 and vaccine misinformation based on parameters that are in line with Facebook’s Community Standards; assessed the percentage of comments that contained vaccine misinformation in each of the ten posts; and studied the themes.
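The tallying step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the workflow First Draft used: it assumes each exported comment has already been manually labeled, and the function name `misinformation_share`, the post identifiers and the toy labels are all hypothetical.

```python
from collections import defaultdict


def misinformation_share(labels):
    """Return the share of flagged comments for each post.

    `labels` is an iterable of (post_id, is_misinformation) pairs,
    one pair per manually reviewed comment.
    """
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for post_id, is_misinformation in labels:
        totals[post_id] += 1
        if is_misinformation:
            flagged[post_id] += 1
    return {post_id: flagged[post_id] / totals[post_id] for post_id in totals}


# Hypothetical toy labels for illustration only, not data from the study.
toy_labels = [
    ("post_a", True), ("post_a", False), ("post_a", False), ("post_a", False),
    ("post_b", True), ("post_b", True),
]

shares = misinformation_share(toy_labels)
for post_id, share in shares.items():
    print(f"{post_id}: {share:.0%} of comments flagged")
```

Keeping the manual judgment as a simple true/false label per comment separates the human review from the counting, so the percentages can be recomputed if any label is revised.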

To show that misinformation in Facebook comment sections is not confined to Australia, we also drew on similar observations from First Draft’s previous research on the Facebook Pages of selected news outlets in India and Papua New Guinea.

This analysis provides a snapshot of vaccine misinformation in Facebook’s comment sections based on a limited case study. The overall scale of misinformation on the platform, the effectiveness of its policies and the level of content moderation by publishers all require further study.

Anne Kruger, Ali Abbas Ahmadi and Carlotta Dotto contributed to this report.
