CrossCheck is an online verification collaboration that began in February 2017. Our first project brought together 37 newsroom and technology partners in France and the UK to help accurately report false, misleading and confusing claims that circulated online in the ten weeks leading up to the French Presidential election in May 2017.

The aim of CrossCheck is to provide the public with the necessary information to form their own conclusions about the information they receive. By working collaboratively, we hope to more quickly ascertain what is not factual or reliable, and to give that information to newsrooms and the public.

How the project started

Our CrossCheck newsroom and technology partners.

In November and December 2016, 63 of our partners met at three different meetings to discuss the growing problem of misinformation. Many people suggested working on a project related to the French election because of how pivotal it was for the whole of Europe. The geographical size of France also felt manageable, which mattered for a first-time effort with a lean staff.

The project was the brainchild of Jenni Sargent. She ran a four-hour meeting in Paris on January 6 with more than 40 French journalists from different newsrooms, who were originally skeptical about how they could collaborate, particularly on a story as “big” as the Presidential election. But they agreed in principle, and in less than three weeks the project took shape, including the design and build of the website and visual language, deciding on workflows and protocols, hiring project editors, and choosing the technologies that would power the project.

Everything came together for collaborators during a two-day “bootcamp” in a chateau outside Paris, where all newsroom partners and project editors were trained in monitoring and verification techniques, learned how the different tools worked, and found ways to integrate CrossCheck workflows into their own newsroom systems. More importantly, the time allowed people to get to know each other in person, in classrooms but also over dinner, drinks and even karaoke. Journalists who normally compete got to know one another at our training. Collaborative projects like this can often focus on the technology, but we also wanted to emphasize the interpersonal elements. One of the project journalists admitted at the recent wrap event, “After the bootcamp, I felt comfortable saying ‘I don’t know’ in Slack. I don’t think any of us would have been comfortable saying that if we hadn’t spent that time together at the bootcamp.”

How the debunk process worked

We wanted to help the public navigate the misinformation circulating around the election, so we wanted to hear directly from audiences. We created an online form using Hearken, which specializes in audience listening and building. Some questions involved sharing a URL and asking whether the content was true. Other questions were directly related to policy positions or claims.

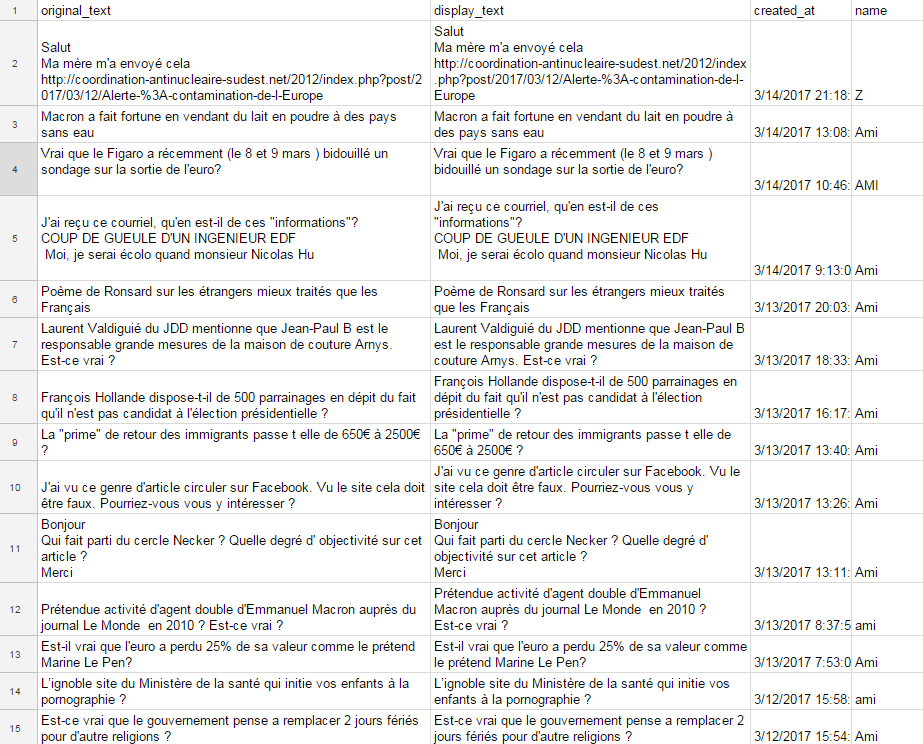

Spreadsheet from a Hearken intake form.

Our Hearken form required only two fields: the person’s question and email address. The questions were automatically posted to the collaborative discussion platform Slack, where top project editors selected the most pertinent questions to pursue. The project received more than 584 questions from the public between February 26 and May 8, 2017.

Another crucial element of the project was including both national and regional newsrooms. National partners had the capacity, in terms of both staffing and in-house knowledge and skills, to publish all debunks posted to CrossCheck. Smaller newsrooms benefitted from having access to cutting-edge technology and verification experts to support them in writing articles on the stories affecting their region of France.

We also placed 12 students as project editors in newsrooms across France to extend CrossCheck’s reach on the ground. Each student received training on a combination of pioneering technologies and advanced search and verification techniques to power their work and to train others within that newsroom on the same tools.

Problematic content surfaced either directly from the audience or via independent monitoring of the social web, which was carried out using advanced search techniques on TweetDeck, and using CrowdTangle on Facebook and Instagram. The team also used NewsWhip to see whether the content was likely to trend, which was a crucial part of the project. Sometimes misinformation was discovered but the team decided not to pursue it, for fear of giving more oxygen to a story that was likely to die out of its own accord.

This article was the most popular CrossCheck during the project, based on page views.

Once a decision was made to research a claim or a piece of content, one of the project editors was assigned, and the verification or fact-checking work was reported on the Check platform for all partners to see. Everyone was notified on Slack once the work was completed, and the different newsrooms then debated there how to explain and classify the debunk.

AFP was the editorial lead for the project, and once more than two partners signed off on a report, AFP published the story on the website. By the end of the project, 64 debunks were published on the website, in French and English.

To the right of each published report on the CrossCheck website are the logos of each newsroom that participated in and confirmed the investigative work. Each report is published with one of the following labels: True, False, Caution, Insufficient Evidence or Attention. If the story was false, we further clarified how it was false (manipulated, fabricated, misattributed, misleading, misreported or satire) to help readers see that there is more nuance to the “fake news” issue than the way it is often described.
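For readers who think in code, the sign-off rule and the labels described above can be sketched roughly as follows. This is only an illustration of the workflow, not the way Check, Slack or the CrossCheck website actually implemented it: the names `Label`, `FalseType`, `Report`, `sign_off` and `ready_to_publish` are hypothetical, and the requirement that a False report carries a sub-category before publication is our assumption.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional, Set


class Label(Enum):
    """The five labels a published report could carry."""
    TRUE = "True"
    FALSE = "False"
    CAUTION = "Caution"
    INSUFFICIENT_EVIDENCE = "Insufficient Evidence"
    ATTENTION = "Attention"


class FalseType(Enum):
    """How a false story was false."""
    MANIPULATED = "manipulated"
    FABRICATED = "fabricated"
    MISATTRIBUTED = "misattributed"
    MISLEADING = "misleading"
    MISREPORTED = "misreported"
    SATIRE = "satire"


@dataclass
class Report:
    """Hypothetical model of one debunk awaiting publication."""
    claim: str
    label: Label
    false_type: Optional[FalseType] = None
    signoffs: Set[str] = field(default_factory=set)

    def sign_off(self, newsroom: str) -> None:
        # A set means a newsroom signing off twice is counted once.
        self.signoffs.add(newsroom)

    def ready_to_publish(self) -> bool:
        # Assumption: a False report needs its sub-category first.
        if self.label is Label.FALSE and self.false_type is None:
            return False
        # "More than two partners" means at least three sign-offs.
        return len(self.signoffs) > 2


report = Report("Example claim", Label.FALSE, FalseType.MISATTRIBUTED)
report.sign_off("Newsroom A")
report.sign_off("Newsroom B")
print(report.ready_to_publish())  # False: only two sign-offs so far
report.sign_off("Newsroom C")
print(report.ready_to_publish())  # True: a third partner has signed off
```

Storing sign-offs as a set reflects the idea that each logo next to a report stands for a distinct newsroom.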

As the project progressed and our audience on Facebook grew, we realized we needed more visual content, so some project editors created videos explaining the debunks. This one, created by Juliette Mauban-Nivol, reached more than 1 million people and had more than 800,000 views.

CrossCheck expectations and results

We had several hunches going into CrossCheck:

- Collaborative journalism helps build capacity around verification, which benefits all newsrooms.

- Having multiple logos next to each published article might boost trust with readers.

- Giving a layered distinction to each post improves news literacy and creates better news consumers.

- By taking readers through a step-by-step analysis of where an online claim was right, and where and how it was false, we could better inform the public.

Did we accomplish any of our goals? We are about to start a three-month period of research to better understand the project’s impact, including:

- How many people did the project reach? Who shared the debunks on social networks? What do the comments tell us about how they were received?

- From a social psychological perspective, did the wording of headlines, graphics and visual vocabulary help or confuse readers? Was trust increased because multiple newsrooms worked together, or did it create a sense of a media conspiracy?

- What impact did the project have on the voting public? Did the project stop rumors before they propagated on the social web?

- How well did newsrooms work together? What are the challenges as well as the opportunities of collaborative journalism?

We are eager to see where this project resonated with readers and where it fell short, and will share that information with our partners and the public. Once we review the findings from our first “living laboratory,” we will continue to refine CrossCheck and look forward to future collaborations in other countries and languages and on other topics.