We’ve all done it. Noticed that juicy, surprising, shocking story appear in our Facebook news feeds, and hit the ever-tempting Share button. Later (moments, hours, days, weeks) we realize, or someone informs us, that the story was a fake. Maybe we delete the post, maybe we call out the fake story in a comment, maybe we forget about it. Maybe we don’t even realize we’ve helped spread misinformation.

In what sometimes feels like a 24-hour, social media-driven, breaking news cycle, online fake news — sometimes humorous, sometimes political, sometimes personal — has a tendency to spread quickly across social networks. What role, then, should social media companies — in particular Facebook, Twitter, and YouTube — play in fighting viral misinformation?

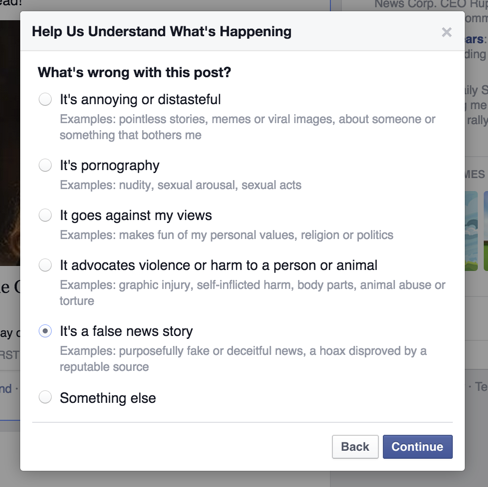

Facebook recently became the first company to implement an early solution directly addressing the problem: a new option to flag News Feed items with “It’s a false news story”. If enough users flag a link as “false news”, it will appear less often in the News Feed and will show a warning: “Many people on Facebook have reported that this story contains false information.”

The problem(s)

It’s entirely laudable that Facebook is trying out this new feature: online misinformation can have serious, life-threatening offline consequences, especially in parts of the world where digital media literacy is low and information ecosystems are weak. The approach, however, raises some serious questions:

Is there any way to stop people gaming the system?

All systems can be gamed, but in the case of Facebook, we’ve already seen a steady stream of important groups and pages shut down, likely prompted by “community” reporting of those pages. While community reporting may seem a viable approach in theory, in practice it is often used as a tactic by those who disagree with a certain view or ideology.

Most prominently this has been seen with Syrian opposition groups using Facebook to document and report on the ongoing civil war:

Facebook does not disclose information about who reported whom, making it impossible to confirm these theories. But the pro-Assad Syrian Electronic Army (SEA) […] has publicly gloated about this tactic. “We continue our reporting attacks,” read a typical post from December 9 on the SEA’s Facebook page.

It’s thus easy to envision activist groups flagging ‘false’ news stories en masse based not on their factual content, but on their desire to silence an opposing or dissenting voice. Once a flagged news story has disappeared from our feeds it’s unclear if there’s a way to challenge the ‘false’ assignment, or even a way to see a list of the stories that have been blocked.

Facebook’s new ‘False News Flag’, via The Next Web

Are ‘false news stories’ and stories which ‘contain false information’ the same thing?

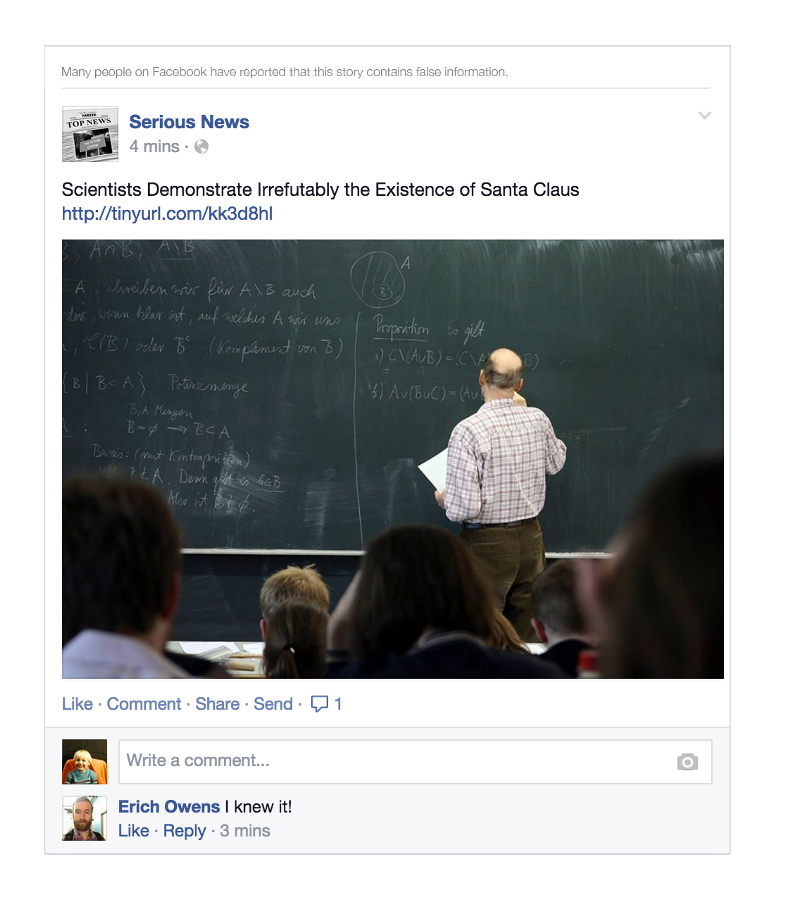

The protective disclaimer, “Many people on Facebook have reported that this story contains false information”, raises many questions that seem likely to go unanswered: Which piece of information in the story is false? Is it that the key facts of the story are false, or that there’s an error in a background statistic? Is there strong evidence that proves that this is false? Who is claiming it is false?

Did you see the tiny disclaimer added up here? via The Next Web

Without knowing any of the above, it’s difficult to assess whether the disclaimer serves more to clarify or confuse. And if Facebook is trying to stop the spread of false news, why is this story even appearing in my feed?

Does this all mean that the stories that appear in my news feed are 100 per cent true?

The argument could be made that if Facebook is seen to intervene and remove ‘false news stories’ from the system, users will logically assume that stories that are not removed have a higher degree of credibility. This may not necessarily be the case, and in our world of ‘filter bubbles’ this tendency could help strengthen our confirmation bias and actually limit the flagging of false news stories.

3 ways Facebook can improve their false news feature

Our work on Checkdesk has led to many great conversations with lots of fascinating people about the challenges of viral misinformation online; some of them have likely already posted on this topic or may do so in the near future. In the meantime, here are a few suggestions for other ways Facebook could help limit the spread of fake news stories.

1. Help spread the debunks

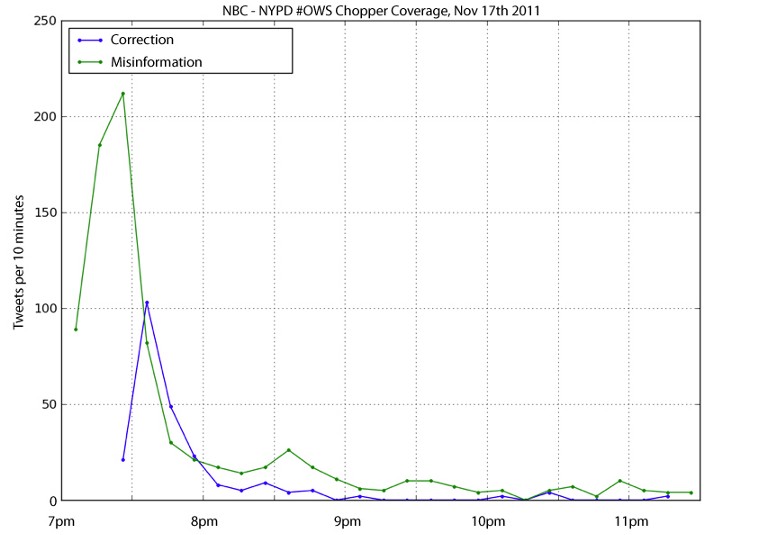

Research suggests that fake news stories typically spread faster and wider than the articles debunking them. Craig Silverman, debunker extraordinaire and editor of the Verification Handbook, is also working on the excellent Emergent.info, which tracks the spread of online rumors and articles debunking the fakes. A quick glance at Emergent strongly suggests that fakes propagate faster and wider than corrections; hopefully Craig’s research will shed more light on that relationship.

Comparing propagation of an incorrect report to its correction by traffic, by Gilad Lotan, via Poynter

If Facebook is taking the step of filtering stories flagged as ‘fake’, then maybe it could also lend a hand in helping spread articles which directly debunk those fakes, perhaps even directly into the news feeds of people who shared the original fake stories.

2. Show the work

If media companies (Facebook included) want to help users make smart choices about the media they are consuming, then transparency in reporting is essential. Typical disclaimers (“This report could not be independently verified”, “Many people have flagged this story as containing false information”) are confusing because they are so incomplete: there’s no indication as to the extent of certainty or verification.

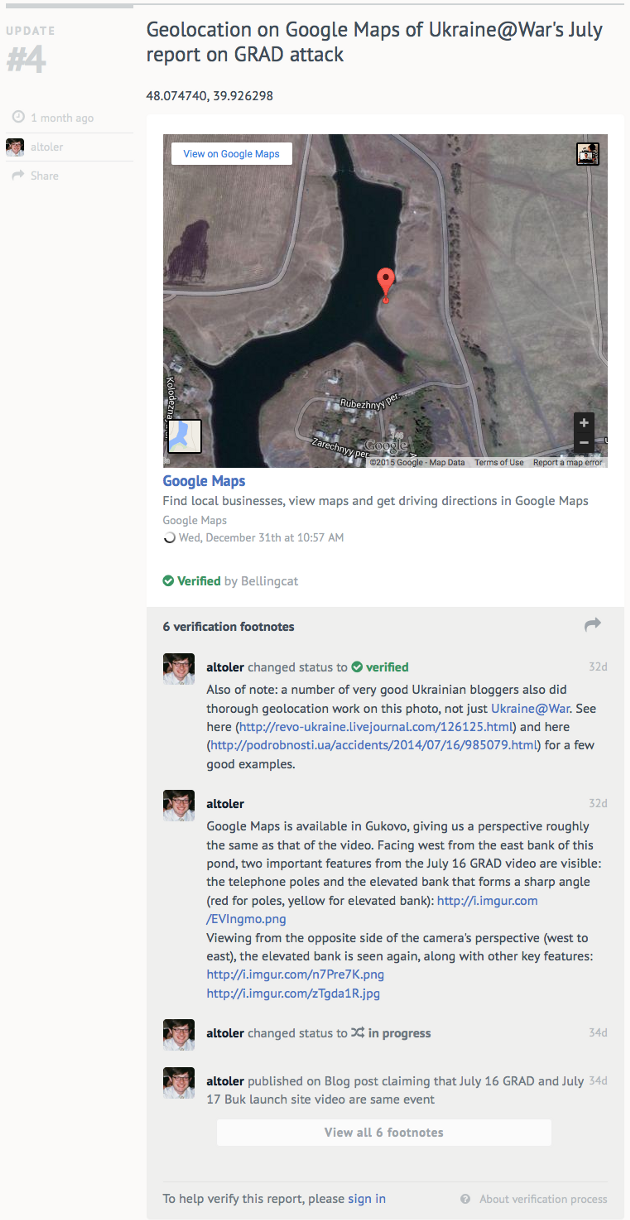

With Checkdesk, we try to solve this problem with verification footnotes (allowing journalists and community members to ask questions and add important contextual or corroborating information about a specific link) and statuses (“Verified”, “In Progress” etc). While this is deliberately a highly manual process, others have looked at ways of presenting “truthiness” based on algorithmic analysis, such as Rumor Gauge. Scalability is clearly an issue for Facebook, so a combination of algorithmic analysis and community-driven contextual information could be used to make the filtering process more transparent and accountable.

The aim should not be to simply filter out stories, but to give users the information they need to assess whether a story is real, fake or somewhere in between.

3. Support digital media literacy

It shouldn’t be a surprise that fake stories spread far and fast: if even journalists at world-class newsrooms are struggling to sort online fact from fiction, then it’s hard to know what to trust. Internet users around the world need better tools and information about the risks and dangers of misinformation, and basic knowledge about how to spot (or better, check) a fake story.

Progress has been made in this area in recent years, but more resources such as the Verification Handbook are needed (and in more languages!) to guide people to ask the right questions about the link they’re retweeting or the post they’re sharing.

Debunking projects such as DaBegad? do tremendous work in this space: their page has gained over 600,000 likes in just a few years, and the project reviews and debunks viral posts on a daily basis. Not only does this serve to counter the specific narratives being propagated for political ends in a deeply divided community in Egypt, but it also raises general awareness of the existence of dangerous misinformation online.

What are your thoughts on Facebook’s new feature? What suggestions do you have for helping make it more effective?

Tom Trewinnard from Meedan first published this on February 4, 2015 at Meedan updates on Medium. We have reproduced it here as part of The First Draft collection.