In 2016, the social networks, search engines, newsrooms and the public were not ready for election-related misinformation.

From the headlines pushed by Macedonian teenagers, to the Facebook ads published by Russia’s Internet Research Agency, to the automated and human-coordinated networks pushing divisive hashtags, everyone was well and truly played.

Four years later, there is more awareness of the tactics used during the 2016 US presidential election, with technology companies putting some measures in place. Google and Facebook changed their policies, making it more difficult for fabricated “news” sites to monetize their content. Facebook has built an ad library so it’s easier to find out who is spending money on social and political advertising on the platform. Twitter has become more effective at taking down automated networks.

Despite these measures, those who want to push divisive and misleading content are devising and testing new techniques to stay a step ahead of the platforms’ changes.

One of the most worrying possibilities is that most of the disinformation will disappear into places that are harder to monitor, particularly Facebook Groups and closed messaging apps.

Facebook ads are still a concern. One of Facebook’s most powerful features is its advertising product. It allows the administrators of a Facebook Page to target a very specific subsection of people — women ages 32-42 who live in Raleigh-Durham, have children, have a graduate degree, are Jewish and like Elizabeth Warren’s Facebook Page, for example.

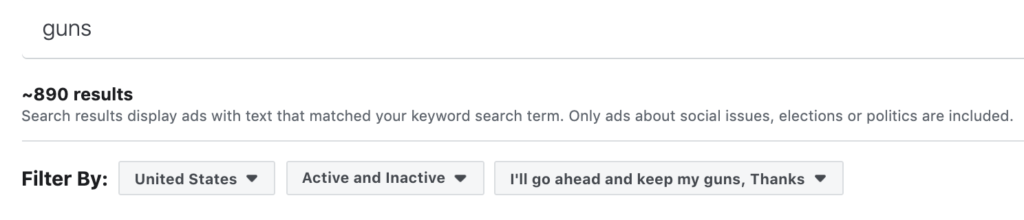

Facebook even allows ad buyers to test these advertisements in environments that allow them to fail privately. These “dark ads” let organizations target certain people, but they don’t sit on that organization’s main Facebook Page, thus making them difficult to track. Facebook’s Ad Library gives you some ability to look at the types of ads certain candidates are running, or to search around a keyword — “guns,” for example. We explain how to use the Ad Library later in this guide.

Why are these dark spaces so worrying?

In March 2019, Mark Zuckerberg announced Facebook’s pivot to privacy. This description reflects what had already started to happen. Over the past few years, people have moved away from spaces where they can be monitored and targeted, likely a result of learning what happens when you post in public spaces. Children grow up and complain that they never consented to those baby photos being searchable in Google. People lose their jobs after drunk or ill-considered tweets, or realize that law enforcement, insurance adjusters and border protection officers are watching what gets posted. Then there is the disturbing reality of online harassment, particularly targeting women and people of color.

In response, some people are making their Instagram profiles private. Others are reading the Facebook privacy tips and locking down the information available on their profiles, or practicing self-censorship on Twitter. In certain parts of the world, regulation aimed at punishing those who share false information might have a chilling effect on free speech, according to activists, further incentivizing a migration to closed apps.

This shift toward closed spaces is understandable, and we suspect historians will look back at the last ten years as a very strange and unusual period where people were actually happy broadcasting their activities and opinions. The pivot to privacy is really only a transition back to the norm, with conversations involving smaller groups of people, especially those with whom you have a higher level of trust or affinity.

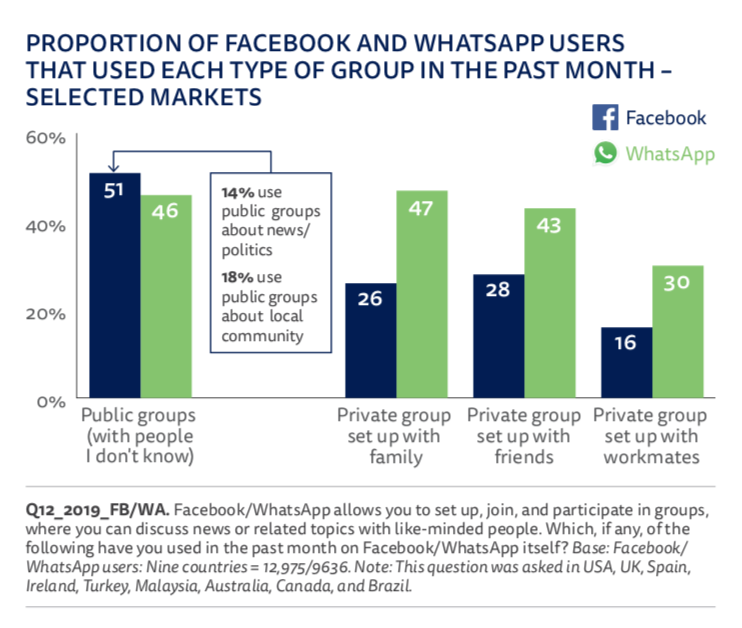

This graph from the 2019 Reuters Institute Digital News Report shows how many people rely on groups on Facebook and WhatsApp for news and politics.

How Facebook and WhatsApp users rely on groups for news and politics. Reuters Institute for the Study of Journalism.

For journalists attempting to understand, refute and slow the distribution of viral rumors and false information, however, this shift makes things very difficult.

When information travels on closed messaging apps, be it WhatsApp or Facebook Messenger, there is no provenance. There is no metadata. There is no way of knowing where the rumors started and how they traveled through the network.

Many of these spaces are encrypted. There’s no way of monitoring them with TweetDeck or CrowdTangle. There’s no advanced search for WhatsApp. While encryption is a positive thing, when it comes to tracking disinformation — particularly disinformation designed to be hidden, such as voter suppression campaigns — it starts to become worrying.

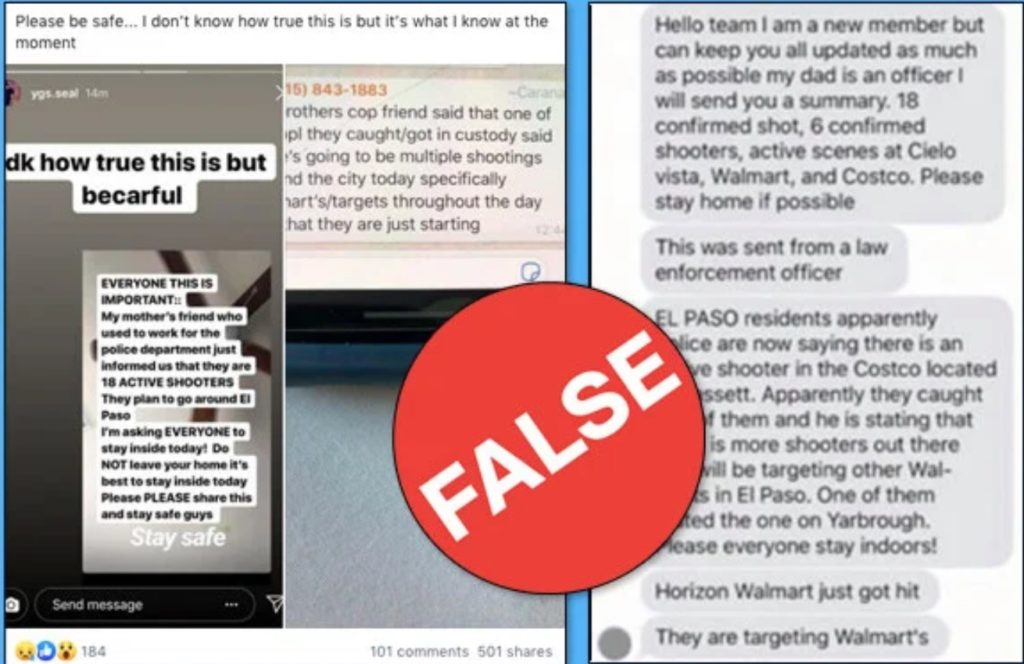

Jane Lytvynenko from BuzzFeed regularly tracks rumors and falsehoods during breaking news events. As she watched events unfold during the mass shootings in El Paso, Texas, and Dayton, Ohio, in early August 2019, she saw for the first time significant levels of problematic content circulating in closed spaces including Facebook Messenger, Telegram, Snapchat and Facebook Groups. She wrote about her experience for BuzzFeed.

Misinformation about the El Paso and Dayton shootings circulated in closed messaging spaces.

This shift in tactics creates new challenges — especially ethical ones — for journalists. How do you find these groups? Once you find them, should you join them? Should you be transparent about who you are when you join a group on a closed messaging app? Can you report on information that you’ve gleaned from these groups? Can you automate the process of collecting comments from these types of groups? We’ll tackle these questions and more throughout this guide.

Understanding ad libraries

The Facebook Ad Library allows you to investigate advertisements running across Facebook products. You don’t have to have a Facebook account — the library grants anyone the ability to see active and inactive ads related to any topic.

For journalists and researchers, the tool offers unprecedented scope for finding and monitoring information about political advertisements around the world, including who is paying for them.

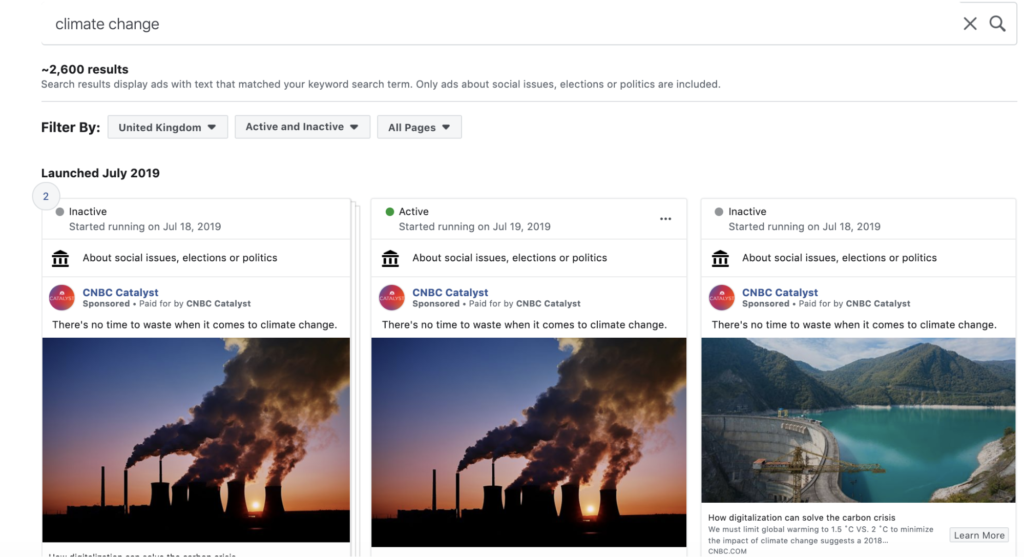

There are two main ways to search: “search all,” which lets you search by Facebook page name, and “issues, elections or politics,” which allows you to search by keyword. If you searched for “climate change,” for example, the library would return a list of all active and inactive ads that have run around the issue.

The Facebook Ad Library will show you a list of all active and inactive ads that have run around a particular topic. Screenshot by author.

Clicking on each post gives you more detailed information, including who funded the advertisement, where it was shown, what demographic is targeted and basic information on how much was spent on the ad.

You can combine your search with various filters to specify whether you want to look at ads across the globe or in a particular country, and whether you want active ads, inactive ads or both. You can also search for keywords and then filter the results by specific Facebook Pages.

For example, you could search for “guns” and then limit the results to the Page “I’ll go ahead and keep my guns, Thanks” to get insight into its advertising campaign and financing.

The Ad Library also allows you to limit search results by page. Screenshot by author.

The Facebook Ad Library Report lets you get a general overview of all Facebook ads running around social issues, elections and politics for different countries and date ranges. You can download these reports in CSV format for further analysis.
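If you download one of these CSV reports, a few lines of Python are enough to rank advertisers by spend. The sketch below assumes the report has columns named “Page Name” and “Amount Spent (USD)”; those names are assumptions, so check them against the header row of your file. Rows whose spend is not a plain number (for example, if the report buckets small amounts as something like “≤100”) are skipped rather than guessed at.

```python
import csv
from collections import defaultdict
from typing import Iterable


def load_report(path: str) -> list[dict]:
    """Read a downloaded report into a list of row dictionaries."""
    # "utf-8-sig" also handles files that begin with a byte-order mark.
    with open(path, newline="", encoding="utf-8-sig") as f:
        return list(csv.DictReader(f))


def top_spenders(rows: Iterable[dict], n: int = 10,
                 name_col: str = "Page Name",             # assumed column name
                 spend_col: str = "Amount Spent (USD)",   # assumed column name
                 ) -> list[tuple[str, float]]:
    """Sum spend per Page and return the n largest totals, biggest first."""
    totals: dict[str, float] = defaultdict(float)
    for row in rows:
        raw = (row.get(spend_col) or "").replace(",", "").strip()
        try:
            amount = float(raw)
        except ValueError:
            continue  # bucketed or blank values cannot be summed exactly
        totals[(row.get(name_col) or "").strip()] += amount
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:n]
```

For example, `top_spenders(load_report("ad_library_report.csv"), n=20)` would list the 20 biggest spenders; the filename is a placeholder for whichever report you downloaded.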

You can also access the Facebook Ad Library API for even more granular data or to build up your own database of Facebook advertisements. Facebook has published a guide with instructions on installation and use.
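As a rough illustration of what a query looks like, the sketch below builds a request to the API’s `ads_archive` endpoint and follows the `paging.next` links that Graph API responses include. The API version string, the field list and the way the country list is encoded are assumptions based on Facebook’s documentation at the time of writing, so consult the official guide before relying on them. You need your own access token, shown here only as a parameter.

```python
import json
import urllib.parse
import urllib.request
from typing import Iterator, Optional

# Assumption: the Graph API version changes over time; check Facebook's guide for the current one.
GRAPH_VERSION = "v5.0"

# Assumption: field names as listed in the Ad Library API docs when this was written.
DEFAULT_FIELDS = ",".join([
    "page_name",
    "funding_entity",
    "ad_delivery_start_time",
    "spend",
    "impressions",
    "demographic_distribution",
    "region_distribution",
])


def build_ads_archive_url(token: str, search_terms: str, countries: list[str],
                          active_status: str = "ALL",
                          fields: str = DEFAULT_FIELDS) -> str:
    """Build a query URL for political and issue ads matching a keyword."""
    params = {
        "access_token": token,
        "search_terms": search_terms,
        # Assumption: countries are passed as a JSON-style array, e.g. ["US"].
        "ad_reached_countries": json.dumps(countries),
        "ad_type": "POLITICAL_AND_ISSUE_ADS",
        "ad_active_status": active_status,  # "ALL", "ACTIVE" or "INACTIVE"
        "fields": fields,
    }
    query = urllib.parse.urlencode(params)
    return f"https://graph.facebook.com/{GRAPH_VERSION}/ads_archive?{query}"


def fetch_ads(url: Optional[str], max_pages: int = 10) -> Iterator[dict]:
    """Yield ad records, following the 'paging.next' link in each response."""
    pages = 0
    while url and pages < max_pages:
        with urllib.request.urlopen(url) as resp:
            payload = json.load(resp)
        yield from payload.get("data", [])
        url = payload.get("paging", {}).get("next")
        pages += 1
```

The `max_pages` cap is an arbitrary safeguard that keeps an exploratory query from running on indefinitely; raise it once you trust the query.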

The library is not without its limitations. The API has been criticized for its bugginess, delivering incomplete data that might affect the reliability of your monitoring and research.

Also, make sure you turn off your ad blocker when using the library — it might affect your searches — and be skeptical if your search doesn’t return any results. If you try refreshing the page and performing the search again, you will likely get a new list of results.

Google ad transparency report

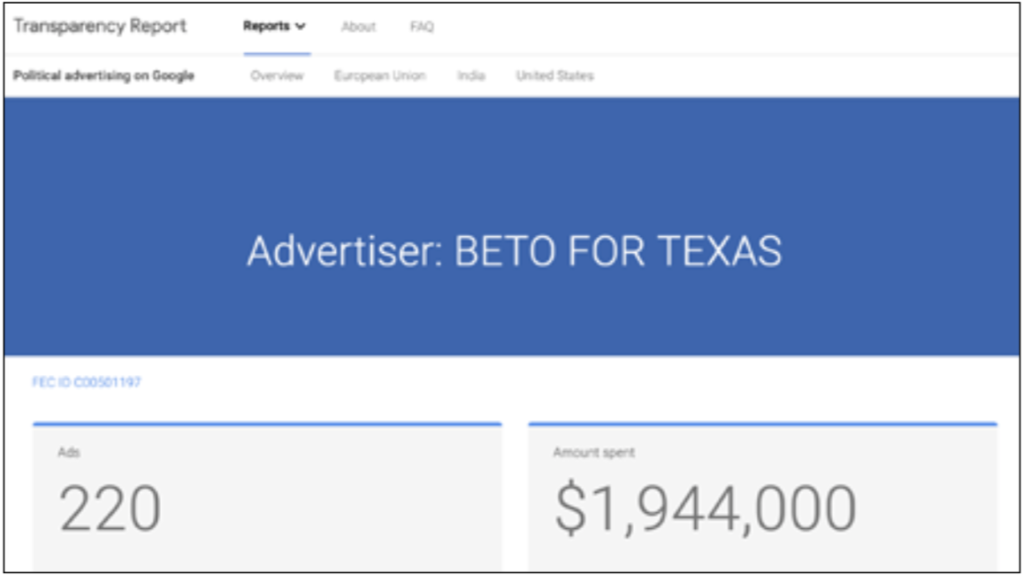

Google launched its Ad Transparency tool in August 2018. It doesn’t have quite the same functionality as Facebook, and right now it only works in the US, Europe, New Zealand and India, but it does hold ads on Google Ad networks, YouTube and other “partner networks.”

The database is updated weekly and contains information about “spending on ads related to elections that feature a candidate for elected office, a current officeholder, or political party in a parliamentary system,” according to Google.

The first thing you’re met with when clicking through to one of the regions is a map that breaks down the ad spend for each contested constituency: countries in the EU or states in the US and India, for example.

Scrolling down further shows the registered advertisers ranked by the amount they have spent and a library of all ads for the overall region. Users can explore these by date, ad spend, impressions and format.

Further down, it is possible to drill down into the top-spending political campaign organizations, to see how much money they’ve spent and what the ads look like.

Users can also search through the library for each region by candidate or keyword and download data in a CSV format to explore further.
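Once downloaded, the CSV can be narrowed to a particular advertiser and time window. This sketch assumes columns named `Advertiser_Name`, `Date_Range_Start` and `Date_Range_End`, with dates in ISO format (YYYY-MM-DD); treat those names and formats as assumptions and adjust them to the file you actually download. An ad is kept if any part of its run overlaps the window you specify.

```python
from datetime import date
from typing import Iterable


def ads_in_window(rows: Iterable[dict], start: date, end: date,
                  advertiser_contains: str = "",
                  start_col: str = "Date_Range_Start",   # assumed column name
                  end_col: str = "Date_Range_End",       # assumed column name
                  name_col: str = "Advertiser_Name",     # assumed column name
                  ) -> list[dict]:
    """Keep ads whose run overlaps [start, end] and whose advertiser name matches."""
    needle = advertiser_contains.casefold()
    kept = []
    for row in rows:
        try:
            ran_from = date.fromisoformat(row[start_col])
            ran_to = date.fromisoformat(row[end_col])
        except (KeyError, ValueError):
            continue  # skip rows with missing or non-ISO dates
        overlaps = ran_from <= end and ran_to >= start
        if overlaps and needle in (row.get(name_col) or "").casefold():
            kept.append(row)
    return kept
```

With the standard `csv` module, something like `ads_in_window(csv.DictReader(open(path, newline="")), date(2019, 10, 1), date(2019, 10, 31), "party")` would return the matching rows; `path` and the search text are placeholders.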

Google’s Ad Transparency tool. Screenshot by author.

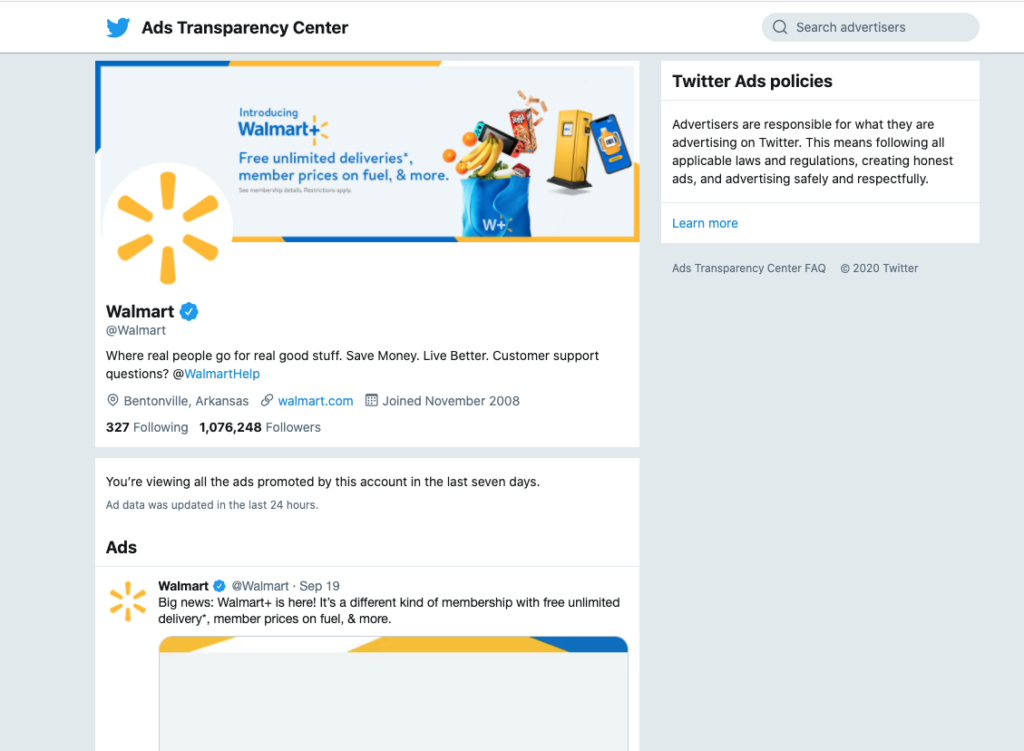

Twitter ads

In October 2019, Twitter chief executive Jack Dorsey announced that the platform would stop political advertising around the world, including a ban on political parties, candidates for political office and elected or appointed officials running any ads at all. As of November 22, 2019, Twitter’s updated content policy forbids political advertising worldwide, and issue advertising in the United States.

Twitter’s Ads Transparency Center, which launched in the summer of 2018, still exists as a searchable database of promoted tweets and video ads currently running on Twitter. Its most powerful tool is tucked away in the top right of the website: a search bar that lets users find any account around the world and see the ads it has paid to promote in the last seven days.

Snapchat ads

It’s worth mentioning Snapchat, which appears to have tried to get out in front of any potential criticism by making a public library of political and “advocacy” advertisements.

The Snap Political Ads Library is basic but detailed. Users can download a CSV of all such ads for 2018, 2019 or 2020 and explore the data to their heart’s content, looking at the organizations, money spent, impressions, messaging, demographics, links and imagery associated with each ad. So far for 2020, the spreadsheet includes more than 5,500 ads, and offers precise information missing from the other platforms discussed here.
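Because the Snap data is a flat spreadsheet, it is straightforward to summarize. The sketch below totals spend and impressions per organization and derives a cost per thousand impressions (CPM). The column names `OrganizationName`, `Currency Code`, `Spend` and `Impressions` are assumptions about the file’s layout, so check the header first. Totals are grouped by currency as well as by organization, since adding spend across currencies would be meaningless.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Totals:
    spend: float = 0.0
    impressions: int = 0

    @property
    def cpm(self) -> Optional[float]:
        """Cost per thousand impressions, or None if there were no impressions."""
        if not self.impressions:
            return None
        return self.spend * 1000 / self.impressions


def spend_by_org(rows: Iterable[dict]) -> dict[tuple[str, str], Totals]:
    """Aggregate spend and impressions per (organization, currency)."""
    totals: dict[tuple[str, str], Totals] = defaultdict(Totals)
    for row in rows:
        try:
            spend = float(row["Spend"])                           # assumed column
            impressions = int(row["Impressions"].replace(",", ""))  # assumed column
        except (KeyError, ValueError, AttributeError):
            continue  # skip rows with missing or non-numeric values
        key = ((row.get("OrganizationName") or "").strip(),
               (row.get("Currency Code") or "").strip())
        totals[key].spend += spend
        totals[key].impressions += impressions
    return dict(totals)
```

A high CPM relative to other advertisers can be a prompt to look more closely at how narrowly an ad was targeted, though it is not evidence of anything on its own.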

Facebook groups

Types

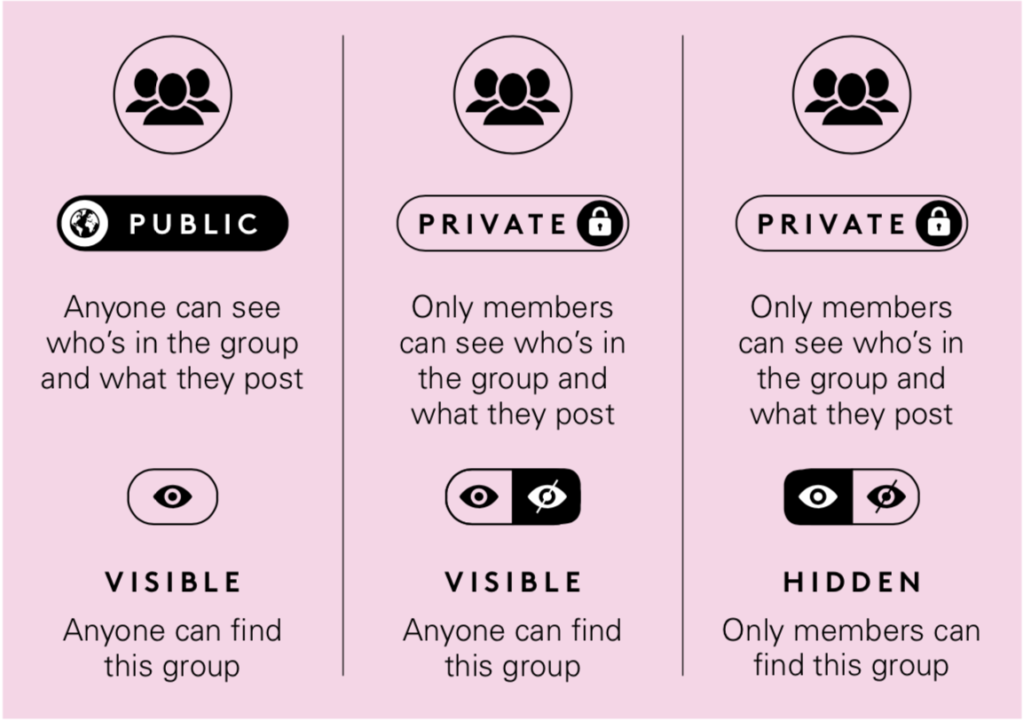

There are two types of Facebook groups: Public and Private. This controls who can join a specific Facebook group. As an added layer of security, groups can be hidden — which means that they can’t be found through the general search bar on Facebook.

The cheatsheet below helps explain the differences. Public and Private groups can be found in a Facebook search, but if it’s a hidden group, you have to ask to join and an administrator will approve or decline the request.

Mark Zuckerberg announced a refocus on Facebook groups in 2018. Screenshot by author.

Sometimes these groups will ask you specific questions about your views and opinions, or will ask you to agree to confidentiality or codes of conduct. (This can create ethical challenges for journalists, as discussed later in this guide.)

Facebook groups: the three types. Screenshot by author.

How to search them

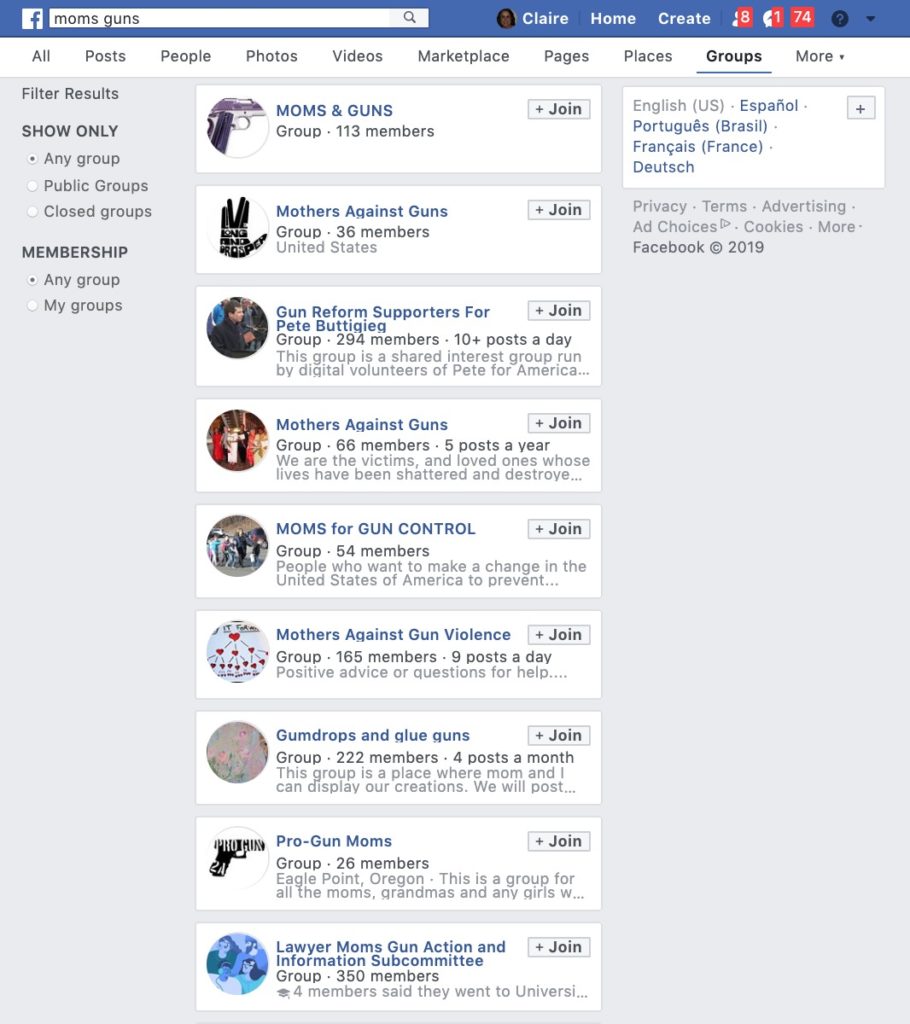

You can search for groups using the Facebook search. Below is a simple search connected to “Moms and Guns.” You can see in the results that Facebook reads “Moms” and pulls out results that include the word “mother.”

You can use Facebook’s native search bar to look for groups about a particular topic. Screenshot by author.

If you need to use more complex search operators, you can search in Google using something like “Moms AND guns” “el paso” site:facebook.com/groups
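If you run such searches often, it can help to build the query string programmatically so that phrases are quoted and the `site:` restriction is applied consistently. This minimal sketch only constructs a Google search URL for you to open in a browser; the function name and its parameters are hypothetical, and it does not scrape any results.

```python
import urllib.parse
from typing import Sequence


def site_search_url(all_of: Sequence[str], exact_phrases: Sequence[str] = (),
                    site: str = "facebook.com/groups") -> str:
    """Build a Google search URL combining keywords, quoted phrases and a site: filter."""
    parts = []
    if all_of:
        parts.append(" AND ".join(all_of))          # keywords joined with AND, as in the example query
    parts.extend(f'"{p}"' for p in exact_phrases)   # exact-match phrases
    parts.append(f"site:{site}")                    # restrict results to one site or path
    return "https://www.google.com/search?" + urllib.parse.urlencode({"q": " ".join(parts)})
```

Calling `site_search_url(["Moms", "guns"], ["el paso"])` produces the example query above. Passing `site="chat.whatsapp.com"` instead gives the kind of search for public WhatsApp group links described later in this guide.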

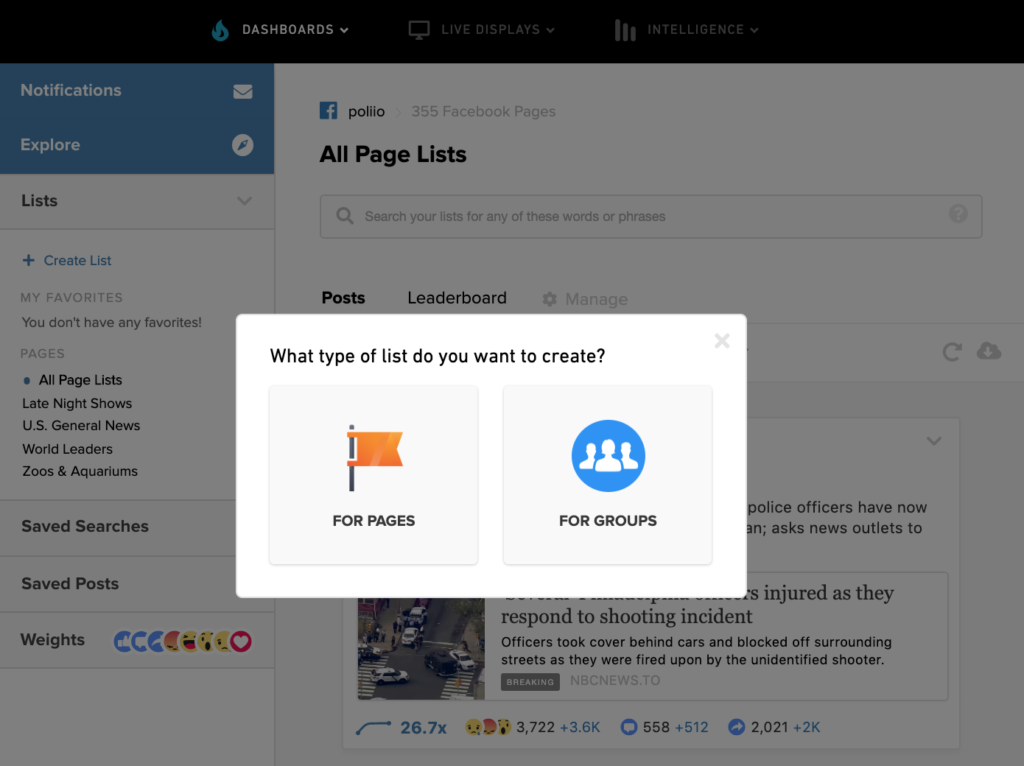

It’s hard to monitor Facebook groups systematically unless you use CrowdTangle, the platform Facebook made free for journalists in 2017.

In CrowdTangle you can set up lists of public Facebook groups and receive regular updates on the more popular posts in them. CrowdTangle does not contain any private information, however, so private or hidden groups are only accessible on the main Facebook platform.

See First Draft’s Essential Guide to Newsgathering and Monitoring on the Social Web for more on this.

You can use CrowdTangle to set up lists of Facebook groups to monitor. Screenshot by author.

Closed messaging apps

The use of closed messaging apps is significant globally. There are over 1.5 billion users of WhatsApp and 1.3 billion users of Facebook Messenger. There are apps that are native to certain countries, like KakaoTalk in Korea, and WeChat in China. There are apps that are more popular in certain countries even though they’re widely available, like Telegram in Iran, Viber in Myanmar and LINE in Japan.

However, the level of encryption differs. For example, WhatsApp is encrypted by default, whereas Telegram provides end-to-end encryption for voice calls and optional end-to-end encrypted “secret” chats between two online users, but not yet for groups or channels.

If you really want to keep yourself and your sources protected, Signal is the app most security specialists recommend for journalists, as it includes encryption and the option for messages to self-destruct after a designated period of time.

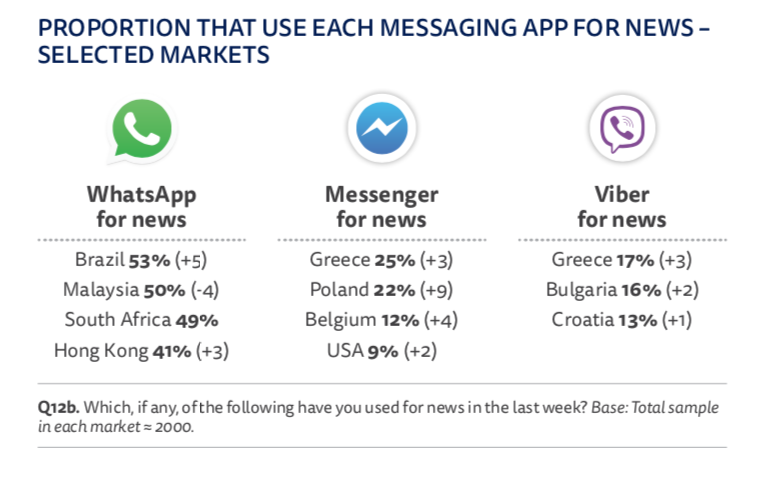

It’s easy to dismiss closed messaging apps as just another form of SMS, but it’s important to understand these spaces are used in very different ways by different communities. For example, the 2019 Reuters Institute Digital News Report found that approximately half of respondents in places like Brazil, Malaysia and South Africa use WhatsApp to consume news.

Popularity of closed messaging apps around the world. Reuters Institute for the Study of Journalism.

WhatsApp is the most popular messaging app globally. With 1.5 billion users, Facebook-owned WhatsApp is already the main messaging app in countries including Spain, Brazil and India. Its group chat function has revolutionized mobile communication and quickly became one of the most popular tools for exchanging information around protests, events and elections.

The closed nature of these groups along with WhatsApp’s end-to-end encryption has thwarted many efforts by journalists and researchers to monitor the messaging service. However, in the past few years, several ways of tracking the service have emerged.

You can manually look for public WhatsApp groups on platforms like Google, Facebook, Twitter and Reddit, using the search term chat.whatsapp.com. You can join each group and monitor them individually. How you use information gleaned from these groups requires ethical considerations (see below).
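When you collect posts or search results that mention WhatsApp groups, a regular expression can pull out the invite codes so that each group is reviewed only once. The sketch assumes invite links take the form `chat.whatsapp.com/<code>`, optionally with an older `invite/` segment; that pattern is an assumption about the link format, not a documented specification. It only extracts links from text you already have. Deciding whether to join a group remains a manual, ethical judgment.

```python
import re

# Assumed link shape: chat.whatsapp.com/<code>, optionally with an "invite/" segment.
INVITE_RE = re.compile(r"(?:https?://)?chat\.whatsapp\.com/(?:invite/)?([A-Za-z0-9]+)")


def extract_invite_codes(text: str) -> list[str]:
    """Return unique invite codes in order of first appearance."""
    seen: dict[str, None] = {}  # dicts preserve insertion order, so this dedupes in order
    for code in INVITE_RE.findall(text):
        seen.setdefault(code, None)
    return list(seen)
```

Keeping the codes rather than the full URLs makes it easy to spot the same group shared with different link prefixes.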

There is a way to computationally monitor these groups, but it breaks WhatsApp’s Terms of Service. You can scrape the web for publicly open WhatsApp groups related to your country or your beat. This technique has been used mainly by researchers and started debates about the ethics of this approach.

The scraping and decryption methods outlined here raise serious ethical questions, specifically around privacy violations. You and your organization should consider these questions with immense care, thought and planning before using these techniques.

The simplest way of monitoring and researching WhatsApp for particular information is to establish a tip line around particular topics, with messages sent to a phone in the newsroom. Depending on the volume of tips, you might consider integrating it with Zendesk, a paid service that gives you more flexibility in how you organize them.

In Comprova, First Draft’s collaborative journalism project around the 2018 Brazilian election, organizers created a central tip line 12 weeks ahead of polling day, and the project received over 100,000 messages from the public.
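With that volume of submissions, grouping identical or near-identical forwards helps an editor see which rumors are spreading most widely. The following sketch is a hypothetical illustration, not Comprova’s actual workflow: it normalizes each message (Unicode normalization, case folding, removing punctuation and emoji, collapsing whitespace) and counts repeats. It is deliberately crude, so messages that differ by more than formatting will not be grouped together.

```python
import re
import unicodedata
from collections import Counter


def normalize(message: str) -> str:
    """Crude normalization so lightly reformatted forwards of the same text match."""
    text = unicodedata.normalize("NFKC", message).casefold()
    text = re.sub(r"[^\w\s]", " ", text)  # drop punctuation and emoji
    return re.sub(r"\s+", " ", text).strip()


def top_tips(messages: list[str], n: int = 5) -> list[tuple[str, int]]:
    """Return the n most frequently received normalized messages with their counts."""
    normalized = (normalize(m) for m in messages)
    return Counter(t for t in normalized if t).most_common(n)
```

Exact matching after normalization is a simple starting point; fuzzier grouping would need a similarity measure, which this sketch does not attempt.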

Telegram

Telegram has similar functionality to WhatsApp, with encrypted one-to-one chats and groups. WhatsApp limits groups to 256 members and Telegram’s basic groups hold 200, but Telegram supergroups can hold 100,000 people.

The main difference from WhatsApp is that Telegram also has a functionality called channels, which allow a person or organization to “broadcast” to an unlimited number of subscribers.

Telegram has gained a reputation as a favored messaging platform of extremists. Once a home for supporters of so-called Islamic State, it has also seen an influx of other extremists as the major platforms crack down on activity that breaches their community guidelines.

Discord

Discord is a real-time messaging app popular with gamers. Over the last couple of years, however, it has developed a reputation as a hub of conversation about political and social issues. During #MacronLeaks it was possible to find people talking about tactics and techniques (as outlined in this post by Ben Decker and Padraic Ryan at the time). Discord communities are also connected to anonymous forums like Reddit and 4chan, where you can find short links to Discord servers.

The service is organized by servers, also known as guilds. During the lead-up to the 2018 US midterms it was possible to follow conversations where people were coordinating in servers around particular campaigns or candidates.

In his 2018 guide for journalists reporting from closed and semi-closed spaces, former BBC social media editor Mark Frankel describes Discord this way:

“For those less comfortable about talking in a fully open or public online forum, Discord provides an alternative outlet. Through my daily searches in different servers, I found that many individuals would share links to documents I hadn’t seen on public websites and spoke freely about a number of subjects, from the Trump administration’s attitude to child migrants to Supreme Court judgments and local gubernatorial races. In many ways, the platform hearkens back to those early days of the social web where largely anonymous groups hung out on MySpace, AOL or Yahoo.”

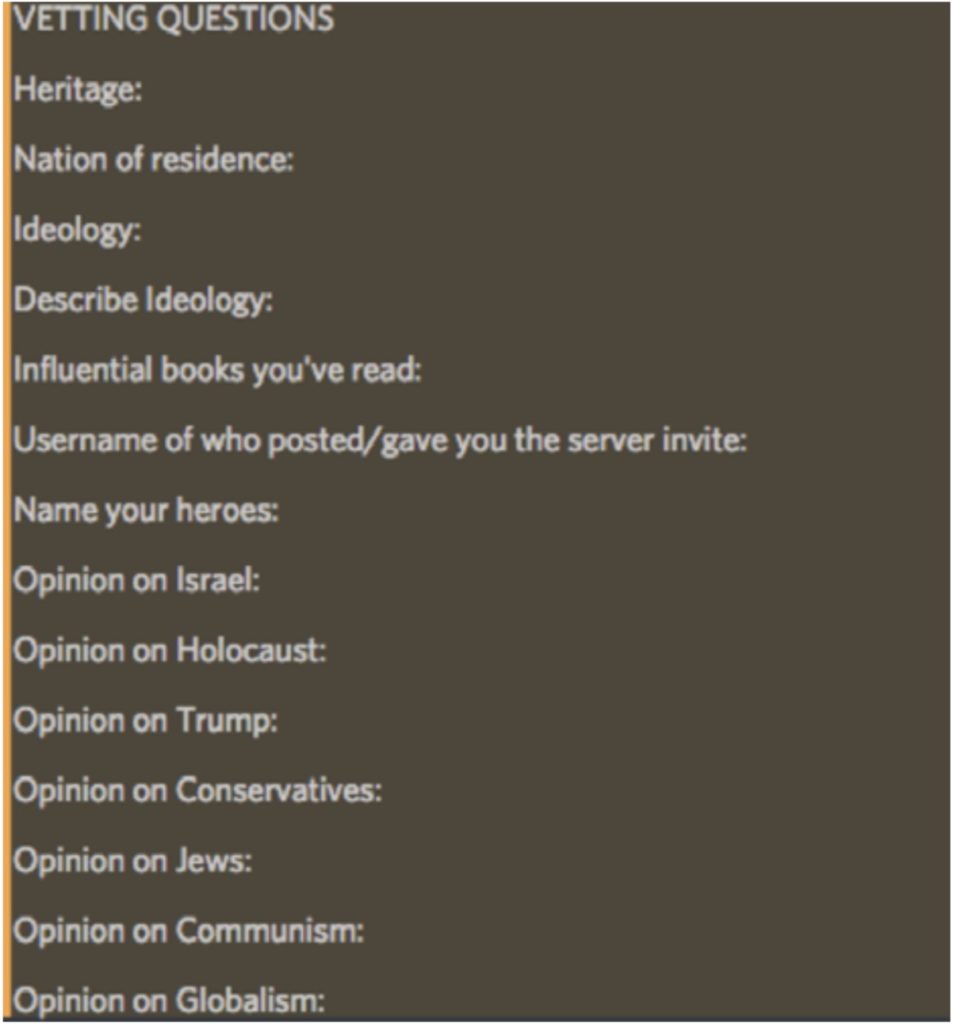

Some servers are open and anyone can join. Others require you to “prove” your identity by linking to other digital profiles and will ask questions before letting you in, similar to some Facebook groups.

A screenshot included in Frankel’s guide gives you an example of one of these vetting questionnaires.

Vetting questions on Discord. Screenshot by Mark Frankel for Nieman Lab.

We recommend that journalists who want to spend time on Discord use a VPN. We would also recommend having a conversation with your editor ahead of time about some of the challenges posed by being on the platform. While it is possible to lurk in these spaces, it’s necessary to think about the potential repercussions of publishing information sourced from these closed apps. Please see the ethical considerations section at the end of this guide for more.

Two guides you may find useful are “Secure Your Chats” from Net Alert and 2015’s Guide to Chat Apps by Trushar Barot and Eytan Oren.

Ethical considerations

Whether you are looking to understand the ideologies of potentially hostile groups or to write a human interest story about a traditionally under-covered community, entering into closed groups and messaging apps presents ethical, security and possibly even legal challenges.

This ABC Australia write-up on the experience of a woman whose comment in a private Facebook group was amplified by the media is well worth a read, as a reminder of how small decisions by journalists can have a massive impact on those whose words are used.

Before you start your reporting, carefully go over your organization’s existing policy about newsgathering in closed online spaces with your editor, the ethics and standards department and the legal team. If your newsroom does not have such a policy, consult with these same parties about the best way to go about your newsgathering.

The first question to ask:

- Are there ways of obtaining the information you are seeking without entering into closed online spaces?

Privacy vs. public interest

If the answer is no, we recommend moving on to the following questions. They weigh privacy and potential harm against public interest, and encourage you to think about proportionality:

- What do you hope to obtain from joining this group: sources, tips or background knowledge to inform your reporting? Or is the existence and content of the group itself the focus of your intended story?

- Is this a group that would expect lurkers? Would members reasonably expect conversations and other content from these groups to be made public?

- What is the size of each closed group you are planning on entering, and how does that affect the expectation of privacy for each group?

- Would your writing a story expose group members to negative consequences?

- What is the public interest in your potential story?

- Are you planning on entering multiple groups? What is the minimum number of closed spaces you can enter into to find the information you need?

Transparency vs security

Next, consider whether you will use your true identity when entering the closed group, and whether you will affirmatively disclose your identity or merely refrain from concealing it.

Making these decisions responsibly requires both an understanding of the group you are entering and an understanding of your own identity in relation to the group, weighing transparency against security:

- What is the purpose of this group? Is the group likely to be hostile, and how would group members react to a reporter in their midst? Entering a closed group that advocates extremist ideologies, for example, may lead to a different disclosure decision than entering a WhatsApp conversation consisting of local parents or a group of employees looking to unionize.

- Is your presence in the group, using your real identity, likely to draw unwanted attention? Journalists of color and women, for example, may face additional security concerns when entering into certain potentially hostile groups, which may lead to a different disclosure decision.

- If you decide to enter the group using your real identity, to whom will you disclose this information? Will you disclose it to the group administrator, or to the whole group?

- When will you disclose your identity? Will you disclose it when you first enter the group, when you find something useful in the group you’d like to include in your reporting, when you have completed newsgathering, or when your story is published?

- Will you also disclose your reasons for being in this group?

- If the group requires you to answer certain questions before admission, will you answer these questions honestly?

Further consideration

Additionally, consider before embarking on this kind of reporting:

- Whether there are explicit confidentiality clauses in the community guidelines of the groups.

- How you are going to describe the methods of newsgathering in the resulting story.

- Whether you will go back into the group after the story’s publication and share the information you have learned.

Whether you use your true identity or an alias, it is absolutely critical to discuss and implement digital security measures, particularly when newsgathering in potentially hostile communities. The Committee to Protect Journalists’ Digital Safety Kit may be a useful starting point.

For an in-depth look into the ethical questions around entering non-hostile communities, we recommend Mark Frankel’s piece on the promises and pitfalls of reporting within chat apps and other semi-open platforms.

Conclusion

Information is moving into the dark, and we are now seeing a vast amount of information disorder in closed and semi-closed spaces. As monitoring capabilities grow in sophistication, those trying to spread disinformation will migrate to places where their tactics are harder to find and track.

Monitoring these spaces will be labor-intensive and require journalists to spend time locating and observing these places. It will also necessitate an industrywide discussion about the ethics of this type of work.

Tip lines are one recommended approach to monitoring closed online spaces. This method requires newsrooms to build trust with their communities, and specifically audiences that are more likely to be targeted by coordinated campaigns of disinformation and voter suppression. During the lead-up to elections, it’s vital that newsrooms think about ways to partner with community and grassroots groups, religious groups and libraries, in order to track what these communities see in Facebook ads, Facebook groups, WhatsApp and Messenger groups.

Just as newsrooms had to prepare for the age of social media, when tips, stories and sources suddenly became available in real time, we now have to prepare for the next era, when poor-quality information (rumors, hoaxes and conspiracies) drops out of sight, away from those who can rebut and debunk it.

This article is the most up-to-date version of the Essential Guide. Last updated November 2019.

About the authors

Carlotta Dotto is a research reporter at First Draft, specializing in data-led investigations into global information disorder and coordinated networks of amplification. She previously worked with The Times’ data team and la Repubblica’s Visual Lab, and has written for a number of publications including The Guardian, the BBC and New Internationalist.

Rory Smith is research manager at First Draft, where he researches and writes about information disorder. Before joining First Draft, Rory worked for CNN, Vox, Vice and Truthout, covering topics from immigration and food policy to politics and organized crime.

Claire Wardle currently leads the strategic direction and research for First Draft. In 2017 she co-authored the seminal report Information Disorder: An Interdisciplinary Framework for Research and Policy for the Council of Europe. Prior to that she was a Fellow at the Shorenstein Center for Media, Politics and Public Policy at Harvard’s Kennedy School, Research Director at the Tow Center for Digital Journalism at Columbia University Graduate School of Journalism, and head of social media for the United Nations Refugee Agency. She was also the project lead for the BBC Academy in 2009, where she designed a comprehensive training program for social media verification for BBC News that was rolled out across the organization. She holds a PhD in Communication from the University of Pennsylvania.