As the network of conspiracy theories coalescing under the QAnon umbrella became increasingly visible this year, social networks have tightened their moderation policies to curtail QAnon supporters’ ability to organize. Promoters of QAnon-linked conspiracy theories have adapted to use visuals and careful wording to evade moderators, posing a detection challenge for platforms.

In July, citing the potential offline harm that online QAnon activity could cause, Twitter pledged to permanently suspend accounts that tweeted about QAnon and violated the platform’s multi-account, abuse or suspension evasion policies. Facebook updated its policies in August to include QAnon under its Dangerous Individuals and Organizations policy, banning Groups, Pages and Instagram accounts it deemed “tied to” QAnon. In October, YouTube announced its own crackdown on conspiracy theory material that is used to justify violence, such as QAnon content, while Facebook committed to restricting the spread of the “save our children” hashtag used in conjunction with QAnon content.

The platforms’ measures appear to have significantly limited the reach of QAnon-promoting accounts, Pages and Groups. Shortly after its July announcement, Twitter reportedly suspended at least 7,000 accounts, while Facebook stated in October that it had removed around 1,700 Pages, 5,600 Groups and 18,700 Instagram accounts. Ever-changing tactics, however, mean QAnon content persists on the platforms through memes, screenshots, videos and other methods.

Memes galore

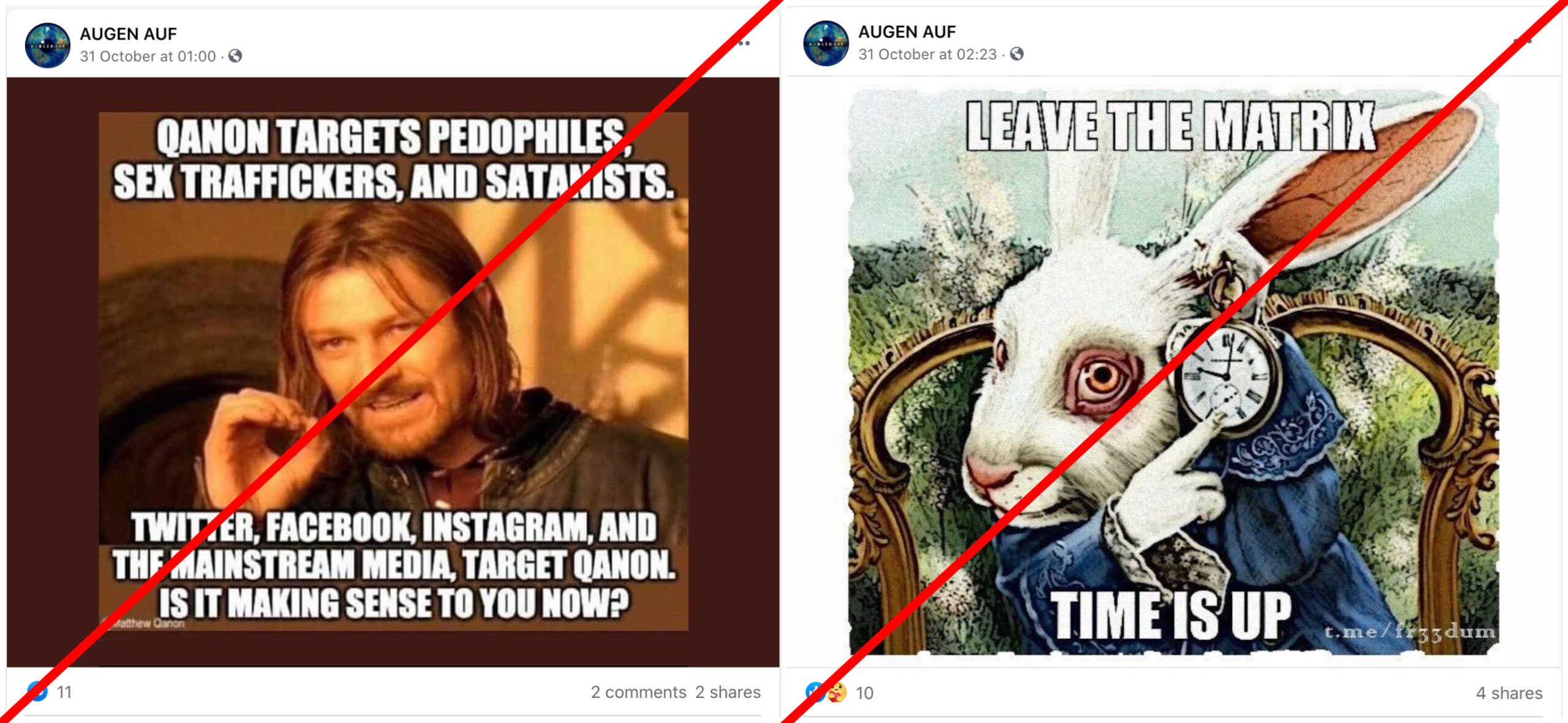

German Facebook Page “AUGEN AUF” (“eyes open”), which has more than 61,000 followers, posts conspiracy theory content, including memes that contain QAnon claims or are reshared from other Pages or accounts that frequently post QAnon material. In one post, the Page shared an image of a white rabbit with the text “leave the matrix, time is up.” The image bears the name of a German-language Telegram channel that frequently shares QAnon content. In another post, a “Lord of the Rings” meme attacks platforms’ bans on the conspiracy theory.

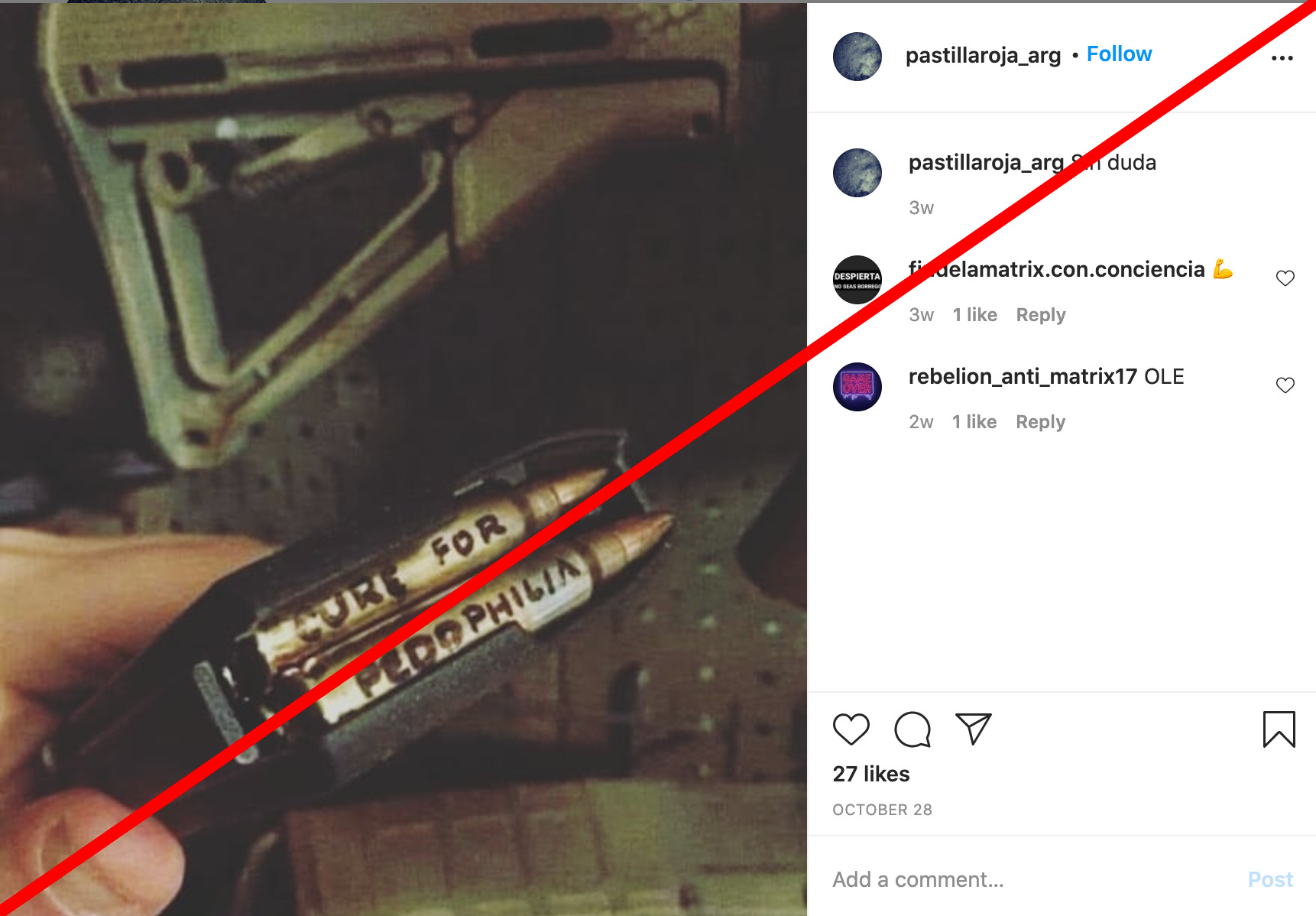

On Instagram, a Spanish-language account whose bio mentions the “New World Order” spreads QAnon visuals, including contradictory references to violence. One picture shows “cure for pedophilia” written on two bullets in a rifle magazine, while another image, which directly supports the QAnon movement, features text explicitly denying that the movement supports violence and ends with the QAnon-associated phrase “WWG1WGA” (“Where we go one we go all”).

Accounts posting QAnon memes and other visuals often do not include captions with the images, making these posts difficult to surface with a text-based search for QAnon keywords alone. Facebook has introduced an image option to its CrowdTangle Search tool, making it possible to search for keywords and phrases within images that have been posted to public Pages and Groups. Asked how the platform screens images as part of its detection efforts, a Facebook spokesperson told First Draft that it uses a combination of AI and human review across text, image and video posts to enforce its policies.

Screenshots of banned accounts, Pages and Groups

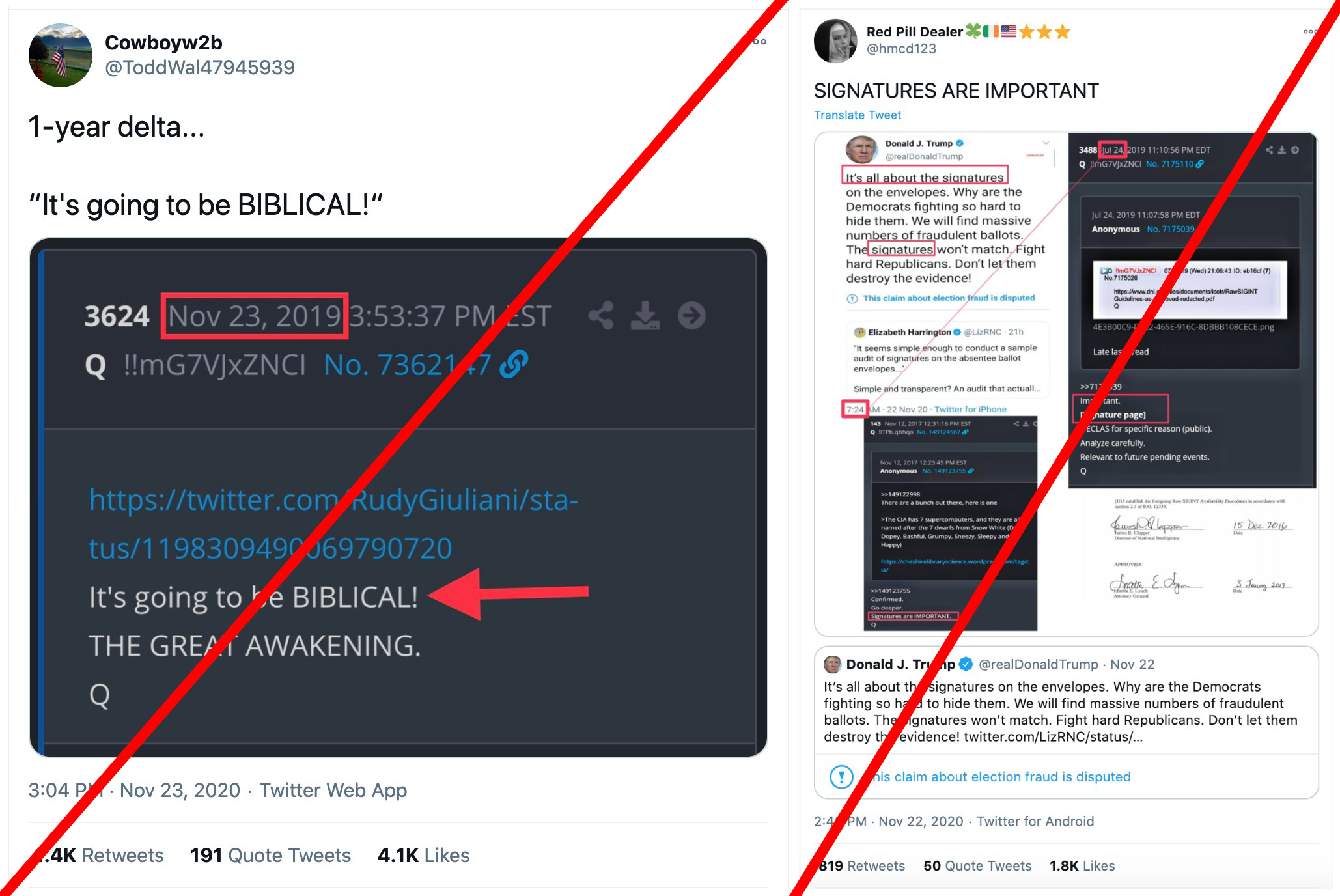

On Twitter, accounts that continue to post QAnon visuals despite the platform’s crackdown on the community appear to be maintaining their influence and attracting high levels of engagement from other users. Posts allegedly from “Q,” taken from the fringe message board 8kun, have been shared on the platform as screenshots, with some attracting thousands of likes and shares.

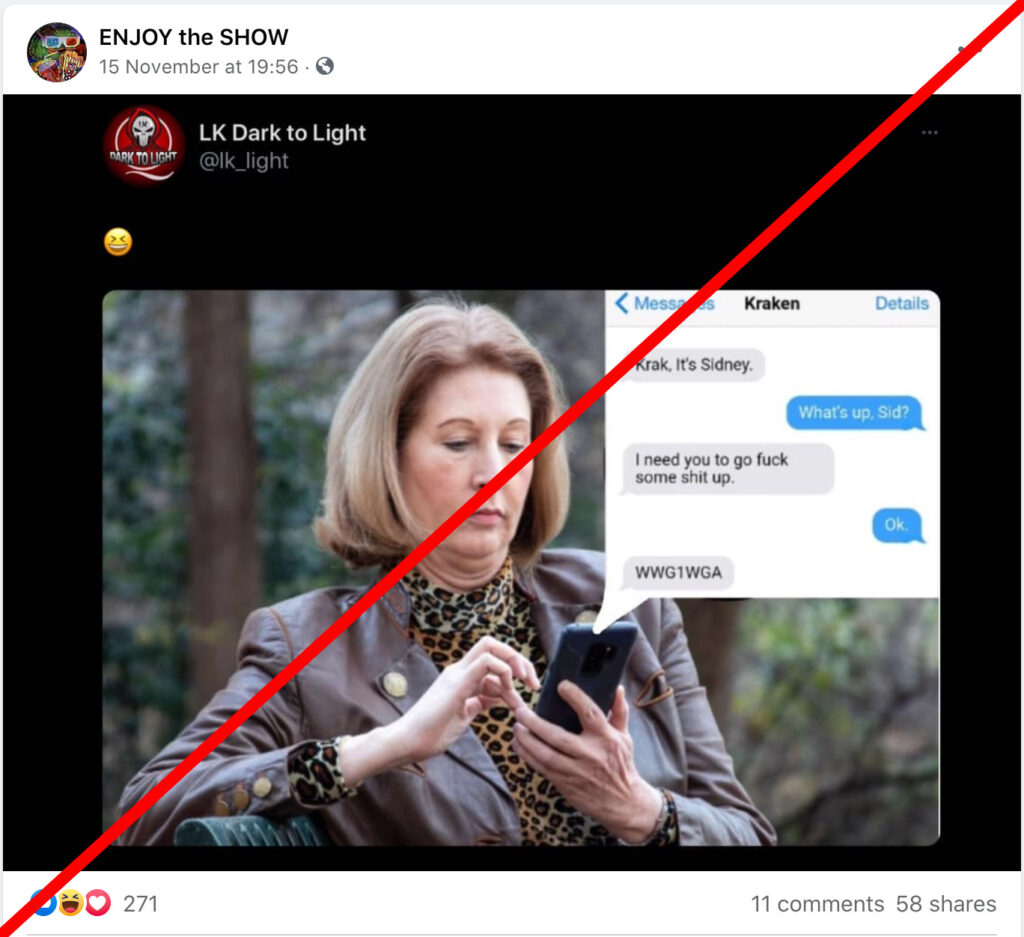

Even after Facebook’s mass takedown of Pages “representing” or “tied to” QAnon, content from removed Pages has a way of reappearing in screenshots posted to still-active Pages. The Facebook Page “ENJOY THE SHOW,” which has more than 12,600 followers and regularly posts pro-Trump memes and QAnon content, recently uploaded a screenshot of the removed Page “LK Dark To Light” sharing a QAnon meme.

Videos

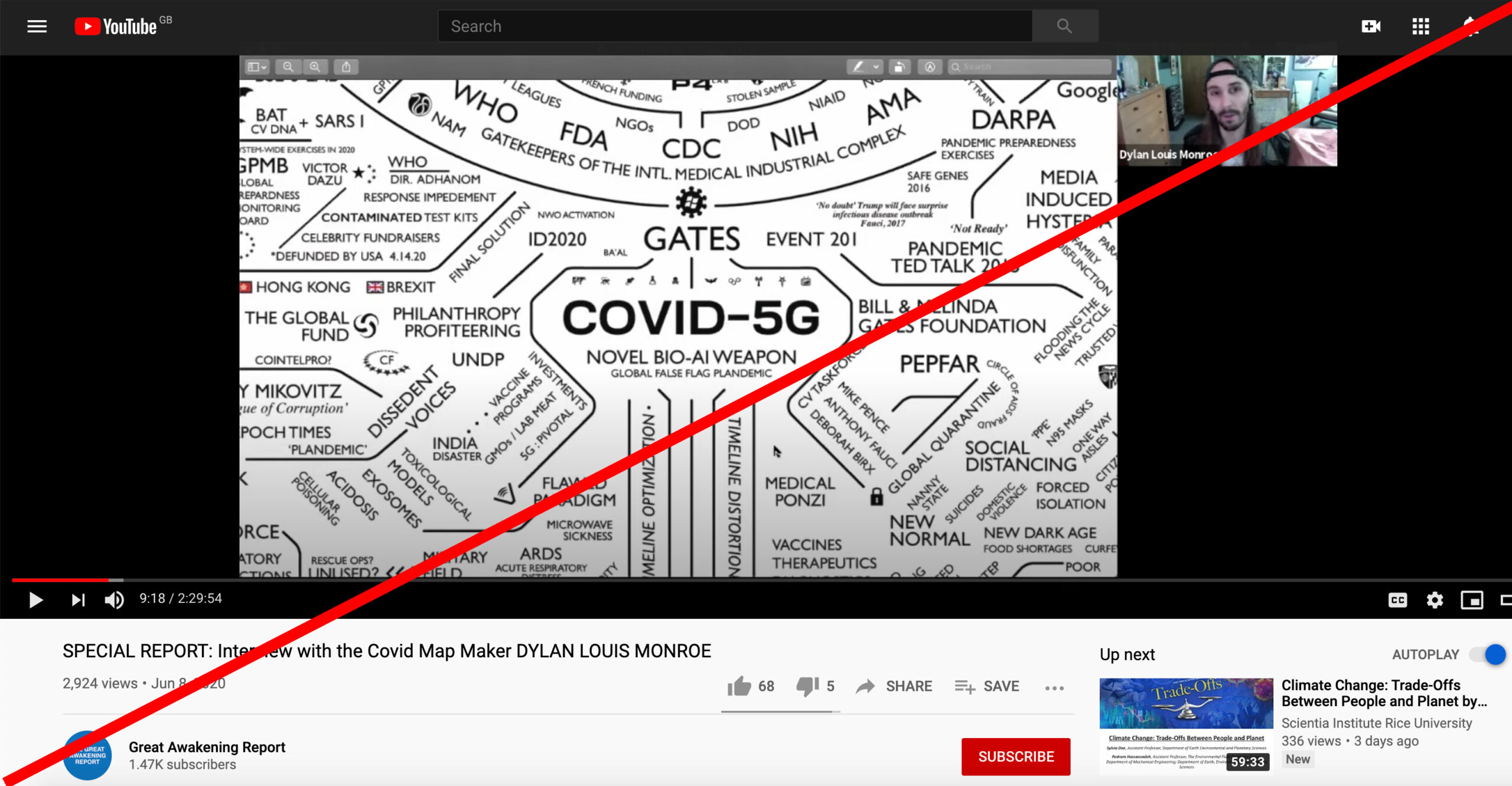

Videos are another method through which QAnon supporters continue to spread their claims. On YouTube, First Draft found videos that contain claims supporting the conspiracy theory behind seemingly innocuous titles and descriptions. The Great Awakening Report YouTube channel, for example, makes little mention of QAnon in its video titles, but its videos routinely promote QAnon claims.

One clip featuring an interview with Dylan Louis Monroe, an artist and administrator of the Deep State Mapping Project (whose Instagram account has been removed), contains a deluge of false assertions and conspiracy theories around QAnon, 5G and the coronavirus, incorporating images of “conspiracy theory maps.” Monroe also regularly posts QAnon conspiracy theories via his Twitter account.

Key letters and phrases

Aside from visuals, some supporters have evaded moderation by using key letters and phrases. Some Facebook Groups and Pages subtly weave the letter “Q” into otherwise generic phrases or words to signal support for the conspiracy theory network. For example, the handle of the “ENJOY THE SHOW” Facebook Page is @ENJQY1, with a “Q” in place of the “O” in “enjoy.”

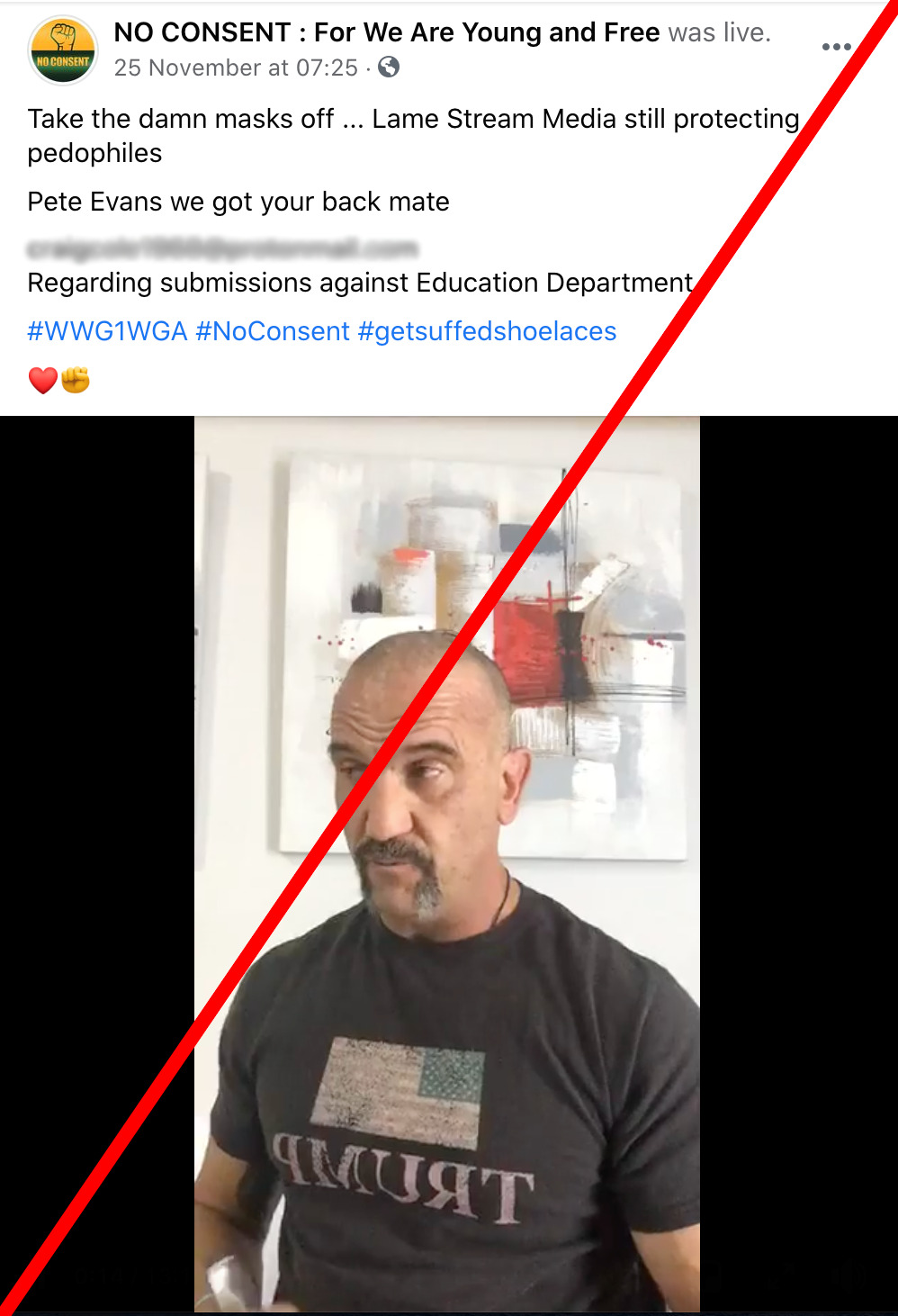

Other members of the community have pledged to avoid “Q” altogether, instead opting for keywords such as “17” or “17anon” (“Q” being the 17th letter of the alphabet). One Australian Facebook Page with 10,000 followers posts videos promoting QAnon ideas and includes “WWG1WGA” in nearly every post.

Facing the next challenge

Many of the major social platforms were slow to quash QAnon in its infancy, allowing it to gain a large online following, cross-pollinate with other conspiracy theory communities and spread across borders. (One exception may be Reddit, which banned the QAnon subreddit two years ago, a possible explanation for QAnon’s lack of meaningful presence on the platform.)

Platforms such as Twitter, Facebook and YouTube have since realized the potential harm posed by QAnon-promoting accounts and moved to limit their organizational capabilities. Users searching for overtly “QAnon” Groups, Pages, accounts and hashtags in order to find like-minded Q supporters will find that this is no longer as easy as it was several months ago.

But in the ongoing cat-and-mouse game, the next frontier in QAnon moderation may require further investment in detecting QAnon-related images, videos and keywords. Visuals in particular may pose detection challenges at scale, and while Facebook has indicated that its moderation efforts extend to images and video, it has not released details on how these efforts impact visual content specifically. First Draft also reached out to Twitter and YouTube for comment, but has not yet received a response.

Stamping out QAnon content on these sites entirely may now be impossible, as influencers within the community have demonstrated that they can adapt to find new ways onto people’s feeds. But platforms can attempt to limit their impact by tracking evolving tactics closely and anticipating new ones.
