About this course

Back in March, at the start of the pandemic, we created ‘Covering coronavirus: An online course for journalists’ to help journalists reporting on Covid-19 to navigate the emerging infodemic. A lot has changed since then, but the fundamentals of the challenge remain the same, and for any journalist working in this age of increased polarization and disinformation, there are some things you just need to know.

We know that during critical moments such as these, time is short. So we have boiled down the fundamentals and key takeaways from that full-length course and created an abridged version that’s short enough to read through in a lunch break or two. Consider this your essential guide to reporting online.

The course is designed around three topics, with select learning modules made up of text articles and videos contained within each:

1) MONITORING: Whether you’re looking to find accurate information about the virus, trying to track the latest conspiracies and hoaxes, or searching for people’s personal experiences, this section will help you keep up with the conversation.

2) REPORTING: This section will explain the importance of deciding when to report (and not to report), give advice on wording headlines and show you why filling ‘data voids’ is important in answering the questions posed by your audience.

3) VERIFICATION: This section is designed to help you brush up your skills on verifying images and investigating someone’s digital footprint. Check out our case study that walks you through how we verified a video we found on social media.

There is also a glossary and reading list which you can turn to at any point in the course.

We hope you find this fundamentals course to be a valuable guide to producing credible coverage during the Coronavirus crisis and beyond.

Monitoring

Whether you’re looking to find accurate information about the virus, trying to track the latest conspiracies and hoaxes, or searching for people’s personal experiences, monitoring lets you keep up with the conversation. Using social listening platforms, you can set up searches in advance and track online stories over time, so you are always one step ahead of the curve.

In this section we’ll help you know what to look for by explaining the common characteristics, templates and patterns of coronavirus misinformation. We’ll also discuss the ethics of monitoring private messaging groups and channels. Finally, we’ll address the need for emotional skepticism while monitoring for coronavirus information.

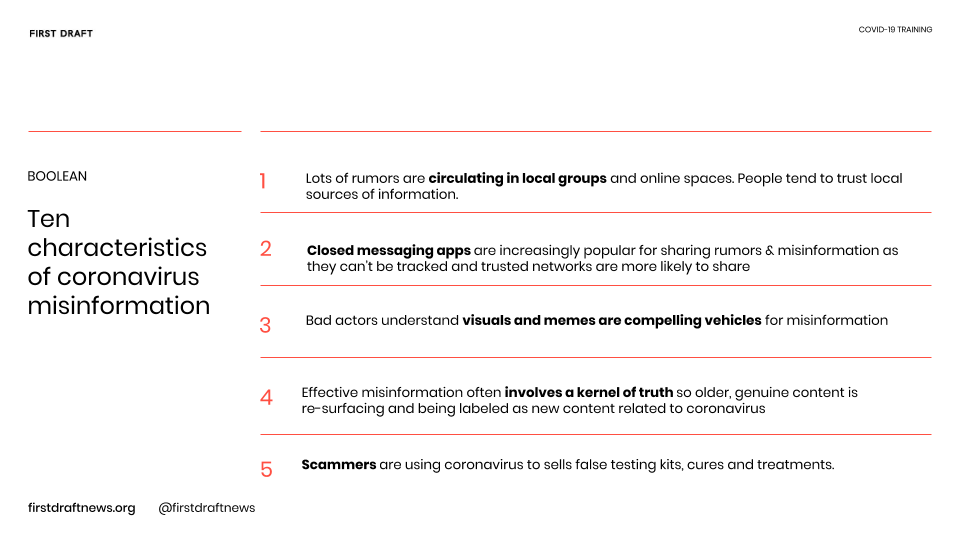

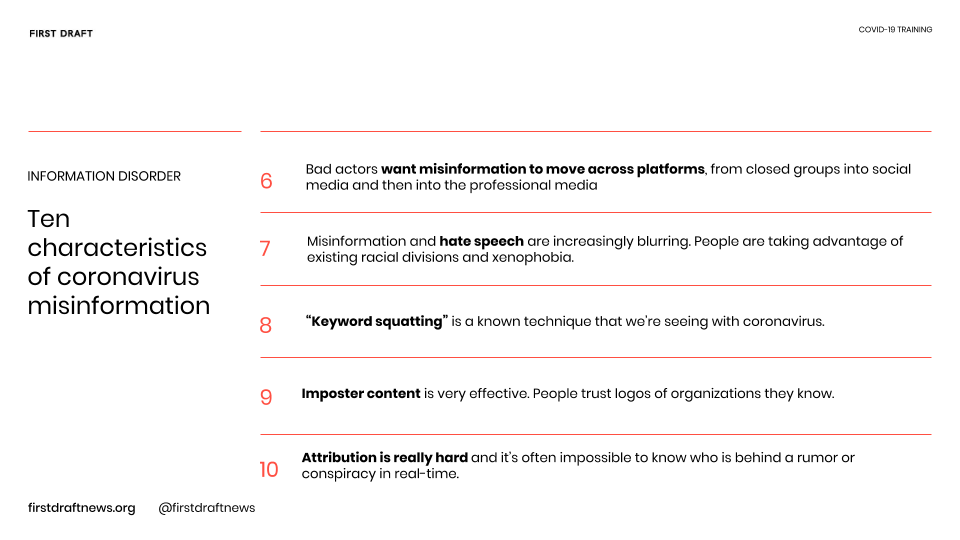

The ten characteristics of coronavirus misinformation

Every rumor or piece of false information is slightly different, but there are certain common characteristics of coronavirus misinformation that we are seeing again and again. So if you’re a reporter, these are some of the things to be on the lookout for. They can also help explain why misinformation travels so quickly.

Spotting patterns and templates

During scheduled events, like elections, we see the same techniques being used. For example, the trick of circulating information with the wrong polling date has been seen in almost every country. Once malicious actors know something works, it’s easier to re-use the idea than to start from scratch and risk failure.

The same has happened with coronavirus. We see the same patterns of stories and the same types of content traveling from country to country as people share information. These become ‘templates’ that are replicated across languages and platforms.

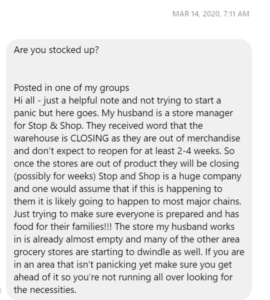

While you can find these ‘template’ examples on all platforms, during this coronavirus pandemic, we’re seeing a lot of these cut and paste templates on closed messaging apps and Facebook groups. It’s therefore hard to get a true sense of the scale of these messages, but anecdotally we’re seeing many people reporting similar wording and images.

The problem is that most of these are anchored in a grain of truth, which helps them spread faster because it’s harder to tell which part of the information is false. Whether it’s scams, hoaxes, or the same video being shared with the same false context, be aware.

Lockdowns

Around the world, concerns spread about imminent military lockdowns, national quarantines and the rolling out of martial law following announcements for various stay-at-home or shelter-in-place orders. Different countries have different rules, and sometimes different cities have passed different ordinances at different times. This adds to the general confusion and can lead people to share information in an attempt to make sure their loved ones are safe and prepared.

For example, several Telegram broadcast channels and groups shared clips of tanks on trains, Humvees, and other military vehicles driving through towns and cities. One video, shared to a popular Telegram channel that has thousands of subscribers, claimed to show the “Military rolling into NYC”. However, the creator of the video says he was at FedExField, which is in Maryland, just outside Washington, D.C.

Around mid-March, we saw the same kind of videos being shared on social media in Ireland and the UK, allegedly showing tanks on the street and road blockages by police and the military. The videos were, for the most part, older and re-shared online out of context, or they were images from road accidents where the police had arrived. But because they fit into different online narratives of what was happening in other countries, people re-shared them to warn each other of what was possibly on the horizon.

Because people were trying to keep each other safe, there were similar versions of rumors of lockdowns calling for people to make sure they’re stocked up and ready.

These messages left out essential information and capitalized on people’s fears. The reality for many cities in lockdown was that many essential shops were still open, people could go outside to exercise or buy food, and messages calling for people to be prepared only fueled panic shopping.

On Facebook, a viral post from an Italian makeup influencer told two million followers that Americans were in a race to buy weapons in New York and other large metropolitan areas and that she had “fled” the city out of fear for her safety.

As countries and cities around the world took their own measures, we saw the same kind of reaction online over and over again. People were worried about how to stay safe and prepared.

Sometimes the confusion happens in the information vacuum between when people spot things happening around them and the time it takes for governments and institutions to explain what is happening.

Image captions: A social post online reporting the presence of military airplanes at London City Airport on March 25th, 2020. A Twitter post by the UK’s Army in London explaining the presence of military vehicles on the street on March 27th, 2020.

If you see something that seems suspicious, plug it into a search engine. It’s very likely the same content has been debunked by a fact-checker or newsroom in another part of the world. BuzzFeed has a very comprehensive running list of hoaxes and rumors.

The ethics of closed groups

As the pandemic moved across the globe, we saw a great deal of conversation related to the virus moving to private messaging groups and channels. This might be because people feel less comfortable sharing information in public spaces at a time like this, or it might be that people turned to sources they feel they know and trust as fear and panic increased.

One of the consequences of this shift is that rumors circulated in spaces that are impossible to track. Many of these spaces are invite-only, or have end-to-end encryption, so not even the companies know what is being shared there.

But is there a public interest for journalists, fact-checkers, researchers and public health officials to join these spaces to understand what rumors are circulating so they can debunk them? What are the ethics of reporting on material you find in these spaces?

There are ethical, security and even legal challenges to monitoring content in closed spaces, as outlined by First Draft’s Essential Guide to Closed Groups. Here’s a shorter round-up based on some of the key questions journalists should consider:

Do you need to be in a private group to do coronavirus research and reporting?

Try looking for your information in open online spaces such as Facebook Pages before joining a closed group.

What is your intention?

Are you looking for rumors on cures and treatments, sources from a local hospital or leads on state-level responses? What you need for your reporting will determine what sort of information to look for and what channels or groups to join.

How much of your own information will you reveal?

Some Telegram groups want participants, not lurkers, and some Facebook Groups have questions you need to answer before you can join. How much will you share honestly?

How much will you share from these private sources?

People are looking for answers because they are afraid. If the group is asking about lockdowns and national travel bans, and the answers being shared are creating panic and fear, how will you share this publicly? Consider again your intent and if that community has a reasonable expectation of privacy before sharing screenshots, group names and personal identifiers such as usernames. Will publishing or releasing those messages harm the community you’re monitoring?

What’s the size of the closed group you are looking to join?

Facebook, WhatsApp, Discord, WeChat and Telegram have groups and channels of varying sizes. The amount of content being shared depends more on the number of active users than overall numbers. Observe how responsive the group is before joining.

If you are looking to publish a story with the information gathered, will you make your intention known?

Consider how this group may respond to a reporter, and how much identity-revealing information you are willing to publish from the group.

If you do reveal your intention, are you likely to get unwanted attention or abuse?

First Draft’s essential guide on closed message apps shows that journalists of color and women, for example, may face additional security concerns when entering into potentially hostile groups. If you decide to enter the group using your real identity, to whom will you disclose this information? Just the administrator or the whole group?

How much will you share of your newsgathering processes and procedures?

Will revealing your methodology encourage others to enter private groups to get ‘scoops’ on misinformation? Would that negatively impact these communities and drive people to more secretive groups? Will you need to leave the group after publication, or will you continue reporting?

Ultimately, people will search for answers wherever they can find them. As journalists, it’s important that those data voids are filled with facts and up-to-date information from credible sources. The question is how to connect community leaders with quality information, so fewer rumors and falsehoods travel in these closed spaces.

The importance of emotional skepticism

People like to feel connected to a ‘tribe’. That tribe could be made up of members of the same political party, parents that don’t vaccinate their children, activists concerned about climate change, or those belonging to a certain religion, race or ethnic group. Online, people tend to behave in accordance with their tribe’s identity, and emotion plays a big part in that, particularly when it comes to sharing.

Neuroscientists know that we are more likely to remember information that appeals to our emotions: stories that make us angry, sad or scared, or that make us laugh. Social psychologists are now running more experiments to test this question of emotion, and it seems that heightened emotionality is predictive of an increased belief in false information. You could argue that the whole planet is currently experiencing ‘heightened emotionality’.

False and misleading stories spread like wildfire because people share them. Lies can be very emotionally compelling. They also tend to be grounded in truth, rather than entirely made up. We are increasingly seeing the weaponization of context: the use of genuine content, but of a type that is warped and reframed. Anything with a kernel of truth is far more successful in terms of persuading and engaging people.

In February, an Australian couple was quarantined on a cruise ship off the coast of Japan. They developed a following on Facebook for their regular updates, and one day claimed they ordered wine using a drone. Journalists started reporting on the story, and people shared it. The couple later admitted that they posted it as a joke for their friends.

This might seem trivial, but mindless resharing of false claims can undermine trust overall. And for journalists and newsrooms, if readers can’t trust you on the small stuff, how can they trust you on the big stuff? So a degree of emotional skepticism is critical. It doesn’t matter how well trained you are in verification or digital literacy, and it doesn’t matter whether you sit on the left or right of the political spectrum. Humans are susceptible to misinformation. During a time of increased fear and uncertainty, no one is immune, which is why an awareness of how a piece of information makes you feel is the most important lesson to remember. If a claim makes you want to scream, shout, cry, or buy something immediately, it’s worth taking a breath.

Reporting

Reporting on misinformation can be tricky. Just because you can cover a story, doesn’t always mean you should. Unfortunately, even well-intentioned reporting can add fuel to the fire and bring greater exposure to a story that might have otherwise faded away.

This section will explain the importance of tipping points in deciding when to report (and not to report). It will give advice on how to craft headlines carefully and precisely, to avoid amplifying a falsehood, using accusatory language or reducing trust. Finally, we’ll show you why filling ‘data voids’ is important in answering the questions posed by your audience (and in preventing misinformation from filling that void instead).

Understanding tipping points

When you see mis- and disinformation, your first impulse may be to immediately debunk – expose the falsehood, tell the public what’s going on and explain why it’s untrue. But reporting on misinformation can be tricky. The way our brains work means that we’re very poor at remembering what is true or false. Experimental research shows how easy it is for people to be told something is false, only for them to state it’s true when they’re asked about it at a later point. There are best practices for writing headlines, and for explaining that something is false, which we explore in the next section. Unfortunately, even well-intentioned reporting can add fuel to the fire and bring greater exposure to content that might have otherwise faded away. Understanding the tipping point is crucial in knowing when to debunk.

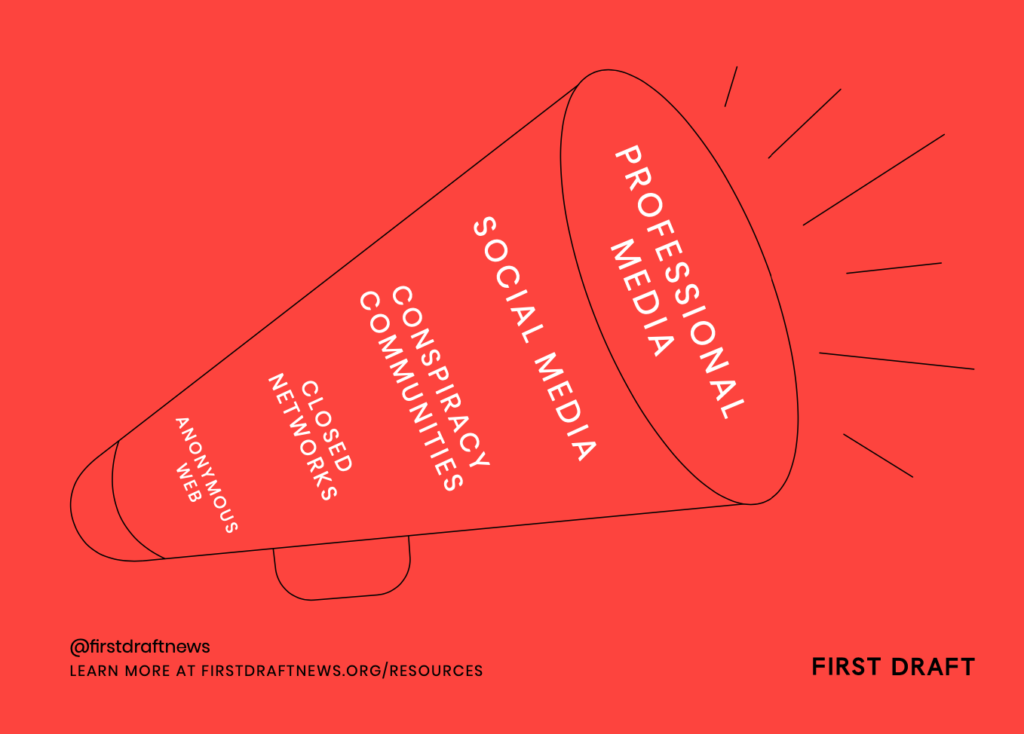

The Trumpet of Amplification

The Trumpet of Amplification illustration is a visual reminder of the way false content can travel across different social platforms. It can make its way from anonymous message boards like 4chan through private messaging channels on Telegram, WhatsApp and Twitter. It can then spread to niche communities in spaces like Reddit and YouTube, and then onto the most popular social media platforms. From there, it can get picked up by journalists who provide additional oxygen either by debunking or reporting false information.

Syracuse University professor Whitney Phillips has also written about how to cover problematic information online. Her 2018 Data & Society report, The Oxygen of Amplification: Better Practices for Reporting on Extremists, Antagonists, and Manipulators Online, explains: As Alice Marwick and Rebecca Lewis noted in their 2017 report, Media Manipulation and Disinformation Online, for “manipulators, it doesn’t matter if the media is reporting on a story in order to debunk or dismiss it; the important thing is getting it covered in the first place.”

“It is problematic enough when everyday citizens help spread false, malicious, or manipulative information across social media. It is infinitely more problematic when journalists, whose work can reach millions, do the same.”

The tipping point

In all scenarios, there is no perfect way to do things. The mere act of reporting always carries the risk of amplification and newsrooms must balance the public interest in the story against the possible consequences of coverage.

Our work suggests there is a tipping point when it comes to covering disinformation.

| Reporting too early… | Reporting too late… |
|---|---|
| can boost rumors or misleading content that might otherwise fade away. | means the falsehood takes hold and can’t be stopped – it becomes a “zombie rumor”, that just won’t die. |

Some key things to remember about tipping points:

- There is no one single tipping point – The tipping point can be measured when content moves out of a niche community and starts moving quickly on one platform, or crosses onto others. The more time you spend monitoring disinformation, the clearer the tipping point becomes – which is another reason for newsrooms to take disinformation seriously.

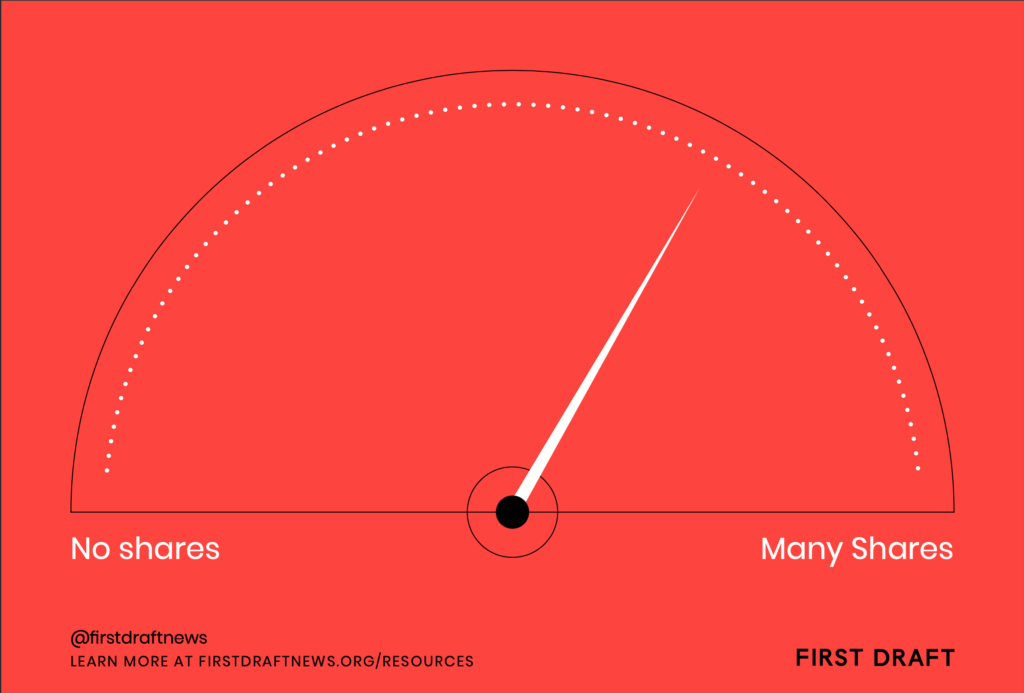

- Consider the spread – This is the number of people who have seen or interacted with a piece of content. It can be tough to quantify with the data available, which is usually just shares, likes, retweets, views, or comments. But it is important to try. Even small or niche communities can seem more significant online. If a piece of content has very low engagement, it might not be worth verifying or writing about.

- Collaboration is key – Figuring out the tipping point can be tough, so it could be a chance for informal collaboration. Different newsrooms can compare concerns about coverage decisions. Too often journalists report on rumors for fear they will be ‘scooped’ by competitors – which is exactly what agents of disinformation want.

Some useful questions to help determine the tipping point:

- How much engagement is the content getting?

- Is the content moving from one community to another?

- Is the content moving across platforms?

- Did an influencer share it?

- Are other journalists and news media writing about it?

Determining the tipping point is not an exact science, but the key thing is to pause and consider the questions above before debunking or exposing a story.
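For newsrooms that like to keep structured notes, the questions above could be recorded as a simple checklist. The following is a minimal Python sketch, not a First Draft tool: the class name, the field names and the threshold of three "yes" answers are all hypothetical choices made for illustration, and the output is a prompt for an editorial conversation rather than a decision.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class TippingPointCheck:
    """Yes/no answers to the tipping-point questions for one piece of content."""
    high_engagement: bool        # How much engagement is the content getting?
    crossing_communities: bool   # Is it moving from one community to another?
    crossing_platforms: bool     # Is it moving across platforms?
    shared_by_influencer: bool   # Did an influencer share it?
    covered_by_media: bool       # Are other journalists and news media writing about it?


def signals_present(check: TippingPointCheck) -> List[str]:
    """Return the names of the questions that were answered 'yes'."""
    return [f.name for f in fields(check) if getattr(check, f.name)]


def suggest_next_step(check: TippingPointCheck, threshold: int = 3) -> str:
    """Hypothetical rule of thumb; the threshold is an assumption, not a standard."""
    count = len(signals_present(check))
    if count == 0:
        return "keep monitoring"
    if count < threshold:
        return "discuss with editors"
    return "consider reporting"


# Example: content with high engagement that has started crossing platforms.
check = TippingPointCheck(True, False, True, False, False)
print(signals_present(check), suggest_next_step(check))
```

Writing the answers down this way mainly makes it easier to compare notes with colleagues or other newsrooms; the judgment about public interest still sits with the people involved.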

The importance of headlines

Headlines are incredibly important as they are often the only text from the article that readers see. In a 2016 study, computer scientists at Columbia University and the Microsoft Research-Inria Joint Centre estimated that 59% of links mentioned on Twitter are not clicked at all, confirming that people share articles without reading them first.

Newsrooms reporting on disinformation should craft headlines carefully and precisely to avoid amplifying the falsehood, using accusatory language or reducing trust.

Here we will share some best practice for writing headlines when reporting on misinformation, based on the Debunking Handbook by the psychologists John Cook and Stephan Lewandowsky and his colleagues.

| Problem | Solution |
|---|---|
| Familiarity Backfire Effect: By repeating falsehoods in order to correct them, debunks can make falsehoods more familiar and thus more likely to be accepted as true. | Focus on the facts: Avoid repeating a falsehood unnecessarily while correcting it. Where possible, warn readers before repeating falsehoods. |
| Overkill Backfire Effect: The easier information is to process, the more likely it is to be accepted. Less detail can be more effective. | Simplify: Make your content easy to process by keeping it simple, short and easy to read. Use graphics to illustrate your points. |
| Worldview Backfire Effect: People process information in biased ways. When debunks threaten a person’s worldview, those most fixed in their views can double down. | Avoid ridicule: Avoid ridicule or derogatory comments. Frame debunks in ways that are less threatening to a person’s worldview. |
| Missing Alternatives: Labeling something as false, but not providing an explanation often leaves people with questions. If a debunker doesn’t answer these questions, people will continue to rely on bad information. | Provide answers: Answer any questions that a debunk might raise. |

Filling data voids

Michael Golebiewski and Danah Boyd of Microsoft Research and Data & Society first used the term “data void” to describe search queries where “the available relevant data is limited, non-existent, or deeply problematic.”

In breaking news situations, write Golebiewski and Boyd, readers run into data voids when “a wave of new searches that have not been previously conducted appears, as people use names, hashtags, or other pieces of information” to find answers.

Newsrooms should think about Covid-19 questions or keywords readers are likely searching for, look to see who is creating content around these questions, and fill data voids with quality content.

This is the counterpart to figuring out what people are searching for using Google Trends. Reverse engineer the process: when people type in questions related to coronavirus, what do they find?

It’s important to figure out what Covid-19 questions readers are asking, and fill data voids with service journalism.

Verification

Verification is the process we have to go through to make sure something online is real, that it’s being used in the right context and that it’s from the time and place it claims. It’s just as important online as it is offline: you wouldn’t just publish a story you heard without checking it out first. We have to check out any material we find on social media regardless of the source, whether it’s a video on TikTok or a rumor on Reddit.

This section is designed to help you brush up your skills on investigating people’s digital footprints and verifying images through reverse image search. We’ll also bring it all to life with a case study that walks you through how we verified a video we found on social media.

A verification case study

Here we take a look at a case study involving a video posted on Twitter that claimed to show an Italian coronavirus patient escaping from a hospital. We discover that the clip is not all it seems by applying our verification process and the five pillars of provenance, source, date, location and motivation.

How to verify accounts using digital footprints

Social media is currently full of people who claim to have reliable medical advice. There are ways to check if someone is who they claim to be through their social media profile. Follow these basic techniques for digital footprinting and verifying sources online:

An example and three simple rules to follow

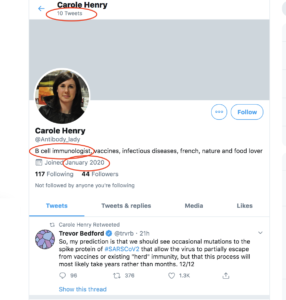

SOURCE: twitter.com

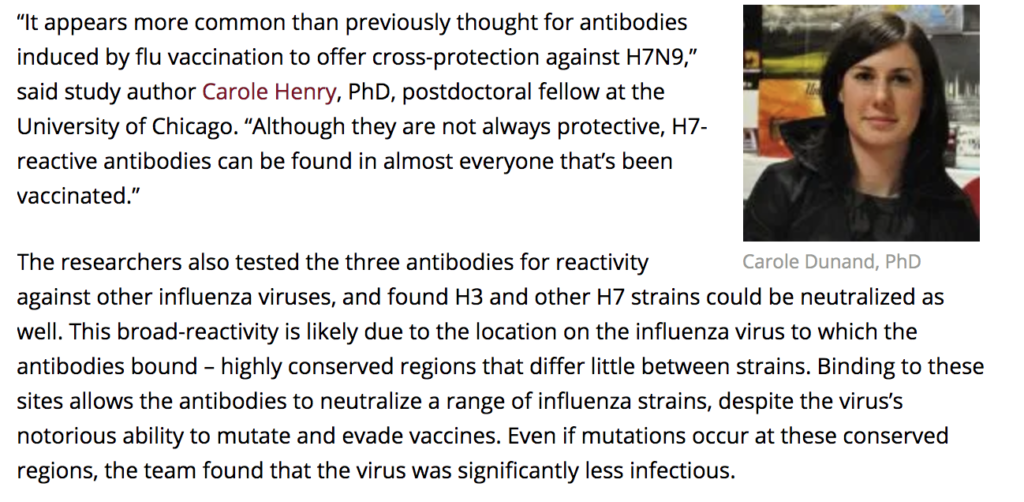

Let’s practice by verifying the Twitter profile of a medical professional: Carole Henry. Carole has been tweeting about Covid-19, and her bio says that she is a “B cell immunologist.”

But how can we be sure of her credentials? The profile doesn’t give us a lot of information: it doesn’t have the blue “verified” check; it doesn’t link to a professional or academic website; she only joined Twitter in January 2020; and she only has 10 tweets.

With a little searching, we can actually learn a lot about her.

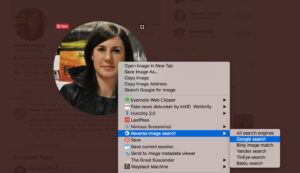

Rule #1: Reverse image search the profile picture

Reverse image searches are powerful because they give you a lot of information in a matter of seconds. We recommend you do reverse image searches with the RevEye Reverse Image Search extension, which you can download for Google Chrome or Firefox.

Once RevEye is installed, click on Carole’s profile picture to enlarge it. Next, right click the image, find “Reverse Image Search” in the menu, and select “Google search.”

A new tab should pop up in your browser. If you scroll to the bottom, there is a helpful section titled “Pages that include matching images.” Look through these.

|

|

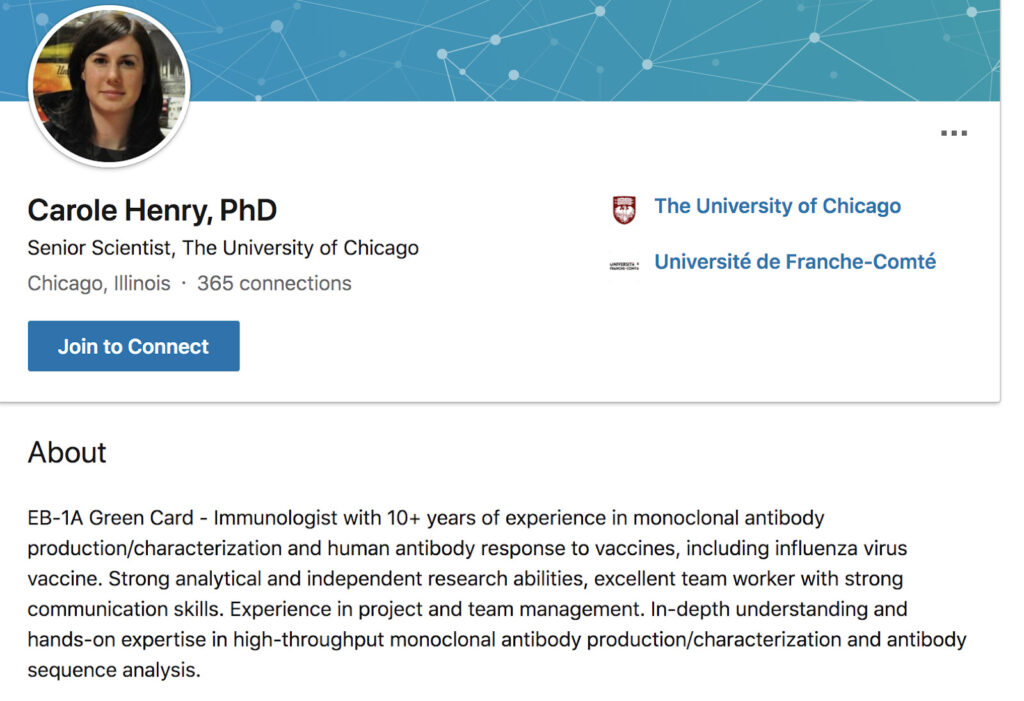

One of the first results looks like an article from the medical school of the University of Chicago. |

|

|

Scrolling down, we can see that same picture of Carole, and a quote that says she is a postdoctoral fellow at the university. Below the picture, it says her name is “Carole Dunand.” That is an interesting piece of info that we can use later. |

SOURCE: LinkedIn |

The reverse image search results also lead us to a Linkedin profile, that says she is a staff scientist at the University of Chicago with 10 years of experience as an immunologist. |

Rule #2: Check primary sources

SOURCE: Google.com

Remember the journalism rule-of-thumb: always check primary sources when they are available. In this case, if we want to confirm that Carole Henry is a scientist at the University of Chicago, the primary source would be a University of Chicago staff directory. A quick Google search for “Carole Henry University of Chicago” leads to a page for the “Wilson Lab.” The page has a University of Chicago web address, and also lists her as staff.

Rule #3: Find contact information

SOURCE: http://profiles.catalyst.harvard.edu/

It may be unlikely, but there is always a chance that the original Twitter profile we found is just someone posing as Carole Henry from the University of Chicago. For a truly thorough verification, look for contact information so you can reach out to the source.

Remember that other name we found – “Carole Dunand”? A search for “Carole Dunand University of Chicago” leads right to another staff directory, this time with her email address.

Here are some other questions that will help you verify accounts:

- When was the account created?

- Can you find that person online anywhere else?

- Who else do they follow?

- Are there any other accounts with that same name?

- Can you find any contact details?
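One way to keep track of these questions while researching a source is to record each answer as you find it and list what is still unchecked. The sketch below is a hypothetical note-taking structure in Python: the class, its field names and the example handle are all assumptions made for illustration, and nothing in it queries any platform.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class AccountNotes:
    """Working notes for one social media account (all field names are hypothetical)."""
    handle: str
    created: Optional[date] = None            # When was the account created?
    found_elsewhere: Optional[bool] = None    # Can you find that person online anywhere else?
    following_reviewed: bool = False          # Who else do they follow?
    same_name_accounts: Optional[int] = None  # Are there other accounts with that same name?
    contact_details: Optional[str] = None     # Can you find any contact details?


def open_questions(notes: AccountNotes) -> List[str]:
    """Return the checks that still have no recorded answer."""
    pending = []
    if notes.created is None:
        pending.append("creation date")
    if notes.found_elsewhere is None:
        pending.append("presence elsewhere online")
    if not notes.following_reviewed:
        pending.append("accounts followed")
    if notes.same_name_accounts is None:
        pending.append("accounts with the same name")
    if not notes.contact_details:
        pending.append("contact details")
    return pending


# Placeholder values for illustration only, not taken from a real profile.
notes = AccountNotes(handle="@example_scientist", created=date(2020, 1, 15), found_elsewhere=True)
print(open_questions(notes))
```

An empty list does not mean the account is verified; it only means every question has been looked at, and the answers still need to be weighed together.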

Verify images with reverse image search

A picture is worth a thousand words, and when it comes to disinformation it can also be worth a thousand lies. One of the most common types of misinformation we see at First Draft looks like this: genuine photographs or videos that have not been edited at all but are reshared to fit a new narrative.

But with a few clicks, you can verify these types of images when they are shared online and in messaging groups.

Just like you can “Google” facts and claims, you can ask a search engine to look for similar photos and even maps on the internet to check if they’ve been used before. This is called a ‘reverse image search’ and can be done with search engines like Google, Bing, Russian website Yandex, or other databases such as TinEye.

In January, Facebook posts receiving thousands of shares featured the photograph (embedded below) and claimed the people in the photo were coronavirus victims in China. A quick look at the architecture shows that it looks very European, which might raise suspicion. Then if we take the image, run it through a reverse image search engine, and look for previous places it has been published, we find the original from 2014. It was an image, originally published by Reuters, of an art project in Frankfurt, which saw people lie in the street in remembrance of the victims of a Nazi concentration camp.

A photograph of an art project in 2014 in Germany that got shared on Facebook in 2020 to falsely claim the people in the photo were coronavirus victims in China. SOURCE: Kai Pfaffenbach/Reuters

Here are some tools you can use:

- Desktop: RevEye’s plugin allows you to search for any image on the internet without leaving your browser.

- Phone: TinEye allows you to do the same thing on your phone.

The whole process takes a matter of seconds, but it’s important to remember to check any time you see something shocking or surprising.
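If an image already has a public web address, the same check can be started from a short script that builds a search link for several engines at once. The Python sketch below is illustrative only. The URL patterns are unofficial assumptions based on how these sites appeared to accept image addresses at the time of writing, they are not documented APIs and may change or stop working, and the function name is hypothetical.

```python
from urllib.parse import quote

# ASSUMPTION: unofficial URL patterns, not documented APIs; they may change.
SEARCH_URL_TEMPLATES = {
    "Google": "https://lens.google.com/uploadbyurl?url={image}",
    "Yandex": "https://yandex.com/images/search?rpt=imageview&url={image}",
    "TinEye": "https://tineye.com/search?url={image}",
}


def reverse_search_links(image_url: str) -> dict:
    """Build one reverse image search link per engine for a publicly hosted image."""
    # Percent-encode the whole address so it can be passed as a query parameter.
    encoded = quote(image_url, safe="")
    return {
        engine: template.format(image=encoded)
        for engine, template in SEARCH_URL_TEMPLATES.items()
    }


# Placeholder address for illustration; replace it with the image you want to check.
for engine, link in reverse_search_links("https://example.com/photo.jpg").items():
    print(engine, link)
```

Opening each link in a browser shows that engine's results. Images received in a messaging app have no public address, so they would need to be uploaded to the search engine directly instead.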

In conclusion

As we work together to tackle the information disorder around coronavirus, it’s clear that untangling facts from misleading or incorrect information will be harder than ever. We hope this guide has given you a better understanding of the infodemic, and provided some tools and techniques to help you monitor and verify information online. Ultimately, as reporters, researchers and journalists we hope that this course will help you provide reliable information to your audiences, at the time they need it most.

Continue to follow firstdraftnews.org for the latest news and information. In particular, see our Resources for Reporters section, which is updated regularly with new tools, guides, advice, FAQs, webinars and other materials to help you make informed judgements and produce credible coverage during Covid-19.

If you haven’t already, you can stay in touch with our daily and weekly newsletter service and on Twitter, Instagram and Facebook.

Reading list

Overview

- READING – Whitney Phillips, 2020 – The Internet is a Toxic Hellscape but we can fix it

- READING – Claire Wardle, Scientific American 2019 – Misinformation has created a new world disorder

- READING – Nieman Lab, 2019 – Galaxy brain: The neuroscience of how fake news grabs our attention, produces false memories, and appeals to our emotions

- RESOURCES – Nieman Lab, 2020 – “Rated false”: Here’s the most interesting new research on fake news and fact-checking

- PODCAST – Wharton, University of Pennsylvania, 2018 – Why Fake News Campaigns are So Effective

- PODCAST – Little Atoms, 2020 – Governments V The Robots: Fake news, post-truth, and all that jazz

- PODCAST – Hyperallergic 2019 – The Political Life of Memes with An Xiao Mina

- VIDEO – BBC 2019 – Flat Earth: How did YouTube help spread a conspiracy theory?

- VIDEO – NYTimes 2019 – Deepfakes: Is This Video Even Real? | Claire Wardle

- VIDEO – TED 2019 – How you can help transform the internet into a place of trust | Claire Wardle

- VIDEO – TED 2012 – How you can separate fact and fiction online | Markham Nolan

- BOOK – Hugo Mercier – The Enigma of Reason

- BOOK – Limor Shifman 2014 – Memes in Digital Culture

- BOOK – Ryan M. Milner 2016 – The World Made Meme

- GAME – DROG, 2019 – Get Bad News

- GAME – Yle, Finnish Broadcasting Company 2019 – Troll Factory

- FD guide on Information Disorder

- JIGSAW – The Disinformation Issue

Monitoring

- FD Guide to Monitoring

- TWITTER LIST – Disinformation Essentials

- How to investigate health misinformation (and anything else) using Twitter’s API

- How are social media platforms responding to the “infodemic”?

- PODCAST – NPR Hidden Brain, 2019 – Facts Aren’t Enough: The Psychology Of False Beliefs

- FD Guide on Closed Groups, Messaging Apps & Online Ads

Verification

- FD Verification Guide

- BOOK – Craig Silverman Verification Handbook

- RESOURCES – Global Investigative Journalism Network 2019

- InVID Reverse Image search

- Amnesty International Citizen Evidence Lab how to use InVID-WeVerify

- Guide to using reverse image search for investigations – Bellingcat

- Tool to help journalists spot doctored images is unveiled by Jigsaw

- How to use your phone to spot fake images surrounding the U.S.-Iran conflict

- Interactive geolocation challenge

- Interactive Observation Challenge

Reporting

- FD Guide Responsible Reporting in an Age of Information Disorder

- Tips for reporting on Covid-19 and slowing the spread of misinformation

- How journalists can help stop the spread of the coronavirus outbreak

- Misinformation and Its Correction: Continued Influence and Successful Debiasing

- Media Manipulation and Disinformation Online

- Mapping Coronavirus Responsibly

- Asian American Journalists Association calls on news organizations to exercise care in coverage of the coronavirus outbreak

- Data Voids: Where Missing Data can easily be exploited

- How to stay mentally well while reporting on the coronavirus

- How journalists can deal with trauma while reporting on Covid-19

- Mental health and infectious outbreaks: insights for coronavirus coverage

- Covering Coronavirus: Resources for Journalists (Dart Center)

- How journalists can fight stress from covering the coronavirus (Poynter)

- Coronavirus: 5 ways to manage your news consumption in times of crisis (The Conversation)

- How to Deal With Coronavirus If You Have OCD or Anxiety (Vice)

- Self-Care Amid Disaster (Dart Center)

- Managing Stress & Trauma on Investigative Projects (Dart Center)

- Journalism and Vicarious Trauma (First Draft)

- For reporters covering stressful assignments, self-care is crucial (Center for Health Journalism)

- Editor Perspective: Self-Care Practices and Peer Support for the Newsroom (Dart Center)

- Coronavirus: how to work well from home (Leapers)

Glossary

An evolving list of words to talk about disinformation

A mistake many of us make is assuming that because we use Facebook and Twitter, the platforms are easy to understand and report on. We hope that this glossary demonstrates the complexities of this space, especially in a global context.

The glossary includes 62 words. You can use CMD + F (or Ctrl + F) to search through the content, or just scroll down.

4chan

An anonymous message board started in 2003 by Christopher Poole, also known as “moot” online, who modeled it after Japanese forums and imageboards. The threaded-discussion platform has since spiraled out to include random innocuous topics, as well as pornography, racism, sexism, toxic masculinity and disinformation campaign coordination. 4chan is credited with having fueled interest in, and the sharing of, memes and sees itself as a champion of free, anonymous speech. Poole started working for Google in 2016. Here is his TED talk from 2010 where he explains the platform’s founding and why it’s an important place for free speech.

Amplification

When content is shared on the social web in high numbers, or when the mainstream media gives attention or oxygen to a fringe rumor or conspiracy. Content can be amplified organically, through coordinated efforts by motivated communities and/or bots, or by paid advertisements or “boosts” on social platforms. First Draft’s Rory Smith and Carlotta Dotto wrote up an explainer about the science and terminology of bots.

Amplification and search engines

It is sometimes the goal of bad actors online to create and distribute conspiracy campaigns. They look to game search engines by including words and phrases taken from certain message boards, trying to create and drive trends and to provide maximum exposure to fringe, and often toxic, movements and ideologies. See the reporting tips section from Data & Society’s “The Oxygen of Amplification.” The full report is a must-read for any working journalist.

Algorithm

Algorithms in social and search platforms provide a sorting and filtering mechanism for content that users see on a “newsfeed” or search results page. Algorithms are constantly adjusted to increase the time a user spends on a platform. How an algorithm works is one of the most secretive components of social and search platforms; there has been no transparency to researchers, the press or the public. Digital marketers are well-versed in changes to the algorithm, and it’s these tactics (using videos, “dark posts,” tracking pixels, etc.) that also translate well for disinformation campaigns and bad actors.

Anonymous message boards

A discussion platform that does not require people who post to publicly reveal their real name in a handle or username, like Reddit, 4chan, 8chan, and Discord. Anonymity can allow for discussions that might be more honest, but that can also become toxic, often without repercussions for the person posting. Reporting recommendation: as with 4chan, if you choose to include screenshots, quotes, and links to the platform, know that published reports that include this information have the potential to fuel recruitment to the platform, amplify campaigns that are designed to attack reporters and news organizations or sow mischief and confusion, and inadvertently create search terms/drive search to problematic content.

Analytics (sometimes called “metrics”)

Numbers that are accumulated on every social handle and post, and sometimes used to analyze the “reach” of a post, that is, how many other people might have seen or engaged with it.

API

An API, or application programming interface, is a means by which data from one web tool or application can be exchanged with, or received by, another. Many working to examine the source and spread of polluted information depend upon access to social platform APIs, but not all are created equal and the extent of publicly available data varies from platform to platform. Twitter’s open and easy-to-use API has enabled more research and investigation of its network, which is why you are more likely to see research done on Twitter than on Facebook.

Artificial intelligence (“AI”)

Computer programs that are “trained” to solve problems. These programs “learn” from data parsed through them, adapting methods and responses in a way that will maximize accuracy. As disinformation grows in its scope and sophistication, some look to AI as a way to effectively detect and moderate concerning content, like the Brazilian fact-checking organization Aos Fatos’s chatbot Fátima, which replies to questions from the public with fact checks via Facebook Messenger. AI can also contribute to the problem, through things like “deep fakes” and by enabling disinformation campaigns that can be targeted and personalized much more efficiently.² Reporting recommendation: WITNESS has led the way on understanding and preparing for “synthetic media” and “deep fakes.” See also the report by WITNESS’ Sam Gregory and First Draft “Mal-uses of AI-generated Synthetic Media and Deepfakes: Pragmatic Solutions Discovery Convening.”

Automation

The process of designing a “machine” to complete a task with little or no human direction. Automation takes tasks that would be time-consuming for humans to complete and turns them into tasks that are quickly completed. For example, it is possible to automate the process of sending a tweet, so a human doesn’t have to actively click “publish.” Automation processes are the backbone of techniques used to effectively manufacture the amplification of disinformation. First Draft’s Rory Smith and Carlotta Dotto wrote up an explainer about the science and terminology of bots.

Boolean queries

A combination of search operators like “AND,” “OR,” and “-” that filter search results on a search engine, website or social platform. Boolean queries can be useful for topics you follow daily and during breaking news.
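As a rough illustration of how such a query is put together, here is a minimal Python sketch. The function name `build_boolean_query` and the sample terms are hypothetical, and operator syntax differs between search engines and platforms (on some, a plain space already means “AND”), so check the documentation of the tool you are using.

```python
def build_boolean_query(any_of, all_of=(), exclude=()):
    """Combine search terms with OR, AND and "-" into one query string."""

    def quote(term):
        # Wrap multi-word phrases in double quotes so they match as a unit.
        return f'"{term}"' if " " in term else term

    parts = []
    if any_of:
        # Any one of these terms may appear.
        parts.append("(" + " OR ".join(quote(t) for t in any_of) + ")")
    # Every one of these terms must appear.
    parts.extend(quote(t) for t in all_of)
    query = " AND ".join(parts)
    if exclude:
        # A leading "-" removes results that contain the term.
        query += " " + " ".join("-" + quote(t) for t in exclude)
    return query.strip()


query = build_boolean_query(
    any_of=["coronavirus", "covid19", "covid-19"],
    all_of=["vaccine"],
    exclude=["giveaway"],
)
print(query)  # (coronavirus OR covid19 OR covid-19) AND vaccine -giveaway
```

Keeping the term lists in one place makes it easier to add new spellings or slang as you find them in comments and replies.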

Bots

Social media accounts that are operated entirely by computer programs and are designed to generate posts and/or engage with content on a particular platform. In disinformation campaigns, bots can be used to draw attention to misleading narratives, to hijack platforms’ trending lists and to create the illusion of public discussion and support.⁴ Researchers and technologists take different approaches to identifying bots, using algorithms or simpler rules based on the number of posts per day. First Draft’s Rory Smith and Carlotta Dotto wrote up an explainer about the science and terminology of bots.
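A simple rule based on posts per day could be sketched in Python as follows. This is only an illustration: the threshold of 50 posts is a hypothetical value chosen for the example, the function names are made up, and a high posting rate is a reason to look more closely at an account, not proof that it is a bot.

```python
from collections import Counter
from datetime import datetime

# Hypothetical cut-off for illustration; researchers choose their own values.
POSTS_PER_DAY_THRESHOLD = 50


def max_posts_per_day(timestamps):
    """Return the highest number of posts made on any single calendar day."""
    per_day = Counter(ts.date() for ts in timestamps)
    return max(per_day.values(), default=0)


def looks_automated(timestamps, threshold=POSTS_PER_DAY_THRESHOLD):
    """Flag an account for closer human review based on its busiest day."""
    return max_posts_per_day(timestamps) >= threshold


# Made-up sample data: 60 posts within one hour versus one post a day.
busy_account = [datetime(2020, 3, 1, 12, minute) for minute in range(60)]
quiet_account = [datetime(2020, 3, day, 9, 0) for day in range(1, 6)]

print(looks_automated(busy_account))   # True
print(looks_automated(quiet_account))  # False
```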

Botnet

A collection or network of bots that act in coordination and are typically operated by one person or group. Commercial botnets can include as many as tens of thousands of bots.

Comments

Responses added to a social post, which get included in “engagement” figures in analytics. Reporting recommendation: when reviewing polarizing topics related to your beat, it is often in the comments that you will find other people to follow and terminology that might inform your Boolean queries and other online searches.

Conspiracy theories

The BBC lists three ingredients for why and how a conspiracy theory takes hold:

- Conspirator: a group like ‘big pharma’, the Freemasons, Skull and Bones, a religious group. Defining an enemy and accepting that the enemy will always be shady and secret.

- The evil plan: that even if you destroy the conspirator, their evil plan will live on with a goal of world domination.

- Mass manipulation: thinking about the strategies and power the conspirators have to keep their sinister plan or identity hidden.

With the coronavirus, we’re seeing conspiracies linked to the origin of the virus: for example, that it is a bio-weapon created by the Chinese, or that the virus was created in a lab by Bill Gates.

Cyborg

A combination of artificial and human tactics, usually involving some kind of automation, to amplify online activity. Reporting recommendation: this is a new-ish method used by bad actors to give the public appearance of authentic activity from a social account. It is important to distinguish in your reporting whether online activity appears to come from a cyborg, sock puppet, bot or human. Not every prolific account is a bot, and conflating a bot with a cyborg is inaccurate and could bring doubt and criticism to your reporting.

Dark ads

Advertisements that are only visible to the publisher and their target audience. For example, Facebook allows advertisers to create posts that reach specific users based on their demographic profile, page ‘likes’, and their listed interests, but that are not publicly visible. These types of targeted posts cost money and are therefore considered a form of advertising. Because these posts are only seen by a segment of the audience, they are difficult to monitor or track.⁸

Deepfakes

Fabricated media produced using artificial intelligence. By synthesizing different elements of existing video or audio files, AI enables relatively easy methods for creating ‘new’ content, in which individuals appear to speak words and perform actions, which are not based on reality. Although still in their infancy, it is likely we will see examples of this type of synthetic media used more frequently in disinformation campaigns, as these techniques become more sophisticated.⁹ Reporting recommendation: WITNESS has led the way on understanding and preparing for “synthetic media” and “deepfakes.” See also the report by WITNESS’ Sam Gregory and First Draft “Mal-uses of AI-generated Synthetic Media and Deepfakes: Pragmatic Solutions Discovery Convening.”

Deplatform

Removing an account from a platform like Twitter, Facebook, YouTube, etc. The goal is to remove a person from a social platform to reduce their reach. Casey Newton writes that there is now “evidence that forcing hate figures and their websites to continuously relocate is effective at diminishing their reach over time.”

Discord

An application that began in 2015, designed primarily for connecting the gaming community through conversations held on “servers.” Reporting recommendation: Many servers require permission to access and ask a series of questions before allowing new members into the community. As a journalist, you’ll need to determine what you are comfortable with in terms of how you answer the questions: will you answer truthfully and risk not being let in, or worse, being doxed? Or will you be vague and therefore not represent yourself as you would in person? Your newsroom also needs to establish what is and isn’t allowed when reporters include information published by anonymous personas online: will the information be used for deep background only, will you link directly to the information, will you name handles? Remember that these spaces can be incredibly toxic and that communities have been known to threaten and harass people in real life.

Discovery

Methods used through a combination of tools and search strings to find problematic content online that can inform and even direct reporting.

Disinformation campaign

A coordinated effort by a single actor or group of actors, organizations or governments to foment hate, anger, and doubt in people, systems and institutions. Bad actors often use known marketing techniques, and use the platforms as they are designed to work, to give agency to toxic and confusing information, particularly around critical events like democratic elections. The ultimate goal is to work messaging into the mainstream media.

Dormant account

A social media account that has not posted or engaged with other accounts for an extended period of time. In the context of disinformation, this description is used for accounts that may be human- or bot-operated, which remain inactive until they are ‘programmed’ or instructed to perform another task.¹⁰ Sometimes dormant accounts are hijacked by bad actors and programmed to send coordinated messages.

Doxing or doxxing

The act of publishing private or identifying information about an individual online, without his or her permission. This information can include full names, addresses, phone numbers, photos and more.¹¹ Doxing is an example of malinformation, which is accurate information shared publicly to cause harm.

Disinformation

False information that is deliberately created or disseminated with the express purpose of causing harm. Producers of disinformation typically have political, financial, psychological or social motivations.

Encryption

The process of encoding data so that it can be interpreted only by intended recipients. Many popular messaging services like Signal, Telegram, and WhatsApp encrypt the texts, photos, and videos sent between users. Encryption prevents governments and other lurkers from reading the content of intercepted messages. Encryption also thwarts researchers and journalists attempting to monitor mis- or disinformation being shared on the platform. As more bad actors are de-platformed and the messaging becomes more volatile and coordinated, these conversations will be had on closed messaging apps that law enforcement, the public, and the researchers and journalists who are trying to understand the motivations and messaging of these groups will no longer be able to access.

Engagement

Numbers on platforms, like Facebook and Twitter, that show publicly the number of likes, comments, and shares. Marketing organizations use services like Parse.ly, CrowdTangle, NewsWhip, etc., to measure interest in a brand; newsrooms began to use these tools to understand audience interest and trends; and now some journalists use these same tools to see where toxic messaging and bad actors might reach a tipping point for reporting on a topic or developing story. See also: Claire Wardle’s Trumpet of Amplification for thinking about a “tipping point,” that is, the point where enough people have seen a topic or term that reporting on it helps the public to understand, rather than accelerating its reach through premature reporting.

Facebook Graph Search

A functionality that ran for six years, from 2013 to June 2019, on Facebook and gave people and online investigators the ability to search for others on the platform and filter by criteria like check-ins, being tagged in photos, likes, etc. Other tools were built on the technology, and its removal has left online investigations in the lurch. The ethical debate began with human-rights investigators saying the technology was an encroachment on privacy, and online investigators and journalists perplexed about how to surface the same information. There was a similar outcry among journalists and the OSINT community when Google-owned Panoramio was shuttered in November 2017.

Facebook Ad Transparency

Facebook’s effort to create more transparency about what ads are circulating on the platform and to whom. Now you can search its advertising database globally for ads about social issues, elections or politics. The database can be useful for tracking how candidates, parties and supporters use Facebook to micro-target voters and to test messaging strategies.

Fake followers

Anonymous or imposter social media accounts created to portray false impressions of popularity about another account. Social media users can pay for fake followers as well as fake likes, views, and shares to give the appearance of a larger audience. For example, one English-based service offers YouTube users a million “high-quality” views and 50,000 likes for $3,150. The number of followers can build cachet for a profile, or give the impression that it is a real account.

Information disorder

A phrase created by Claire Wardle and Hossein Derakhshan to place into context the three types of problematic content online:

- Mis-information is when false information is shared, but no harm is meant.

- Dis-information is when false information is knowingly shared to cause harm.

- Mal-information is when genuine information is shared to cause harm, often by moving private information into the public sphere.
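The three definitions above turn on two questions: is the information false, and is it shared to cause harm? One way to picture that is the small Python sketch below. It is a simplification for illustration only; the names `DisorderType` and `classify` are hypothetical and are not part of Wardle and Derakhshan’s framework, and real cases rarely reduce to two yes/no answers.

```python
from enum import Enum


class DisorderType(Enum):
    MISINFORMATION = "mis-information"
    DISINFORMATION = "dis-information"
    MALINFORMATION = "mal-information"


def classify(is_false, intends_harm):
    """Map the two questions onto the three types of information disorder."""
    if is_false:
        # False content: whether harm is intended separates dis- from mis-.
        if intends_harm:
            return DisorderType.DISINFORMATION
        return DisorderType.MISINFORMATION
    if intends_harm:
        # Genuine content shared to cause harm.
        return DisorderType.MALINFORMATION
    # Genuine content shared without intent to harm is not information disorder.
    return None


print(classify(is_false=True, intends_harm=False).value)  # mis-information
print(classify(is_false=True, intends_harm=True).value)   # dis-information
print(classify(is_false=False, intends_harm=True).value)  # mal-information
```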

LinkedIn

One of the few US platforms allowed in China, LinkedIn can be a good starting point for digital footprinting an online source. Bellingcat published a useful tipsheet on how to get the most out of this platform.

Malinformation

Genuine information that is shared to cause harm. This includes private or revealing information that is spread to harm a person or reputation.

Manufactured amplification

When the reach or spread of information is boosted through artificial means. This includes human and automated manipulation of search engine results and trending lists and the promotion of certain links or hashtags on social media.¹⁷ There are online price lists for different types of amplification, including prices for generating fake votes and signatures in online polls and petitions, and the cost of downranking specific content from search engine results.

Meme

A term coined by biologist Richard Dawkins in 1976 for an idea or behavior that spreads person-to-person throughout a culture by propagating rapidly and changing over time. The term is now used most frequently to describe captioned photos or GIFs, many of which were incubated on 4chan and have since spread online. Take note: Memes are powerful vehicles of disinformation and often receive more engagement than the news article on the same topic from a mainstream outlet.

Microtargeting

The ability to identify a very narrow segment of the population, in this case on a social platform, and send specific messaging to that group. One of the biggest problems identified in the information that was exchanged online during the lead-up to the 2016 US Presidential election was the ability of political campaigns and disinformation agents to place wedge issues into the feeds of Facebook users. Facebook has since removed some of the category selections in the ad campaign area of the site, like “politics.” More on microtargeting and “psychographic microtargeting.”

Misinformation

Information that is false, but not intended to cause harm. For example, individuals who don’t know a piece of information is false may spread it on social media in an attempt to be helpful.

Normie

Online slang for a person who consumes mainstream news, uses mainstream online platforms and follows popular opinion. Not a compliment.

OSINT

An acronym that means open-source intelligence. Intelligence agents, researchers, and journalists investigate and analyze publicly available information to confirm or refute assertions made by governments and to verify the location and time of day from photos and videos, among many other operations. OSINT online communities are incredibly helpful when it comes to exploring and explaining new tools and how they arrived at a conclusion about an investigation, and they often enlist help to verify information, as Bellingcat did to find the whereabouts of a wanted criminal in the Netherlands in March 2019. Sixty people helped with that investigation in one day on Twitter.

Reddit

A threaded discussion message board that started in 2005 and requires registration to post. Reddit is the fifth most-popular site in the US, with 330 million registered users, called “redditors,” who post in subreddits. The subreddit, “the_Donald,” was one of the most active and vitriolic during the 2016 US election cycle.

Satire

Writing that uses literary devices such as ridicule and irony to criticize elements of society. Satire can become misinformation if audiences misinterpret it as fact. There is a known trend of disinformation agents labeling content as satire to prevent it from being flagged by fact-checkers. Some people, when caught, cling to the label of satire, as did a teacher in Florida who was discovered to have a racist podcast.

Scraping

The process of extracting data from a website without the use of an API. It is often used by researchers and computational journalists to monitor mis- and disinformation on different social platforms and forums. Typically, scraping violates a website’s terms of service (i.e., the rules that users agree to in order to use a platform). However, researchers and journalists often justify scraping because of the lack of any other option when trying to investigate and study the impact of algorithms. Reporting recommendation: newsrooms need to establish what they will and will not accept when it comes to information that breaks the rules of the website where the information was found and/or downloaded.

Sock puppet

An online account that uses a false identity designed specifically to deceive. Sock puppets are used on social platforms to inflate another account’s follower numbers and to spread or amplify false information to a mass audience. The term is sometimes treated as synonymous with “bot”; however, not all sock puppets are bots. The Guardian explains a sock puppet as a fictional persona created to bolster a point of view and a troll as someone who relishes not hiding their identity.

Shallow fakes

Low-quality manipulations to change the way a video plays. Awareness of shallow fakes increased in April and May 2019 when a video of US House Speaker Nancy Pelosi that circulated online had been slowed down to give the illusion that she was drunk while speaking at a recent event. Shallow fake manipulation is more concerning at present than deep fakes, as free tools are available to subtly alter a video and the alterations are quick to produce. Reporting recommendation: enlist the help of a video forensicist when a video trends and appears to be out of character with the person featured.

Shitposting

The act of throwing out huge amounts of content, most of it ironic, low-quality trolling, for the purpose of provoking an emotional reaction in less Internet-savvy viewers. The ultimate goal is to derail productive discussion and distract readers.

Spam

Unsolicited, impersonal online communication, generally used to promote, advertise or scam the audience. Today, spam is mostly distributed via email, and algorithms detect, filter and block spam from users’ inboxes. Technologies similar to those implemented to curb spam could potentially be used in the context of information disorder, or at least offer a few lessons to learn from.

Snapchat

The mobile-only multimedia app started in 2011. Snapchat has had its most popular features, like stories, filters, lenses and stickers, imitated by Facebook and Instagram. The app has 203 million daily active users, and estimates that 90 percent of all 13-24-year-olds and 75 percent of all 13-34-year-olds in the U.S. use the app. Snaps are intended to disappear, which has made the app popular, but screenshots are possible. In 2017, Snapchat introduced Snapmaps; however, there’s no way to contact the owner of the footage as user information is unclickable. Sometimes the user has their real name or a similar naming convention on another platform. It’s also difficult to search by tag unless you’re using a social discovery tool like NewsWhip’s Spike. Reporting recommendation: Snapmaps might be most useful for confirming activity at a breaking-news event, but not necessarily as a source of information for a published report.

Synthetic Media

A catch-all term for the artificial production, manipulation, and modification of data and media by automated means, especially through the use of artificial intelligence algorithms, such as for the purpose of misleading people or changing an original meaning.

Terms of service

The rules established by companies of all kinds for what is allowed and not allowed on their services. Platforms like Facebook and Twitter have long, ever-changing terms of service and have been criticized for unevenly implementing repercussions when someone breaks their rules. Reporting recommendation: newsrooms need to establish what they will and will not accept when it comes to information that is taken from a platform in a way that breaks its rules.

TikTok

A mobile-app video platform launched in 2017 by the Chinese company ByteDance as a rebrand of the app Musical.ly. Wired reported in August 2019 that TikTok is fuelling India’s hate speech epidemic. Casey Newton has a great distillation of what’s at stake, including Facebook pointing to the app’s growth in popularity as evidence of a “crowded market” in an appeal to lawmakers to avoid regulation.

Troll

Used to refer to any person harassing or insulting others online. While it has also been used to describe human-controlled accounts performing bot-like activities, many trolls prefer to be known and often use their real name.

Trolling

The act of deliberately posting offensive or inflammatory content to an online community with the intent of provoking readers or disrupting the conversation.

Troll farm

A group of individuals engaging in trolling or bot-like promotion of narratives in a coordinated fashion. One prominent troll farm was the Russia-based Internet Research Agency that spread inflammatory content online in an attempt to interfere in the U.S. presidential election.

Two-factor authentication (2FA)

A second way to identify yourself when logging into an app or website, which makes your logins more secure. It is usually associated with your mobile phone number, to which you will receive an SMS with a security code that you enter at the prompt to be given access to the app or site. This step can be annoying, but so can getting hacked because of a weakness in your protocols. Reporting recommendation: protect yourself and your sources by setting up two-factor authentication on every app and website (especially password manager and financial sites) that offers the service. It’s also recommended to use a free password manager like LastPass, long passwords of 16 characters or more, a VPN and incognito browsing when viewing toxic information online (Chrome, Firefox).

Verification

The process of determining the authenticity of information posted by unofficial sources online, particularly visual media.²⁵ It emerged as a new skill set for journalists and human rights activists in the late 2000s, most notably in response to the need to verify visual imagery during the “Arab Spring.” Fact-checking looks at official records only, not unofficial or user-generated content, although fact-checking and verification often overlap and will likely merge.

Viber

Started in 2010 and now owned by Japanese company Rakuten Inc., Viber is a messaging app similar to WhatsApp that is associated with a phone number and also has desktop accessibility. The app added end-to-end encryption to one-to-one and group conversations where all participants have the 6.0 or higher release; for encryption to work, you’re counting on everyone to have updated their app. Viber has 250 million users globally, compared to WhatsApp with 1.6 billion users, and is most popular in Eastern Europe, Russia, the Middle East, and some Asian markets.

VPN, or virtual private network

Used to encrypt a user’s data and conceal his or her identity and location. A VPN makes it difficult for platforms to know where someone pushing disinformation or purchasing ads is located. It is also sensible to use a VPN when investigating online spaces where disinformation campaigns are being produced.

WeChat

Started in 2011 as a WhatsApp-like messaging app for closed communication between friends and family in China, the app is now relied upon for interacting with friends on Moments (a feature like Facebook timeline), reading articles sent from WeChat Public Accounts (public, personal or company accounts that publish stories), calling a taxi, booking movie tickets and paying bills with a single click. The app, which has 1 billion users, is also reported to be a massive Chinese government surveillance operation. Reporting recommendation: WeChat is popular with Chinese immigrants globally, so if your beat includes immigration, it’s important to understand how the app works and how information gets exchanged.

Wedge Issue

These are controversial topics that people care about and have strong feelings about. Disinformation is designed to trigger a strong emotional response so people share it, whether out of outrage, fear, humor, disgust, love or anything else from the whole range of human emotions. The high emotional volatility of these topics makes them a target for agents of disinformation, who use them as a way to get people to share information without thinking twice. Examples include politics, policy, the environment, refugees, immigration, corruption, vaccines, women’s rights, etc.

WhatsApp

With an estimated 1.6 billion users, WhatsApp is the most popular messaging app, and the third-most-popular social platform by active monthly users, following Facebook (2.23 billion) and YouTube (1.9 billion). WhatsApp launched in 2009 and Facebook acquired the app in February 2014. In 2016 it added end-to-end encryption; however, there were data breaches by May 2019, which have made users nervous about privacy protection. First Draft was the first NGO to have API access to the platform with our Brazilian election project Comprova. Even with special access, it was difficult to know where disinformation started and where it might go next.

Zero-rating

Telecoms in the U.S. and much of the Western world have phone, text, and data bundled into one, relatively low-cost plan. Users in South America, Africa, and the Asia-Pacific region pay for each of those features separately. Platforms, most pronouncedly Facebook, have negotiated “zero-rating” with mobile carriers in these regions, which allows their platforms (Facebook, Facebook Messenger, Instagram, and WhatsApp) to be used outside of the data plan. These apps, when negotiated as “zero-rating,” are free to use. The largest issue with this arrangement is that, for these users, Facebook is the internet; the incentive to stay “on platform” is high. Many people share complete copy-and-paste articles from news sites into WhatsApp, and fact-checking this information outside of the platform requires data.

Thank yous

This course has been designed by Claire Wardle, Laura Garcia and Paul Doyle. It was dreamed up by our Managing Director Jenni Sargent.

Thank you to the many members of the First Draft team. People stepped up to record voiceovers under blankets in bathrooms, worked long hours over a weekend and/or fit these course-related demands on top of other crucial work. Contributors include Jacquelyn Mason, Anne Kruger, Akshata Rao, Alastair Reid, Jack Sargent, Diara J Townes, Shaydanay Urbani, and Madelyn Webb. We also repurposed incredible previous work done by Victoria Kwan, Lydia Morrish, and Aimee Rinehart.

We also want to thank the amazing design and copywriting team who helped us make this course: Manny Dhanda, Jenny Fogarty, and Matt Wright.