Electionland was likely one of the largest social newsgathering operations ever performed over the course of one day. More than 600 journalism students and experienced journalists monitored social media for any kind of indicator of voter suppression on 8 November 2016.

ProPublica, Google News Lab, Univision, WNYC, the CUNY Graduate School of Journalism and the USA Today Network teamed up to make Electionland a reality. The coalition of organisations combined 1000-plus verified social posts with supporting information from datasets and boots-on-the-ground reporting. There was no evidence of widespread voter suppression or vote rigging on Election Day.

I led the Feeder Desk on behalf of the First Draft Coalition on Election Day. The Feeder operation included professional journalists from the First Draft Coalition and beyond, staff from social platforms and more than 600 journalism students across the country. If a claim of voter suppression was reported on social media, our aim was to find it. We’ll be posting plenty more resources from this project over the coming weeks and months but I wanted to start with setting out how we tackled such a huge challenge.

Our approach

As yet, there is no single tool for monitoring social media that can work as quickly, accurately and thoroughly as a set of human eyes. For a project like this, where we needed to identify any incident that prevented people from casting their votes, we needed to see everything we possibly could. We needed to see it free of any algorithm that promoted posts for reasons such as popularity, shares or momentum. We also needed to do it systematically, catching as much as possible as it happened, and in a way that allowed us to scroll back if we suspected that we had missed something.

tl;dr: We did this by running thousands of manual searches, built from carefully worked-out keywords and Boolean queries, with more than 600 journalism students in newsrooms and universities across the US.

What we looked for

ProPublica told us what we needed to look for on Election Day. It was then a case of splitting the signals that might indicate voter suppression into manageable categories, without creating an overwhelming feed for the person monitoring each one.

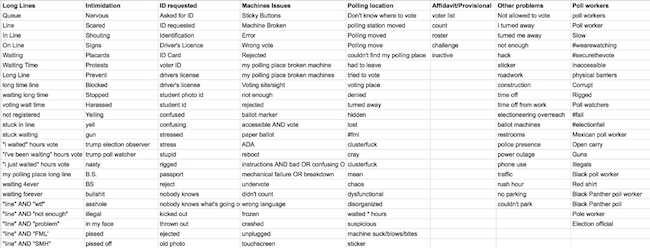

The problems we were looking for included:

- Long lines

- Voter intimidation

- ID Requests

- Machine issues

- Polling place location issues

- Affidavit or provisional ballots

- Poll worker problems

We then crowdsourced as many terms as possible that directly (or indirectly) related to these categories from within the project teams. The words we were specifically looking for were those that might actually be said by someone who was experiencing it in real-time and posting to social media, not just words that would describe it in news stories.

We also determined that a social signal could either be a statement that something was happening, or a request for information because someone suspected that something might be happening.

Once we had these social signals we did one of three things with them: turned them into Boolean searches and ran them natively on the social platforms, set them up as vast numbers of Tweetdeck columns, or slotted them into the various technologies that we had access to.
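To make the step from crowdsourced terms to a Boolean search concrete, here is a minimal sketch in Python. It is not the tooling we used on the day: the function names are hypothetical, the example terms are illustrative rather than the project's actual keyword list, and the `-filter:retweets` operator is Twitter search syntax that other platforms will not understand.

```python
def quote(term: str) -> str:
    """Wrap multi-word phrases in double quotes so they match as a phrase."""
    term = term.strip()
    return f'"{term}"' if " " in term else term


def build_query(signal_terms, place_terms, exclude_retweets=True):
    """Combine signal terms and place terms into one Boolean search string.

    Terms within a group are joined with OR; the two groups are placed
    side by side, which Twitter search treats as an implicit AND.
    """
    signals = " OR ".join(quote(t) for t in signal_terms)
    places = " OR ".join(quote(t) for t in place_terms)
    query = f"({signals}) ({places})"
    if exclude_retweets:
        # Twitter-specific operator; drop it for other platforms.
        query += " -filter:retweets"
    return query


# Illustrative terms only, phrased the way a voter might post them.
long_lines = ["long line", "waiting for hours", "still in line"]
baton_rouge = ["Baton Rouge", "Louisiana"]
print(build_query(long_lines, baton_rouge))
```

Keeping one query per category and per region, rather than one giant search, matches the idea of not asking any single feed to do too much.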

Technology

We were looking for posts, comments and live video from Facebook; Tweets, Periscopes, photos and videos from Twitter; photos and video from Instagram and interesting conversations surfacing on Reddit. We considered all of these signals to be valuable as they were all opportunities for voters to post updates in real-time. Most importantly, they were searchable.

We also used technology to help us find those signals.

Algorithmic tools such as Dataminr allowed Feeders to enter keywords and then leave it to algorithms to flag up when those keywords were connected to newsworthy social posts.

Banjo is already used by many student newsrooms across the country and so those J-Schools with access performed geofenced searches for the states and precincts they were specifically focused on.

We used AI through tools like Acusense* to search for the actual content of photos and videos posted to social platforms without relying on keywords or tags to find them.

Crowdtangle allowed us to search for relevant pages on Facebook. Everyone on the project also had access to Signal, which helped with geolocated Instagram searches and subject-based Facebook posts.

Tweetdeck was indispensable for sorting all the Boolean searches that everyone had to perform as well as viewing Dataminr alerts.

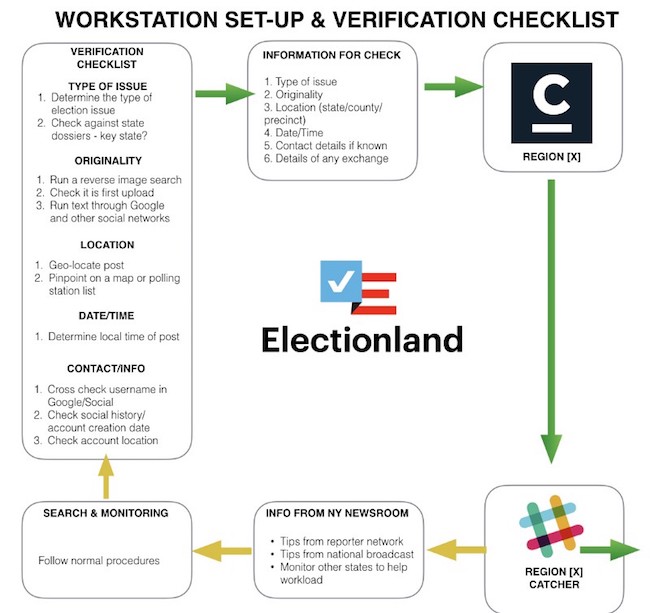

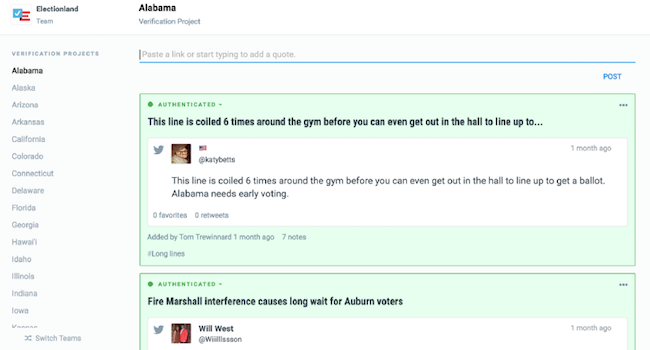

Check, a platform built by Meedan to help journalists collaborate on verification, allowed every Feeder to submit every signal or report to be picked up by the Catchers, journalists in Electionland’s main newsroom in New York tasked with verifying reports (more about them and the rest of the operation in future posts).

Every real-life workflow was complemented with a similar digital workflow in Check and about 1000 Electionland participants had access. Anything social that was caught would go through Check and enter the Electionland ecosystem where it could then be verified.

Check was the essential tool for organising all of the social newsgathering for verification. Image: Fergus Bell/Dig Deeper

Application

We had the technology and we had the people power. Next came the application. How could we create a replicable, systematic approach to social monitoring for more than 600 people over 13 different locations and four timezones? I’ll be devoting a future post to the newsgathering and newsroom workflows, but here I want to focus on the specifics of rolling this out in a way that was both effective and reactive.

This combination of people, technology and real-time breaking news couldn’t be simulated. We couldn’t test in advance. So one of the major considerations was to find a system flexible enough to change, where necessary, once the polls were open and the reports started flying in.

Dividing the work

We split the country up into different regions based on data from previous elections. This data gave us an indication of areas which had a lot of reported problems in the past and meant we were able to do our best to split the work up evenly between the satellite newsrooms run by the J-Schools.

Louisiana State University students working in Electionland’s Baton Rouge, LA campus satellite newsroom. Image: Louisiana State University
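For readers who want to try a similar split, the sketch below shows one way to balance regions across newsrooms using counts of past reported problems. It is an illustration under stated assumptions, not the method we used: the function name, the region labels and the counts are all made-up placeholders, and the greedy approach (largest region first, always to the least-loaded newsroom) is simply a common heuristic for this kind of balancing.

```python
import heapq


def split_regions(past_reports, newsrooms):
    """Assign regions to newsrooms so expected workloads are roughly even.

    past_reports: dict of region name -> number of past reported problems
    newsrooms:    list of newsroom names
    Greedy heuristic: take regions from busiest to quietest and give each
    one to whichever newsroom currently has the lightest expected load.
    """
    heap = [(0, name) for name in newsrooms]  # (expected load, newsroom)
    heapq.heapify(heap)
    assignment = {name: [] for name in newsrooms}

    by_size = sorted(past_reports.items(), key=lambda kv: kv[1], reverse=True)
    for region, count in by_size:
        load, name = heapq.heappop(heap)
        assignment[name].append(region)
        heapq.heappush(heap, (load + count, name))
    return assignment


# Placeholder numbers for illustration, not real election data.
past = {"Region A": 50, "Region B": 30, "Region C": 20, "Region D": 10}
print(split_regions(past, ["Newsroom X", "Newsroom Y"]))
```

A split like this is only a starting point; as described below, the system still had to be flexible enough to shift work once reports started coming in.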

Standardising the monitoring

We created standard monitoring systems that everyone worked to — the same keywords, the same tools and set-up — so that any tweaks could be rolled out to everyone at the same time and in the same way. The only difference between the regions was making social searches location specific, in order to keep them relevant.

What we standardised:

- Searches: We standardised the way searches were set up across all of the platforms, going so far as to provide specific Boolean searches for everyone to use in the same way. This gave us the confidence that every region was being covered to the same standard.

- Tabs: Every tool, platform or search was set up in a different tab within a web browser. We worked to the theory that systematically cycling through everything we had available to us was the only way to catch anything new and relevant throughout the day. By also splitting the searches into categories, and not asking each tool to do too much at any one time, we could be more confident that nothing was missed.

- Columns: We asked everyone to set up the same columns in Tweetdeck. Some of them were pretty complex Boolean searches (more on this in a future post); others displayed Dataminr alerts or regional lists.

- Tools: We set Dataminr, Krzana, Acusense* and Crowdtangle up in advance so they would just run in the background, giving us relevant pop-ups as we went about our tasks.

Standardising verification

As well as standardising the monitoring, we also needed to know what 600-plus people meant when they said something had been “verified”. A standard verification process and categorisation was essential. We didn’t want to exclude anything important, even if we couldn’t verify it immediately, so nothing was ever deleted; it was just tagged differently until its exact status could be determined.

This is the verification process we asked every Feeder to follow:

- Type of issue: A quick categorisation of the type of issue they had discovered so that it would be tagged appropriately in Check.

- Originality: As far as possible we needed to know that this was the original post and not a scrape or someone re-sharing information.

- Location: If the post wasn’t tagged with a confirmable location then other searches had to be performed or clues picked up on in order to place the incident.

- Date/time: Everything was converted into Eastern time by those pulling claims into Check, which was especially important because many platforms date content using different criteria. We also needed to note any difference that might exist between the time the claim was made on social media and the time the event in question actually happened.

- Contact info: If a Feeder had communicated with a source using a platform or email address not contained in the attached social content then we needed it noted for any follow-ups.
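The checklist above maps naturally onto a simple record. The sketch below is one hypothetical way to model it in Python; it is not how Check stores reports, and the class, field names and status label are assumptions made for illustration. It uses a fixed UTC-5 offset for Eastern time, which holds for 8 November 2016 because daylight saving had already ended; a general-purpose version would use a proper timezone database instead.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

# Eastern Standard Time as a fixed offset (valid for Election Day 2016).
EASTERN = timezone(timedelta(hours=-5), "EST")


def to_eastern(moment: datetime) -> datetime:
    """Convert a timezone-aware timestamp to Eastern time."""
    if moment.tzinfo is None:
        raise ValueError("timestamp needs a timezone before conversion")
    return moment.astimezone(EASTERN)


@dataclass
class Report:
    issue_type: str                          # e.g. "Long lines"
    is_original: bool                        # original post, not a re-share
    location: Optional[str]                  # None until it can be placed
    posted_at: datetime                      # when the claim was posted
    occurred_at: Optional[datetime] = None   # when the event happened, if known
    contact_info: Optional[str] = None       # off-platform contact details
    status: str = "unverified"               # re-tagged later, never deleted

    def __post_init__(self):
        # Normalise every timestamp to Eastern as the report is created.
        self.posted_at = to_eastern(self.posted_at)
        if self.occurred_at is not None:
            self.occurred_at = to_eastern(self.occurred_at)


pacific = timezone(timedelta(hours=-8), "PST")
report = Report("Long lines", True, None,
                datetime(2016, 11, 8, 9, 30, tzinfo=pacific))
print(report.posted_at.isoformat())  # 2016-11-08T12:30:00-05:00
```

Keeping `posted_at` and `occurred_at` as separate fields preserves the gap between when a claim was made and when the event took place.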

Collaborative improvement

Quick communication was essential in order to keep everyone singing from the same hymn sheet, even when things started to change — and they certainly did — throughout the day.

Each satellite newsroom at the 12 off-site J-Schools was led by a journalism professor who had received in-person training and briefings, and that individual was the point person throughout the day. This meant changes only needed to be communicated to a dozen people who weren’t physically in the New York newsroom, but information would still filter quickly through to every Feeder in the project.

Alabama students checking in with Electionland HQ in New York. Image: University of Alabama

We established a Google Hangout which we could use all day to communicate as quickly as possible.

We had stand-up meetings every two hours to communicate the latest changes and check in with those who might be struggling to keep up because of an increase in reports. This also helped to identify anyone who had additional capacity to chip in on different parts of the process.

Early takeaways

Some preliminary analysis of this large-scale social newsgathering operation:

Owning discovery and verification: There were some easy tasks and some that were harder. With so many people working on social discovery, owning what you were working on from start to finish, rather than just working on what was easiest, was very important. For example, it was far quicker and easier to find reports through standardised monitoring and expert tools compared to putting content through the full verification process, which was naturally a more difficult and slower task. We had to ensure that if you found something you claimed it — and did so straight away — owning the piece from start to finish, from discovery to verification.

Technology can’t yet replace real people: Nothing replaces our intuition or the ability of a journalist to identify the smallest but most relevant detail integral to processing any claim or piece of content. New technologies for social newsgathering are incredible but they can’t run the show yet and they must work for you, not you for them.

Workflows, workflows, workflows (and workflows): Having the right workflow was key to everything else falling into place, from standardising verification and communication channels to implementing a process for real-time troubleshooting. (This is something that will get a lot more attention in subsequent posts but requires a bit more analysis first.)

Trust your team: We trusted everyone who worked on this and if we didn’t recruit them directly we trusted those that did. Everyone pulled their weight, everyone had a huge passion for the project and everyone worked to the best of their ability — and then some. Trust the people you work with to do the job you ask of them.

Over the coming weeks we’ll be continuing to pick apart and analyse the lessons in social newsgathering that we learnt from our involvement in Electionland. Keep your eyes out for more deep dives.

*Author’s note: I am an advisor to Acusense and Krzana