At the start of the CNBC Republican debate Wednesday night, PolitiFact put out a call to its followers to help identify checkable statements:

If you hear something you’d like fact-checked, tweet at us with #PolitiFactThis #CNBCGOPDebate

— PolitiFact (@PolitiFact) October 29, 2015

At the same time that PolitiFact's team of checkers was watching the debate and listening intently for claims, an automated system was scanning the live debate transcript and tweeting out checkable statements like these:

#CNBCGOPDebate in 2008, barack obama missed 60 or 70% of his votes and the same newspaper endorsed him again.

— ClaimBuster (@ClaimBusterTM) October 29, 2015

#CNBCGOPDebate they built the great wall of china, that is 13,000 miles.

— ClaimBuster (@ClaimBusterTM) October 29, 2015

Those are statements made by debate participants that contain a factual claim. You could have easily handed them to a fact checker and asked them to see if they’re true or not.

ClaimBuster is the system powering that Twitter account. It was developed at the University of Texas at Arlington by Ph.D. student Naeemul Hassan, in collaboration with partners including Bill Adair, the co-founder of PolitiFact who now runs the Duke Reporters’ Lab.

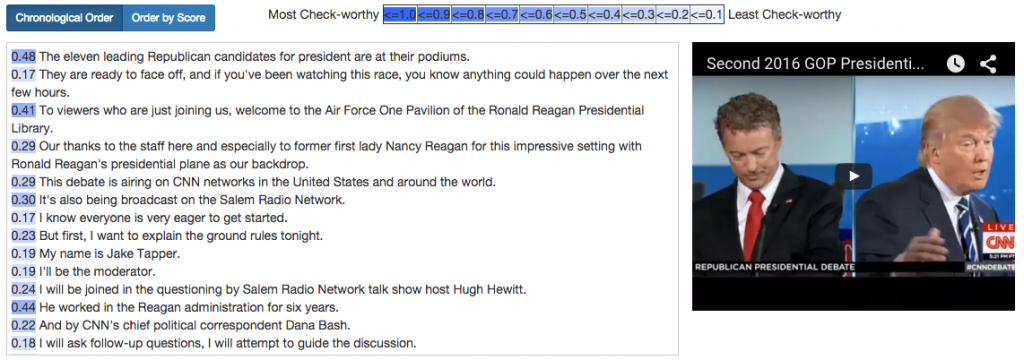

ClaimBuster quickly analyzes a selection of text and identifies what Hassan calls “Check-worthy Factual Sentences.” These sentences “contain factual claims and the general public will be interested in knowing whether the claims are true,” according to a research paper about ClaimBuster published by Hassan, Adair and five co-authors.

The system also assigns each sentence a score based on its “check-worthiness.” Here’s what that looked like during the September GOP debate (the darker the color, the more check-worthy the sentence):

Screenshot from ClaimBuster

The authors write that ClaimBuster represents one piece of what could eventually be a fully automated fact-checking system. They call this the “Holy Grail” of fact-checking, while also acknowledging that such a system is a complex and far-off goal.

“A fully automated fact-checker calls for fundamental breakthroughs in multiple fronts and, eventually, it represents a form of Artificial Intelligence (AI),” they write in the paper.

Along with being able to automatically identify checkable claims in real-time, the “Holy Grail” system would need to be able to compare the claims to a database of accurate and up-to-date checked facts that is comprehensive enough to check a wide range of claims. In a perfect scenario, the claim and the corresponding checked fact would be compared and the system would render an accurate verdict within a few seconds of the statement being made.
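To make that final comparison step concrete, here is a minimal Python sketch of matching a new claim against a store of previously checked claims. Everything in it is an assumption for illustration: the `CheckedFact` records and their verdicts are hypothetical placeholders, and standard-library string similarity (`difflib.SequenceMatcher`) stands in for the much harder problem of deciding whether two claims actually say the same thing. It is not how ClaimBuster or PolitiFact work.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import List, Optional


@dataclass
class CheckedFact:
    """A previously fact-checked claim and its verdict (hypothetical data model)."""
    claim: str
    verdict: str


def normalize(text: str) -> str:
    """Lowercase, strip surrounding punctuation and collapse whitespace."""
    return " ".join(text.lower().strip(" .!?").split())


def match_claim(
    claim: str, facts: List[CheckedFact], threshold: float = 0.6
) -> Optional[CheckedFact]:
    """Return the most similar checked fact, or None if no match clears the threshold.

    SequenceMatcher.ratio() measures character overlap (0.0 to 1.0); it is a
    crude stand-in for real claim matching, used here only for illustration.
    """
    best, best_score = None, 0.0
    for fact in facts:
        score = SequenceMatcher(None, normalize(claim), normalize(fact.claim)).ratio()
        if score > best_score:
            best, best_score = fact, score
    return best if best_score >= threshold else None


# Hypothetical database of checked claims; placeholders, not real fact checks.
CHECKED_FACTS = [
    CheckedFact("The city council raised property taxes by 10 percent last year", "False"),
    CheckedFact("The state added 50,000 manufacturing jobs in 2014", "Half True"),
]

result = match_claim("City council raised property taxes by 10 percent last year!", CHECKED_FACTS)
print(result.verdict if result else "No match; route to a human fact checker")  # prints: False
```

Character overlap like this breaks down quickly on paraphrased or novel claims, which is part of why the authors describe full automation as a far-off goal.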

We may never get there. But ClaimBuster on its own could prove useful for verification and debunking.

The authors note that “human fact-checkers frequently have difficulty keeping up with the rapid spread of misinformation. Technology, social media and new forms of journalism have made it easier than ever to disseminate falsehoods and half-truths faster than the fact-checkers can expose them.”

The ability to rapidly identify new claims gaining velocity on social media could help journalists and others track, verify and, when necessary, debunk rumors in their early stages.

Identifying emerging claims

When using Emergent to track and debunk online rumors, one of the biggest challenges I faced was identifying new rumors early in their existence. It’s hard to keep up with the spread of misinformation. It’s really hard to identify rumors and soon-to-be-viral claims when they are in their infancy.

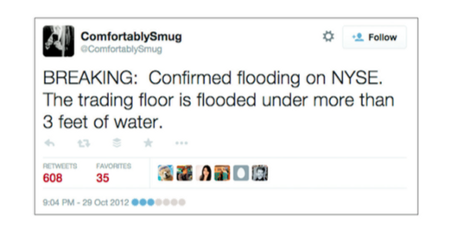

A viral false claim made during Hurricane Sandy

So I can imagine, for example, pointing ClaimBuster at a hashtag for a hurricane and watching as it extracts tweets that contain claims about the storm.

That could be a powerful way to track new rumors. Layer in the ability to filter the claims by keyword (“flood,” “dead,” “riot,” etc.) and sort them by the number of retweets and/or impressions over a given timeframe, and you have a rumor detection tool.
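Here is a rough Python sketch of that filter-and-sort step, assuming the claim-bearing tweets have already been extracted (the part ClaimBuster would handle) and collected along with their retweet counts and timestamps. The `ClaimTweet` record and `trending_claims` function are hypothetical names, the sample tweets are made up, and no real Twitter or ClaimBuster API is involved.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable, List


@dataclass
class ClaimTweet:
    """A tweet already flagged as containing a claim (hypothetical record)."""
    text: str
    retweets: int
    posted_at: datetime


def trending_claims(
    tweets: Iterable[ClaimTweet],
    keywords: Iterable[str],
    since: datetime,
    until: datetime,
) -> List[ClaimTweet]:
    """Keep claims that mention any keyword within [since, until], most-retweeted first."""
    wanted = [k.lower() for k in keywords]
    hits = [
        t
        for t in tweets
        if since <= t.posted_at <= until
        # Plain substring match: "flood" also catches "flooded" (and "dead" catches "deadline").
        and any(k in t.text.lower() for k in wanted)
    ]
    return sorted(hits, key=lambda t: t.retweets, reverse=True)


# Hypothetical storm-hashtag claims with made-up counts, for illustration only.
now = datetime(2024, 1, 1, 12, 0)
tweets = [
    ClaimTweet("Subway stations are flooded downtown", 500, now - timedelta(hours=1)),
    ClaimTweet("Reports say dozens dead near the harbor", 1200, now - timedelta(hours=2)),
    ClaimTweet("Power is out across the whole city", 900, now - timedelta(hours=1)),
    ClaimTweet("Flood waters reached the stadium", 3000, now - timedelta(days=2)),
]
for t in trending_claims(tweets, ["flood", "dead"], now - timedelta(hours=6), now):
    print(t.retweets, t.text)
```

The substring matching is a deliberate simplification; a working tool would want word-boundary or stemmed matching, and would likely weight recent retweet velocity rather than raw totals.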

But as with the Holy Grail of an automated fact-checking system, there are significant hurdles to getting there. One of them is the familiar question of whether there is a big enough market for the ClaimBuster team to keep pursuing fact checking (or verification) as their product strategy.

The market for claim identification

As of today, ClaimBuster is a research project. Hassan and colleagues are spending the next seven weeks talking to newsrooms and others to see if and how it could be commercialized, and what the best market could be.

“We’re trying to find a customer segment where they have the need [for the product],” Hassan told me this week.

My honest feedback for him was that journalists and newsrooms are probably not that customer segment.

Even though fact checking is growing, it’s still not present in most newsrooms. Would a newsroom leader pay for a tool to pull out claims for them to check? My feeling is no. All that does is give them a list of claims that they have to find a journalist to check. It’s still very labor intensive, and there needs to be a strong argument for why an editor would assign someone to check claims rather than do something else.

Would newsrooms pay for a tool that could analyze social media and identify new and possibly soon-to-be-viral claims? As a standalone product, I don’t see newsrooms paying for that. It strikes me as more of a feature within a product such as NewsWhip’s Spike, which is already helping newsrooms and others identify trending content.

The real opportunity, as is often the case, is to move away from thinking about newsrooms as customers and instead focus on brands. The social media team at McDonald’s might like to know that a claim about, say, where they source their beef is picking up traction online.

If you also used ClaimBuster to scan a database such as Nexis for new claims appearing in news articles, you would have a powerful claim and rumor tracker for brands. PR firms would love to be able to see all the claims being made about their clients.

The same is true for political operations: tell them which claims about their candidate are out there, and show which ones are gaining traction. Or let them track the same information about an opponent. (The end result, of course, might be that Candidate X would be tempted to amplify and pour gasoline on an emerging rumor about Candidate Y, even if it’s not true.)

This is a familiar scenario in that there are often products and new technologies that could help journalists, but newsrooms can be tough or unworkable as a primary market. The exception is if you have a product that can demonstrably help the organization earn revenue, grow traffic or cut costs. I don’t think fact-checking has a clear case in any of those areas right now.

But if a corporate market can be found and developed, newsrooms can become an attractive secondary sale. This is already the case for products such as Dataminr.

The point is that the best path for developing and commercializing fact checking technology is likely to first ensure it can be applied outside of the newsroom.

Our “Holy Grail” is only going to be found if other industries help fund the quest.