Background from kattefretter/Flickr. Dial and composite by Ali Abbas Ahmadi/First Draft. Some rights reserved.

Bot detection is no simple task. While academics and researchers agree on many of the metrics used to flag automated and co-ordinated online activity, no two models or algorithms are alike. And many of these models only apply when the relevant data has been collected, making it difficult for those of us working in breaking news or monitoring online activity in real-time to identify suspicious behaviour with confidence.

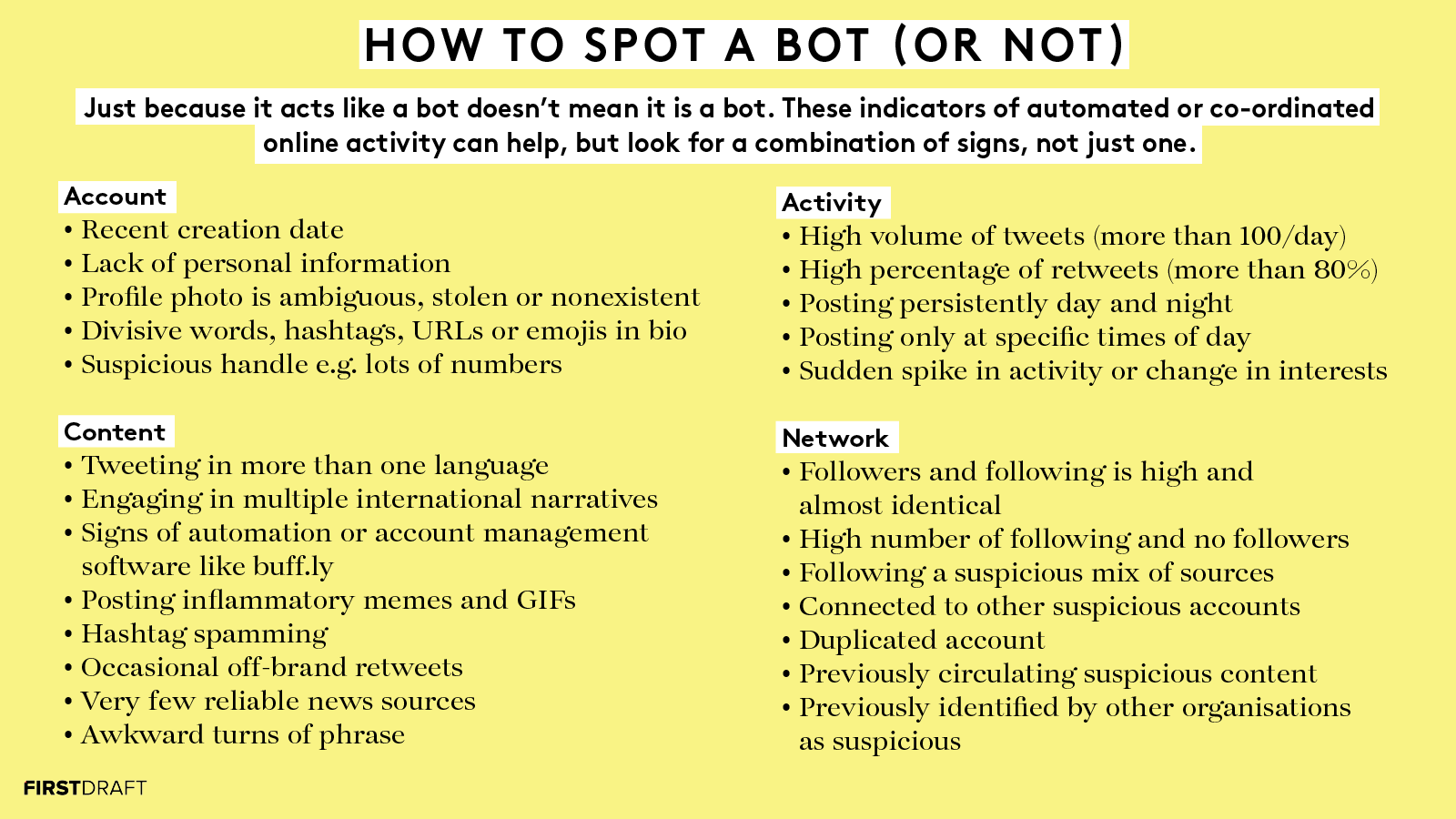

From talking with academics and researchers, studying the work of others, and carrying out our own investigations, First Draft has put together a list of indicators to help anyone identify suspicious online activity.

The list of indicators is broken down by category: account information, the account’s pattern of activity, content posted by the account, and the network of other accounts it may be part of. Within each category are different metrics that can be red flags for automation.

Read: “The not-so-simple science of social media ‘bots’”

Given the complexity and imprecise nature of detecting bots, trolls, cyborgs, sock puppets or any other type of inauthentic account on social media, these metrics are not exhaustive. No single indicator is enough to say conclusively whether an account is a bot. Most of them, in isolation, could be perfectly normal for some social media users.

But the more characteristics an account displays, the more likely it is to be automated or co-ordinated. If an account ticks ten indicators off the list there is a high likelihood it is inauthentic, although this judgement will always depend on the circumstances.
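For readers who keep their notes in a spreadsheet or script, the tally can be written down in a few lines. The Python below is a minimal sketch, not a First Draft tool: the indicator names are hypothetical labels, and the threshold of ten simply mirrors the example in the text rather than a calibrated cut-off.

```python
# Hypothetical indicator labels; the article lists indicators but does not
# define a scoring scheme, so this is only a simple count of red flags.
def count_red_flags(indicators: dict[str, bool]) -> int:
    """Return how many indicators are marked True for an account."""
    return sum(1 for flagged in indicators.values() if flagged)


def likely_inauthentic(indicators: dict[str, bool], threshold: int = 10) -> bool:
    """True when the number of red flags reaches the (assumed) threshold."""
    return count_red_flags(indicators) >= threshold


example = {
    "recent_creation_date": True,
    "no_bio": True,
    "numeric_handle": True,
    "no_profile_picture": False,
    "over_100_tweets_per_day": True,
    "mostly_retweets": True,
}
print(count_red_flags(example))     # 5
print(likely_inauthentic(example))  # False
```

A count like this is only a prompt for closer inspection; as the text stresses, the final judgement depends on the circumstances.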

Taken together, these indicators provide signals that anyone can use to identify bot-like accounts without the need for large data servers, extensive coding skills or machine-learning algorithms.

First Draft

Account information

Creation date

A key indicator is the account’s creation date. A recent creation date, such as within the last year or the last few months, can flag an account or group of accounts as suspicious or bot-like. At the same time, an account with an early creation date (2008-2012) that lies dormant and then shows a sudden spike in activity could also be an indicator of automation.

Bio info

Check whether the user profile lacks information about a real person and instead includes a smattering of hashtags, emojis, URLs and politically divisive or hyper-partisan words. Sometimes inauthentic accounts will include a lot of bland assertions which would be widely accepted in the target society such as “dog lover” or “sports fan”.

Suspicious handle

A handle or username that includes random sequences of numbers is suspicious. The username may have a generic name followed by numbers, or it may just be a random sequence of numbers.
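A quick way to screen a list of handles is a pattern check. The sketch below assumes that "random numbers" means either a handle made up entirely of digits or one ending in a run of at least four digits; that minimum length is a hypothetical choice, and it will also catch innocent handles that end in a birth year.

```python
import re


def has_numeric_handle(handle: str, min_digits: int = 4) -> bool:
    """Flag handles that are all digits or end in a long run of digits.

    min_digits is an assumed cut-off, not a rule from the article.
    """
    handle = handle.lstrip("@")
    if handle.isdigit():
        return True
    # Look for a block of digits at the very end of the handle.
    match = re.search(r"\d+$", handle)
    return match is not None and len(match.group()) >= min_digits


for name in ["@jsmith48213957", "12345678", "FirstDraftNews", "bob7"]:
    print(name, has_numeric_handle(name))
```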

Profile and background picture

Suspicious accounts often don’t include a profile picture. Or they may include stock photos or random images — cartoon characters, animals, landscapes, TV personalities — instead. If in doubt, reverse-image search a user’s profile photo to test its authenticity. A missing background photo could also be a signal of automation.

The account shows various signs of possible automation, including a recent creation date, a handle with random numbers, a lack of bio information and no background image.

Pattern of activity

High volumes of activity

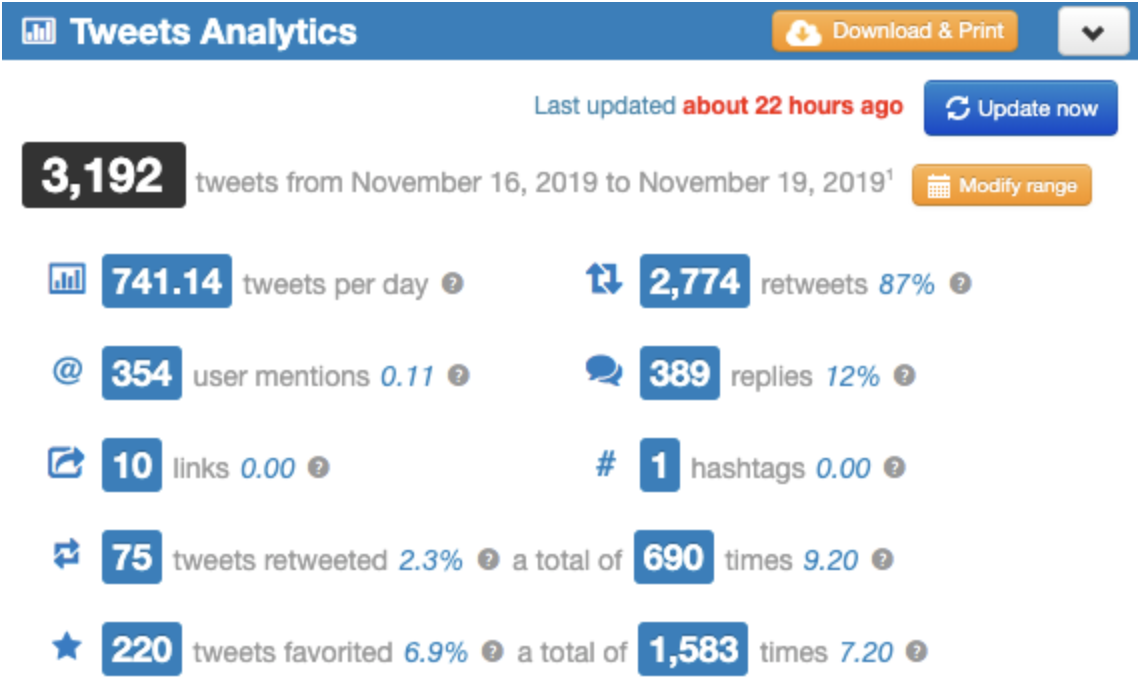

A high rate of activity is typically the first indicator of inauthenticity. However, it is difficult to agree on fixed benchmarks. As a general rule, First Draft flags accounts that post at least 100 tweets a day as showing signs of automation.

You should always think about what kinds of accounts you are looking at in a network or dataset. Journalists and news organisations are likely to post more frequently than other communities, for example, so may appear to be bot-like.
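Where an analytics tool only shows an account’s total tweet count and creation date, the daily rate can be estimated by hand. This Python sketch assumes a lifetime average is good enough; it uses the 100-tweets-a-day rule of thumb from the text, and the function names are hypothetical.

```python
from datetime import date


def average_tweets_per_day(total_tweets: int, created: date, today: date) -> float:
    """Lifetime average: total tweets divided by days since creation."""
    days_active = max((today - created).days, 1)  # avoid dividing by zero
    return total_tweets / days_active


def exceeds_daily_threshold(total_tweets: int, created: date, today: date,
                            threshold: float = 100.0) -> bool:
    """True if the lifetime average meets First Draft's 100-a-day rule of thumb."""
    return average_tweets_per_day(total_tweets, created, today) >= threshold


# 11,800 tweets over the 59 days from 1 January to 1 March 2019.
print(average_tweets_per_day(11800, date(2019, 1, 1), date(2019, 3, 1)))   # 200.0
print(exceeds_daily_threshold(11800, date(2019, 1, 1), date(2019, 3, 1)))  # True
```

A lifetime average smooths out bursts, so an old account that was dormant for years and then spiked will look quieter than it is; per-day figures from a tool such as Twitonomy are more revealing where available.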

A high percentage of retweets

Accounts that primarily retweet or like content from other users rather than tweeting original content can be flagged for signs of automation.
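The share of retweets is a simple proportion. The sketch below assumes each post has been exported as a dictionary with a boolean `is_retweet` field (a hypothetical field name); the article sets no cut-off, so what counts as "primarily" is left to the reader.

```python
def retweet_share(posts: list[dict]) -> float:
    """Fraction of posts that are retweets (0.0 for an empty list)."""
    if not posts:
        return 0.0
    retweets = sum(1 for post in posts if post.get("is_retweet", False))
    return retweets / len(posts)


sample = [
    {"is_retweet": True},
    {"is_retweet": True},
    {"is_retweet": False},
    {"is_retweet": True},
]
print(retweet_share(sample))  # 0.75
```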

Pattern of activity

If accounts routinely tweet only on certain days or at certain times, such as at night or between certain hours, this is also a red flag of automation. Accounts that display a sudden spike in activity and then stop posting altogether can also be suspicious.
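One way to check whether posting clusters at certain times is to count posts by clock hour and see how much of the activity falls in the busiest few hours. This is a sketch under stated assumptions: the timestamps are already in a single time zone, and `top_n` (the number of hours examined) is a hypothetical parameter. The same idea works for days of the week.

```python
from collections import Counter
from datetime import datetime


def busiest_hours_share(timestamps: list[datetime], top_n: int = 3) -> float:
    """Share of posts that fall in the account's top_n busiest clock hours."""
    if not timestamps:
        return 0.0
    by_hour = Counter(ts.hour for ts in timestamps)
    in_top_hours = sum(count for _, count in by_hour.most_common(top_n))
    return in_top_hours / len(timestamps)


posts = [
    datetime(2019, 5, 1, 1, 5),
    datetime(2019, 5, 1, 1, 40),
    datetime(2019, 5, 2, 2, 10),
    datetime(2019, 5, 2, 2, 55),
    datetime(2019, 5, 3, 3, 20),
    datetime(2019, 5, 3, 14, 0),
]
print(busiest_hours_share(posts))  # 5 of 6 posts fall in the three busiest hours
```

A value close to 1 means nearly all activity happens in a narrow window, which is worth comparing against the time zone the account claims to be in.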

High numbers of tweets per day and a high percentage of retweets, such as those seen in the Twitonomy analytics above, are signs of bot-like activity. Screenshot by author.

Content

International narratives/multiple languages

Analysing the content an account posts is another way of determining whether it exhibits bot-like characteristics. For example, does the account post in two or more languages? Does it post about different and seemingly unrelated issues, such as the yellow vests in France and Air Jordan sneakers?

Domains

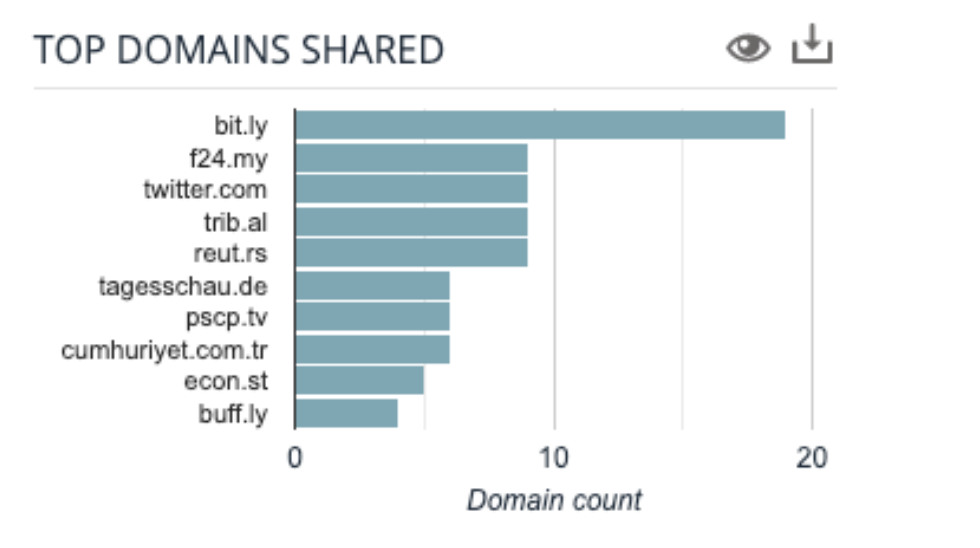

Sharing links on .ly domains, such as buff.ly, bit.ly and ow.ly, or posting through automation software, such as RTPoisson and IFTTT, can often be a key sign of automation. Both can be identified on analytics platforms such as SocialBearing.
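If you have a list of the links an account has shared, the proportion on .ly domains is easy to compute. This sketch treats any host ending in ".ly" as a match, following the article’s examples; it assumes each URL includes its scheme (such as `https://`), since Python’s `urlparse` cannot find the host otherwise. Detecting automation software such as IFTTT would need the posting-client information that analytics platforms expose, which is not covered here.

```python
from urllib.parse import urlparse


def ly_link_share(urls: list[str]) -> float:
    """Fraction of links whose host ends in .ly (e.g. bit.ly, buff.ly, ow.ly)."""
    if not urls:
        return 0.0
    hits = 0
    for url in urls:
        host = urlparse(url).hostname or ""  # hostname is returned lower-case
        if host.endswith(".ly"):
            hits += 1
    return hits / len(urls)


links = ["https://bit.ly/abc123", "https://ow.ly/xyz", "https://www.bbc.co.uk/news"]
print(ly_link_share(links))  # two of three links are on .ly domains
```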

Inflammatory memes/gifs

Is the account sharing or posting aggressive, inflammatory, defamatory, or misleading memes and gifs?

Links on .ly domains such as bit.ly and buff.ly, which analytics platforms such as SocialBearing can surface, are signs of bot-like behaviour.

Hashtag spamming

Is the account posting only hashtags or a few words followed by a long list of hashtags, especially ones that are hyper-partisan or issue-related?
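A rough measure of hashtag spamming is the share of words in a post that are hashtags. The sketch below splits on whitespace, which is a simplifying assumption; any threshold you apply to the result (say, more than half the words) is your own choice, not one given in the article.

```python
def hashtag_ratio(text: str) -> float:
    """Share of whitespace-separated tokens that are hashtags."""
    tokens = text.split()
    if not tokens:
        return 0.0
    hashtags = sum(1 for token in tokens if token.startswith("#") and len(token) > 1)
    return hashtags / len(tokens)


print(hashtag_ratio("Vote now #a #b #c #d #e #f"))  # 0.75
```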

Links

Does the account only use particular ‘news’ sources for links? For example, does it use several media sources of the same political leaning? And are these news sources highly questionable?

Suspicious turns of phrase

Awkward language or phrasing in posts or bio descriptions can be a sign of inauthentic behaviour.

Network characteristics

Connections to multiple other accounts of interest

Does the account have connections to other accounts of interest? Is the account retweeting, liking or interacting with other suspicious accounts that you have identified? Is it only retweeting content from specific accounts? Inspecting who else an account interacts with, such as who it follows and who is following it, reveals a lot about the nature of the account.

Follow-for-follower strategy

Is the ratio of an account’s followers to the accounts it follows roughly 1? For example, does the account have 5,001 followers and follow 5,003 accounts? This is particularly suspicious when both numbers are in the thousands.
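The follow-for-follow check combines two conditions from the text: a ratio close to 1 and counts in the thousands. In this sketch, "close" is an assumed 5% tolerance and "in the thousands" is read as at least 1,000 on both sides; both parameters are hypothetical.

```python
def follow_ratio_suspicious(followers: int, following: int,
                            tolerance: float = 0.05, min_count: int = 1000) -> bool:
    """True if both counts are large and their ratio is within tolerance of 1."""
    if followers < min_count or following < min_count:
        return False
    ratio = followers / following
    return abs(ratio - 1.0) <= tolerance


print(follow_ratio_suspicious(5001, 5003))   # True
print(follow_ratio_suspicious(200, 210))     # False: counts too small
print(follow_ratio_suspicious(5000, 12000))  # False: ratio far from 1
```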

The Information Operations Archive is a good place to go to see if an account has interacted with other accounts involved in known influence operations.

Suspicious mix of followed sources

Is the account following local pages or accounts in various countries around the world? Inauthentic accounts, especially when operated by state-controlled agencies, sometimes try to influence users in a number of different countries.

Previously identified by other organisations

Has the account been flagged by other organisations monitoring and investigating information disorder, such as DFRLab, EUvsDisinfo or the Oxford Internet Institute? Does the account appear in the Information Operations Archive? Researchers all over the world are looking into automation in their regions and countries, and they may be able to give more information about an account or network.

Duplicate accounts

Is the account connected to another or others that are similar in appearance? Search for some of the exact phrases put out by a suspicious account. Are other accounts posting the exact same thing? Are the usernames or bio information the same or nearly the same? Are the accounts referencing or interacting with each other?
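Searching for exact phrases can be automated once posts from several accounts have been collected. This sketch assumes posts are available as (handle, text) pairs and treats two posts as copies if they match after lower-casing and collapsing whitespace; near-duplicates with small wording changes would need fuzzier matching than this.

```python
from collections import defaultdict


def normalise(text: str) -> str:
    """Lower-case the text and collapse runs of whitespace."""
    return " ".join(text.lower().split())


def find_copy_paste_groups(posts: list[tuple[str, str]],
                           min_accounts: int = 2) -> dict[str, set[str]]:
    """Map each normalised text to the accounts that posted it, keeping only
    texts posted by at least min_accounts different accounts."""
    accounts_by_text: dict[str, set[str]] = defaultdict(set)
    for handle, text in posts:
        accounts_by_text[normalise(text)].add(handle)
    return {text: handles for text, handles in accounts_by_text.items()
            if len(handles) >= min_accounts}


collected = [
    ("a", "Breaking: X is true!"),
    ("b", "breaking:  X is true!"),
    ("c", "Something else entirely"),
]
print(find_copy_paste_groups(collected))
```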

The arms race between bot creators and bot detectors will no doubt continue apace. But these indicators, when used collectively, will hopefully help anyone detect inauthentic activity online.

Rory Smith also contributed to this report.
