What is Twitonomy? A tool for analysing Twitter accounts and their activity. Also a great way to identify indicators of suspicious or bot-like behaviour on the platform.

Do I have to pay for it? It’s free, although you need the premium version for some more advanced features like downloading data or analysing lists.

Why would I use it? To analyse the behaviour of accounts by inspecting activity like the frequency of posts or volume of retweets. It is also useful for identifying who someone most regularly engages with on the platform.

There has been a lot of talk about “bots” and suspicious online behaviour since automated Russian accounts were found to have been active during the 2016 US election. Now, with the 2020 election just around the corner, there’s still not much clarity on what makes an online account genuinely suspicious.

First Draft’s recent investigation into pro-Trump Twitter accounts bolstering British Prime Minister Boris Johnson’s Brexit messaging is an example of how journalists can analyse Twitter activity to detect accounts that may be automated, or controlled by software. Part of this process involves looking at the accounts behind those tweets and investigating their profiles. And this is where Twitonomy can help.

Twitonomy is a nifty tool that can be used to observe a specific account’s activity and quickly identify signs of suspicious behaviour.

It won’t conclusively determine whether an account is a bot, but the tool can highlight major signs of inauthentic or automated behaviour that merit further investigation.

In last week’s article, we inspected individual accounts that retweeted the trending hashtag #TakeBackControl, which Johnson used in a tweet announcing he had agreed the terms of a Withdrawal Agreement with EU leaders on October 17.

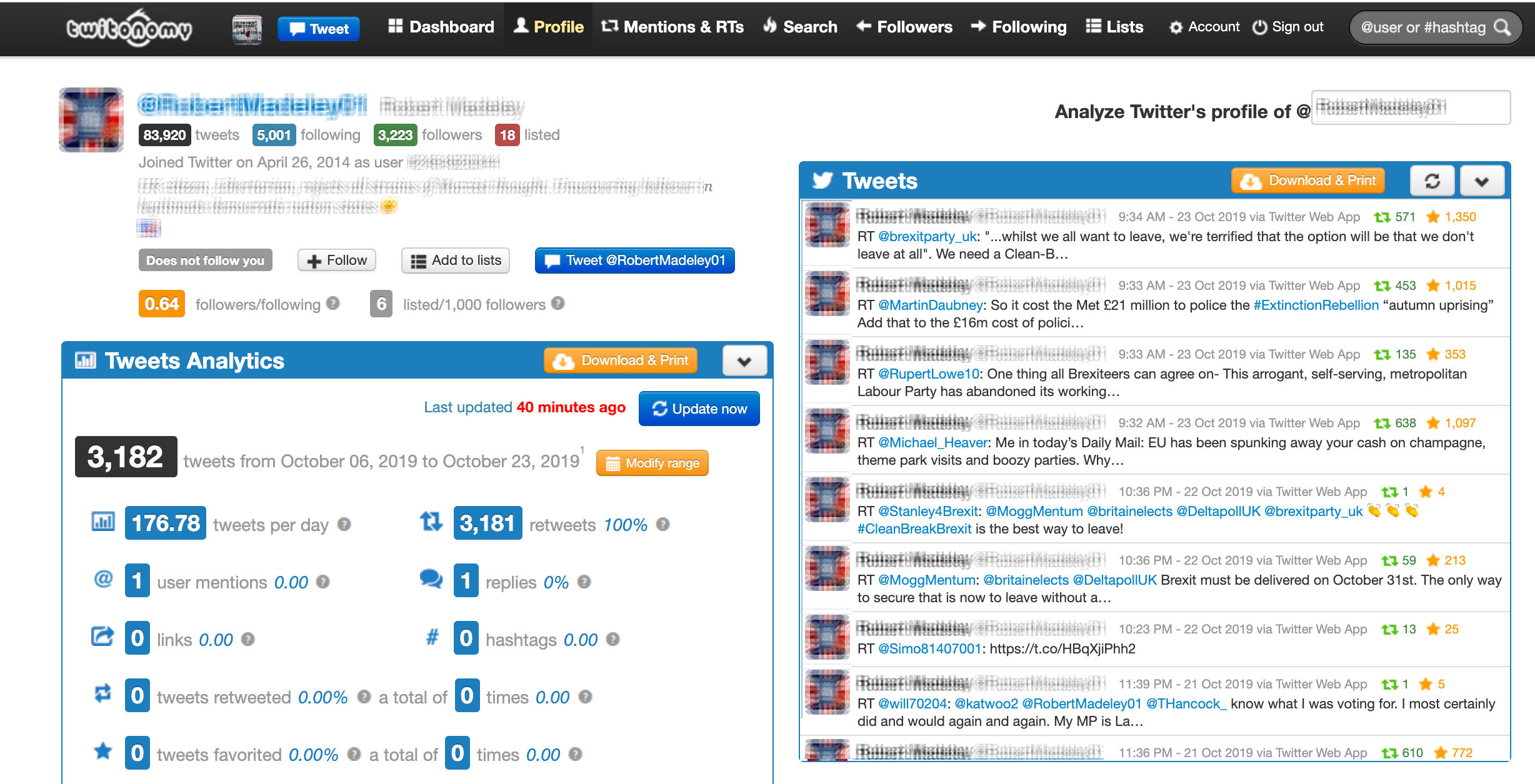

Pasting the username of an account into Twitonomy shows a range of metrics to better understand it. In this example, one user posted an average of 176.78 times per day over a week, according to Twitonomy. All but one of those posts were retweets.

Twitonomy allows users to see a range of analytics about a specific Twitter account. Screenshot: Twitonomy

This is not a sure sign of automation or bot-like behaviour, of course, but neither is it normal Twitter activity.

The Atlantic Council’s Digital Forensic Research Lab (DFRLab), which has spent recent years researching the behaviour of accounts linked to the Russian Internet Research Agency, said in 2017 that a consistent output of 72 tweets per day (one every ten minutes for a twelve-hour stretch) is cause for suspicion, and more than 144 tweets per day is highly suspicious.
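To make those thresholds concrete, here is a minimal sketch of how a daily average taken from Twitonomy could be checked against them. It is not part of Twitonomy or any DFRLab tooling: the function name `classify_daily_rate` and the text labels are hypothetical, chosen only for illustration.

```python
# Thresholds cited by DFRLab in 2017, in tweets per day.
SUSPICIOUS_PER_DAY = 72          # one tweet every ten minutes for twelve hours
HIGHLY_SUSPICIOUS_PER_DAY = 144  # more than this is "highly suspicious"


def classify_daily_rate(tweets_per_day: float) -> str:
    """Label an average posting rate. Name and labels are illustrative only."""
    if tweets_per_day > HIGHLY_SUSPICIOUS_PER_DAY:
        return "highly suspicious"
    if tweets_per_day >= SUSPICIOUS_PER_DAY:
        return "suspicious"
    return "below DFRLab thresholds"


# The account described earlier averaged 176.78 posts per day.
print(classify_daily_rate(176.78))  # highly suspicious
```

A label like this is only a prompt to look more closely at the account; it does not show that the account is automated.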

Twitonomy also gives a snappy overview of which users the account retweets the most, which users it regularly replies to, the hashtags it uses, and other information about its network of followers.

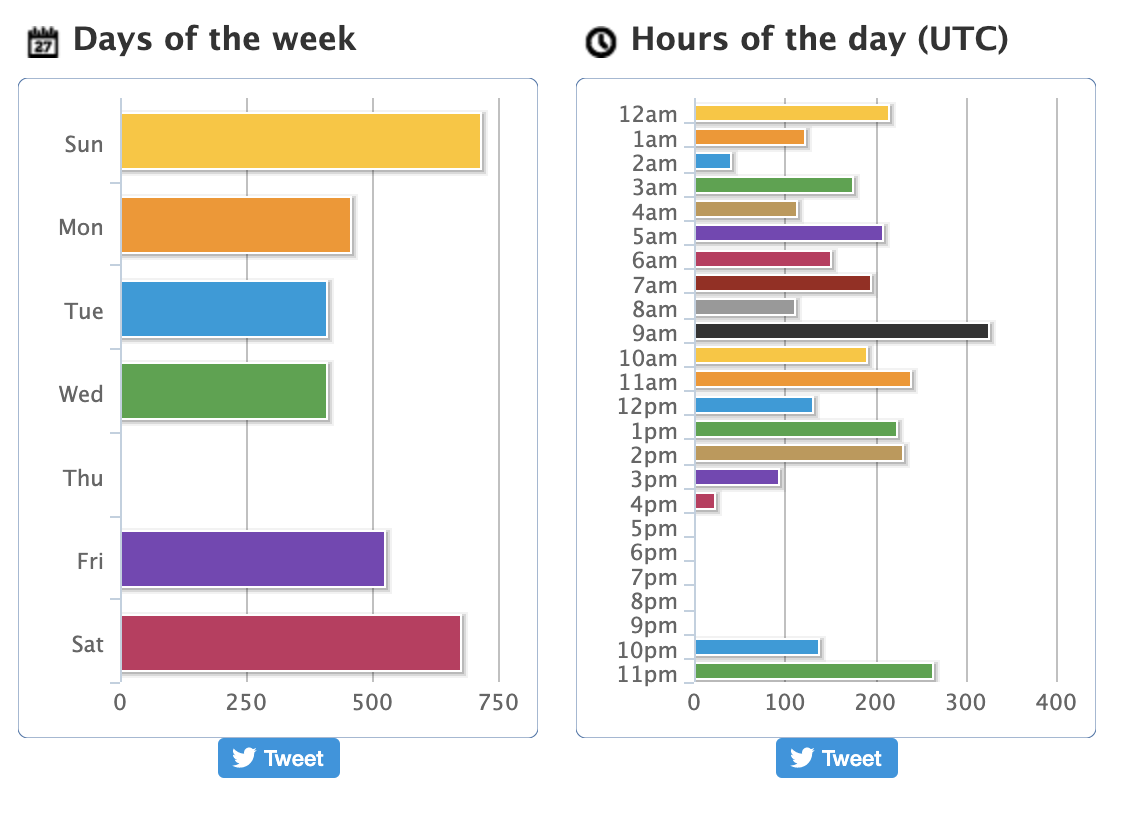

Checking the average time a user posts is also a quick way to identify patterns. If, for example, an account only posts at specific times or on certain days of the week, it could raise questions about whether those moments are defined by software.

The days and times a user posted on Twitter, showing explicit gaps in posting habits. Screenshot: Twitonomy

Alternatively, if an account posts around the clock every day, leaving no time for sleep, it could be a sign of automation.
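For anyone working with exported tweet timestamps rather than the chart, the same check can be roughed out in a few lines. This is a sketch under stated assumptions: the function name `longest_quiet_stretch` is hypothetical, the sample timestamps are made up for illustration, and the timestamps are assumed to already be in the time zone the account plausibly operates in.

```python
from datetime import datetime


def longest_quiet_stretch(timestamps: list[datetime]) -> int:
    """Return the longest run of consecutive clock hours (0-23, wrapping
    past midnight) in which the account never posted."""
    active = {ts.hour for ts in timestamps}
    if not active:
        return 24
    if len(active) == 24:
        return 0
    longest = current = 0
    # Walk the clock twice so a quiet run spanning midnight is counted whole.
    for hour in list(range(24)) * 2:
        if hour in active:
            current = 0
        else:
            current += 1
            longest = max(longest, current)
    return longest


# Made-up sample: posts at 09:00, 10:00 and 17:00 only.
sample = [datetime(2019, 10, 17, h) for h in (9, 10, 17)]
print(longest_quiet_stretch(sample))  # 15
```

A result close to zero across many days of data would match the "no time for sleep" pattern, while a long, regular gap is what most human accounts show. There is no agreed cut-off, so the number is one more thing to weigh rather than a verdict.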

Inconsistent messaging (for example, if a user posts in support of the Hong Kong protests one day, and against them another) can also suggest that an account is inauthentic.

Other red flags include a generic profile picture, multiple digits in the handle and retweeting other suspicious accounts.
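The handle check is the easiest of these to automate when screening a long list of accounts. The sketch below is illustrative only: the function names, the example handles and the default cut-off of four digits are all assumptions, not a rule from Twitonomy, DFRLab or First Draft.

```python
def count_digits(handle: str) -> int:
    """Count the digit characters in a Twitter handle."""
    return sum(ch.isdigit() for ch in handle)


def has_many_digits(handle: str, min_digits: int = 4) -> bool:
    """Flag handles with at least `min_digits` digits.

    The default of 4 is an arbitrary assumption; adjust it to taste.
    """
    return count_digits(handle) >= min_digits


# Hypothetical handles, invented for this example.
print(count_digits("@user48291037"))          # 8
print(has_many_digits("@user48291037"))       # True
print(has_many_digits("@example_reporter"))   # False
```

Plenty of genuine users have numbers in their handles, so a flag here only matters alongside the other indicators.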

More tips on things to look out for when identifying inauthentic accounts come from former First Draft partner Andy Carvin, who has tracked automated accounts for DFRLab.

Carvin shared advice on identifying bots at the 2019 Online News Association conference in New Orleans in September. None of the indicators mentioned here or those in his advice should be taken in isolation. As with everything in verification, we are trying to gather as much information as possible to make an informed decision. And even then we may not know with absolute certainty.

Read his guidance and the DFRLab’s ten indicators which can help identify a bot.

Remember, it is very difficult to establish for certain whether an account is automated. Carvin recommended describing users suspected of automation as “exhibiting bot-like behaviour” to prevent embarrassing mistakes and assumptions.
